Synthetic Data Explosion: How 2026 Reduces Data Costs by 70%

In every industry, AI projects now rise or fall on one simple question: Do you have the right data at the right cost?

Collecting, cleaning, labeling, securing, and governing high-quality data has become one of the largest line items in digital transformation budgets. Teams spend months negotiating data access, anonymizing sensitive information, and navigating compliance, often before a single model goes into production. In many organizations, data work quietly consumes more money than the models themselves.

Synthetic data changes that equation. Instead of waiting for real-world data to accumulate or paying steeply for it, you generate realistic, statistically faithful datasets on demand. Analysts and data scientists can simulate customer journeys, financial transactions, clinical scenarios, and edge-case failures in hours, not quarters.

Over the last two years, synthetic data has moved from a niche technique to a mainstream capability. Analysts expect synthetic datasets to surpass real data in AI model training by 2030, positioning them as a core enabler for advanced AI programs. At the same time, practitioners highlight very concrete benefits: lower labeling costs, faster development cycles, and fewer privacy bottlenecks. Synthetic data sits at the intersection of cost, speed, and compliance.

This blog explores why 2026 will be the year synthetic data goes from experimental to essential, and how organizations can realistically cut data-related costs by up to 70% while improving AI performance and governance. It covers the economics of synthetic data, the specific cost levers it enables, real-world use cases, risk and quality considerations, and a practical roadmap that leaders at Cogent Infotech’s clients can use to prepare for this shift.

Why data costs spiral out of control in AI programs

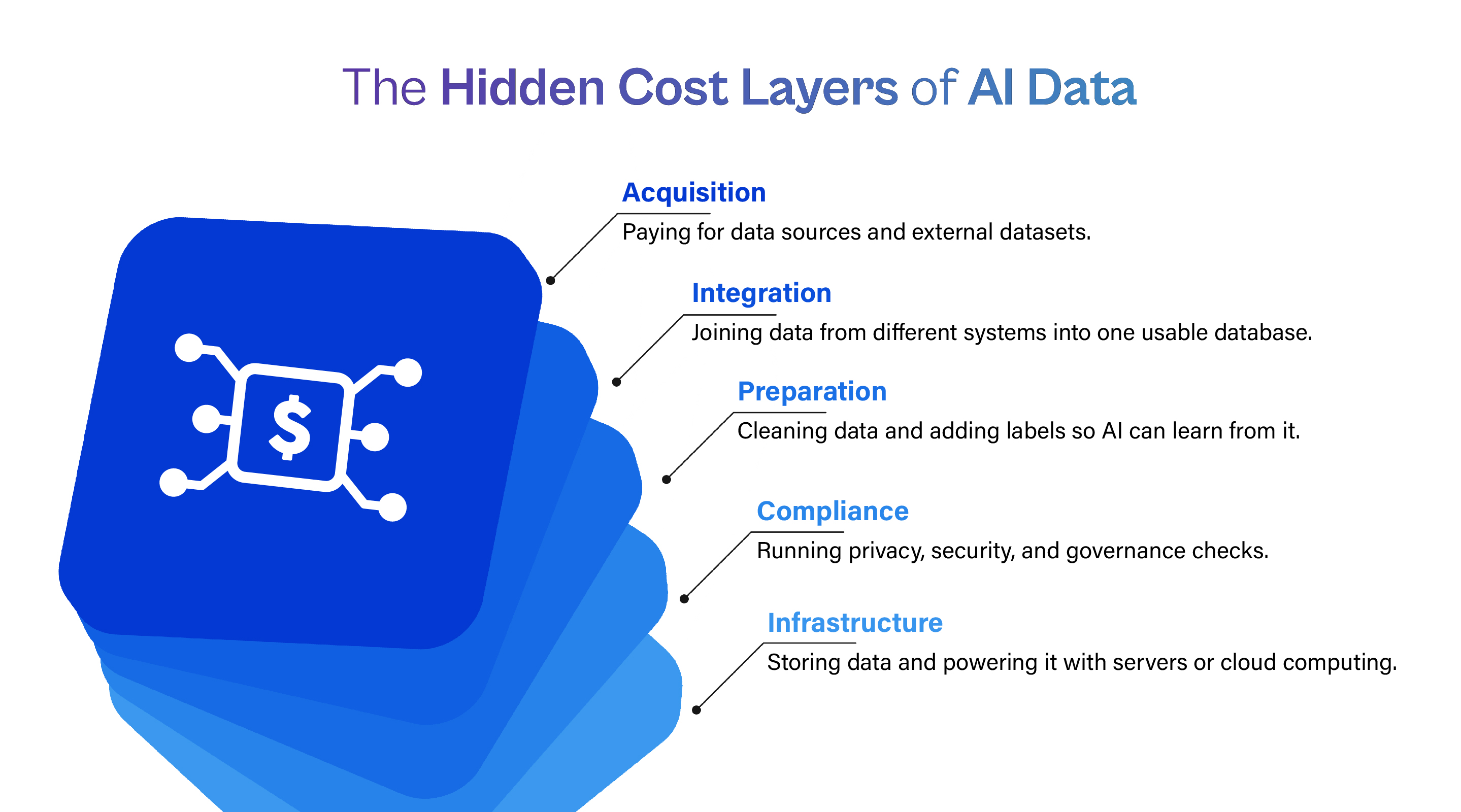

Before we talk about savings, it helps to unpack where the money actually goes in a “data-hungry” AI initiative.

The real cost of “having data”

When leaders say, “we have a lot of data,” they usually mean raw logs, forms, transaction tables, or documents sitting in different systems. Turning that raw material into model-ready datasets creates several cost drivers:

- Data acquisition and integration
  - Buying external data sets
  - Ingesting data from multiple operational systems
  - Building and maintaining pipelines into data warehouses or lakehouses

- Cleaning, transformation, and labeling
  - Removing duplicates, correcting formats, and handling missing values
  - Manually labeling images, transactions, or text for supervised learning
  - Re-labeling or re-sampling data when requirements change

- Security, privacy, and compliance
  - Anonymizing or pseudonymizing personally identifiable information
  - Conducting privacy and security reviews before data sharing
  - Implementing controls to satisfy regulations like GDPR, HIPAA, or new AI regulations

- Storage, compute, and experimentation
  - Hosting large datasets in the cloud
  - Running repeated training cycles on expanding datasets
  - Storing snapshots for auditability and reproducibility

Many professionals working in AI and analytics observe that data scientists often devote the majority of their time to preparing and labeling datasets instead of focusing on model development and refinement. This imbalance increases not only direct labor expenses but also hidden costs, as valuable talent spends less time generating innovation and more time managing data preparation tasks.

As organizations expand their AI initiatives, each new application, whether it involves fraud detection, customer retention, logistics optimization, or personalized experiences, introduces additional data demands. These requirements rapidly multiply as teams seek larger volumes, more detailed labeling, and better representation of rare but critical scenarios, causing overall costs to rise at an accelerating pace.

Why traditional cost-cutting doesn’t go far enough

Organizations have attempted to control these costs by:

- Centralizing data in modern platforms

- Implementing stronger data governance

- Automating parts of data preparation

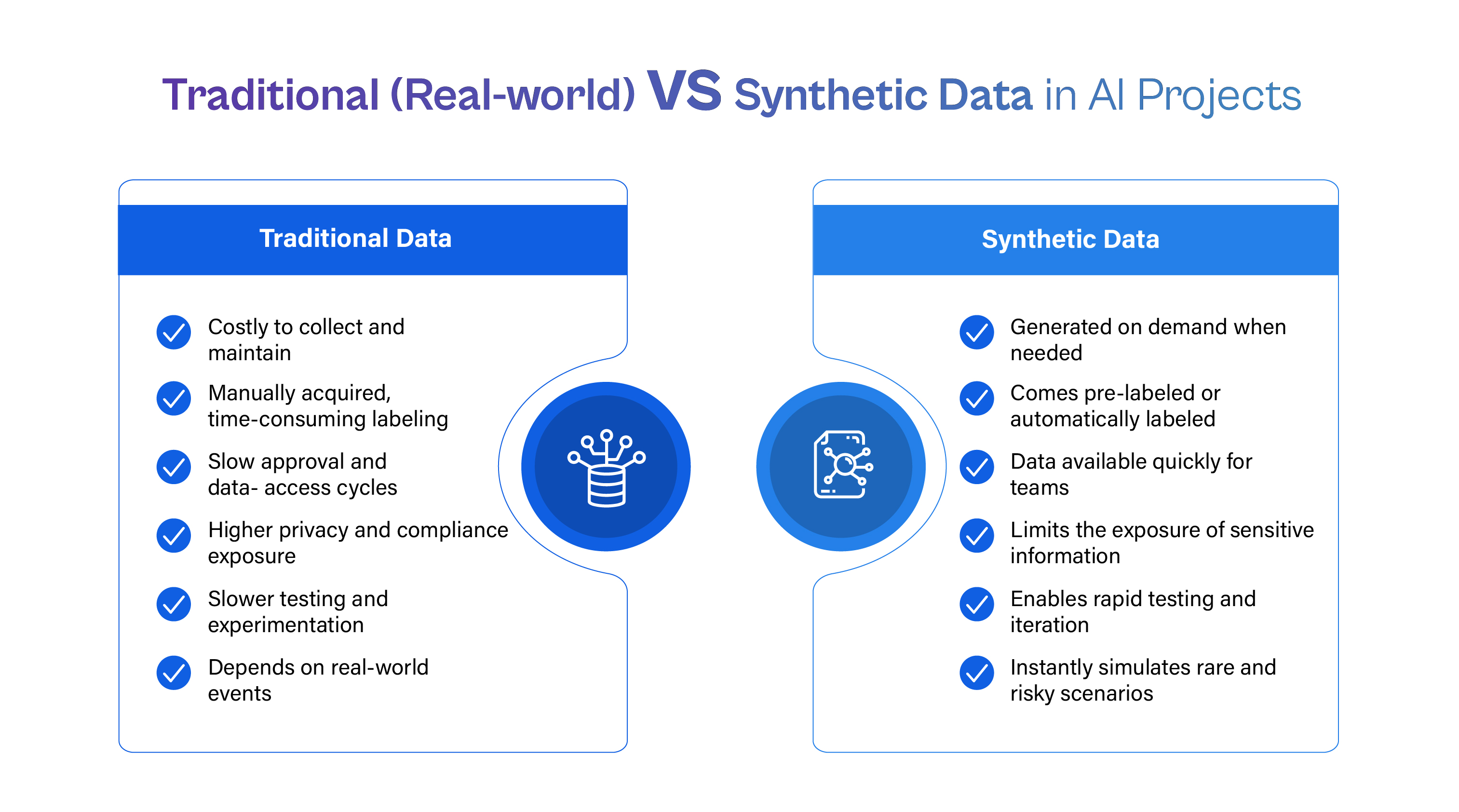

These moves help, but they don’t change a fundamental reality: real-world data remains slow, expensive, and constrained.

You still need time to collect enough examples. You still negotiate access and usage rights. You still face privacy reviews for every new sharing scenario. And you still struggle with rarely observed but business-critical edge cases, like fraud, outages, or safety incidents.

That’s why synthetic data is so powerful. It doesn’t just make existing processes more efficient; it rewrites the process.

2026: The synthetic data tipping point

Synthetic data is not new. Simulation, synthetic records, and model-based augmentation have existed for decades. What changes the game now is generative AI and enterprise-grade synthetic data platforms that can scale across use cases.

From an interesting experiment to an AI backbone

Gartner has described synthetic data as a “must-have” for the future of AI, noting that organizations increasingly rely on it when real data is expensive, biased, or regulated.

More recently, industry analyses report that a majority of the data used in specific AI applications is already synthetic, with some estimates suggesting that over 60% of the data used in AI applications in 2024 was synthetically generated or augmented.

A detailed 2025 article on synthetic data in enterprise AI points out that:

- Gartner expects synthetic data to make up roughly three-quarters of data used in AI projects by 2026, and

- By 2030, synthetic data is likely to “completely overshadow” real data in AI model training.

When you combine those adoption curves with the cost and privacy pressures CIOs face, 2026 looks less like a gradual evolution and more like an inflection point: synthetic data shifts from an optional accelerator to a foundational capability.

The 70% figure: what it actually means

You will see different “70%” statistics in the synthetic data conversation, and they cluster around a few themes:

- Gartner-linked analysis suggests that using synthetic data and transfer learning can reduce the volume of real data needed for machine learning by about 70%, and can cut exposure to privacy-violation sanctions by a similar margin.

- Business-oriented technology providers report that synthetic data adoption can deliver up to 70% cost reduction across data preparation, testing, and development when compared with traditional real-data pipelines.

Taken together, these figures don’t claim that all AI program costs drop by 70%. Instead, they indicate that the portions of your AI spend tied directly to data acquisition, labeling, and privacy handling can shrink by up to roughly 70% when you adopt synthetic data strategically.

If data-related work represents 40–60% of your AI budget, which is common in complex enterprises, then a 70% reduction in that slice translates into roughly 28–42% savings in total AI program costs (0.40 × 0.70 = 0.28 and 0.60 × 0.70 = 0.42), while also unlocking speed and flexibility.
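The relationship between the data slice and total program savings is a single multiplication, and it is worth checking directly. The sketch below is illustrative only: the function name `total_program_savings` and the sample budget shares are assumptions chosen for this example, not figures from any specific client.

```python
def total_program_savings(data_share: float, data_cost_reduction: float) -> float:
    """Fraction of the total AI budget saved when only the data slice shrinks."""
    if not (0.0 <= data_share <= 1.0 and 0.0 <= data_cost_reduction <= 1.0):
        raise ValueError("both inputs must be fractions between 0 and 1")
    return data_share * data_cost_reduction


# Illustrative budget shares for data-related work (assumed values).
for share in (0.40, 0.50, 0.60):
    saved = total_program_savings(share, 0.70)
    print(f"data share {share:.0%} -> total program savings {saved:.0%}")
```

A 70% cut applied to a 40% slice yields 28% of the total budget, and applied to a 60% slice yields 42%. Substituting your own measured data share gives a more defensible target than the headline figure.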

How synthetic data actually reduces costs

Let’s break down the main levers that synthetic data pulls to reach that “up to 70%” reduction in data costs.

Cutting data acquisition and labeling spend

In many industries, the most expensive part of an AI project is not model training; it’s the data itself:

- Buying third-party datasets

- Running large-scale surveys or studies

- Paying annotators to label millions of images, transactions, or text records

Recent enterprise observations highlight a stark contrast between traditional data preparation and synthetic alternatives. Manually labeling just one image can cost several dollars, while generating a similar synthetic version that already includes accurate labels typically costs only a fraction of that amount. When this difference plays out across thousands or millions of data points, the financial impact becomes significant.

Business technology analysts also emphasize the broad advantages synthetic data offers. Organizations adopting it often achieve substantial reductions in data-related expenses, accelerate preparation timelines, and strengthen privacy compliance. This combination allows teams to reduce reliance on expensive real-world datasets while maintaining performance and enabling more responsible, large-scale innovation.

Concretely, synthetic data helps you:

- Generate fully labeled datasets on demand.
  - Instead of paying annotators per record, your generator produces both the data and the labels in one step.

- Reuse generative models across projects.
  - Once you train a domain-specific generative model, say, for retail transactions, you can produce new datasets for multiple teams with marginal costs close to zero.

- Avoid repeated “study costs.”
  - Rather than running a new survey or trial for every variation, you simulate scenarios with synthetic respondents or records.
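To make "data and labels in one step" concrete, here is a minimal rule-based sketch using only the Python standard library. Everything in it is an assumption for illustration: the `Transaction` fields, the amount ranges, and the hour patterns are invented rules, not a model of real fraud behavior. A production generator would typically be fitted to a governed sample of real data.

```python
import random
from dataclasses import dataclass


@dataclass
class Transaction:
    amount: float
    hour: int
    is_fraud: bool  # the label is produced together with the record


def generate_transactions(n: int, fraud_rate: float, seed: int = 0) -> list[Transaction]:
    """Generate n labeled transactions from simple, hand-written rules."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    rows = []
    for _ in range(n):
        is_fraud = rng.random() < fraud_rate
        if is_fraud:
            # Assumed pattern: large amounts in the early morning hours.
            amount = round(rng.uniform(500.0, 5000.0), 2)
            hour = rng.choice([0, 1, 2, 3, 4])
        else:
            # Assumed pattern: skewed everyday amounts during daytime.
            amount = round(rng.lognormvariate(3.5, 0.8), 2)
            hour = rng.randint(8, 22)
        rows.append(Transaction(amount, hour, is_fraud))
    return rows


data = generate_transactions(1000, fraud_rate=0.05)
print(sum(t.is_fraud for t in data), "fraud rows out of", len(data))
```

Because the generator decides the class before it draws the features, every record arrives with its label and no annotation pass is needed. The same function can be called again with a different `fraud_rate` or `seed` for another team at negligible extra cost.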

Accelerating time-to-model and slashing development costs

Time is money, especially in AI programs where long lead times erode ROI.

In financial services, organizations using synthetic data to navigate regulatory constraints report a 40–60% reduction in model development time, since teams no longer wait months for approvals and data provisioning before starting experiments.

Shorter development timelines create multiple cost benefits:

- Fewer idle specialists waiting for data

- Faster iteration cycles and more experiments per budget cycle

- Earlier detection of unviable ideas, which avoids sunk costs

- Quicker path from proof-of-concept to production value

By 2026, as synthetic data platforms mature, the pattern will look familiar: teams that previously spent half their time arguing with data pipelines will spend that time improving models and delivering business impact.

Reducing privacy, security, and compliance overhead

Every time a team requests access to sensitive customer, patient, or citizen data, a queue forms in legal, compliance, and security. Reviews, anonymization, and risk assessments all cost money.

Healthcare and life sciences clearly demonstrate the financial and operational value of synthetic data. Organizations can recreate key characteristics of patient records without exposing real identities, enabling safe data use for research, testing, and model development while preserving confidentiality.

This shift significantly reduces reliance on real-world patient data and minimizes privacy risks. As synthetic data becomes more integrated into clinical and research workflows, organizations can limit their exposure to regulatory penalties and reduce the volume of sensitive data required, improving both compliance efficiency and cost control.

Those improvements come from:

- Testing and development with synthetic data instead of production records

- Sharing synthetic datasets across teams and regions without exposing personal information

- Reducing the need for bespoke anonymization projects for each new use case

For large enterprises, privacy and security work around data access can consume millions of dollars in staff time and tooling. When you shift a big part of your experimentation and testing portfolio to synthetic data, you compress that entire cost center.

Optimizing infrastructure and experimentation

Real-world data is “happenstance”: it rarely includes edge cases or rare events that your models must handle. Synthetic data lets you deliberately generate high-value, high-signal examples, which means you often need less data to achieve the same or better performance.

Analysts point out that well-designed synthetic datasets allow enterprises to:

- Cover rare events (e.g., fraud, outages, safety incidents) without waiting for them to occur

- Create task-specific datasets instead of copying full production tables

- Run large-scale performance tests by simulating billions of transactions without storing massive real-world logs

Because synthetic datasets can be smaller but richer, you spend less on:

- Long-term storage of historical data

- High-end compute for training on bloated, noisy datasets

- Network transfer as teams move data across environments

You don’t just “do the same work cheaper”; you change the shape of the work.

Where synthetic data already delivers value

By 2026, synthetic data will move far beyond experimental environments and become a central component of mainstream AI operations. Organizations will integrate it directly into production workflows to support training, testing, and validation across key business functions. Instead of being treated as an emerging technique, it will serve as foundational infrastructure for scalable AI systems. This shift will redefine how enterprises approach data generation, model development, and regulatory compliance.

Financial services and insurance

Banks, insurers, and fintechs are subject to some of the strictest data regulations, making them prime adopters of synthetic data.

Industry reports highlight that:

- Financial institutions use synthetic data to simulate fraud patterns and balance skewed datasets, increasing model accuracy without exposing real customer records.

- Synthetic data lets teams sidestep lengthy approvals for using live transactional data in non-production environments, contributing directly to that 40–60% reduction in development timelines.

Result: faster fraud detection systems, better credit risk scoring, and more robust stress testing, delivered with lower compliance and data-ops spend.
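As a rough illustration of balancing a skewed dataset, the sketch below creates synthetic minority-class rows by interpolating between pairs of real minority rows. This is a simplified variant inspired by SMOTE: it picks pairs at random rather than by nearest neighbor, and the feature tuples (amount, hour) are invented sample values, not real records.

```python
import random


def oversample_minority(minority, target_count, seed=0):
    """Create synthetic points on line segments between random minority pairs."""
    if len(minority) < 2:
        raise ValueError("need at least two minority samples to interpolate")
    rng = random.Random(seed)
    synthetic = []
    while len(minority) + len(synthetic) < target_count:
        a, b = rng.sample(minority, 2)  # two distinct real rows
        t = rng.random()                # position along the segment a -> b
        synthetic.append(tuple(x + t * (y - x) for x, y in zip(a, b)))
    return synthetic


# Hypothetical fraud rows: (amount, hour of day).
fraud = [(900.0, 2.0), (1500.0, 3.0), (4200.0, 1.0)]
new_rows = oversample_minority(fraud, target_count=10)
print(len(fraud) + len(new_rows))
```

Interpolated rows always stay inside the range of the real minority samples, which keeps them plausible but also means they cannot invent genuinely new fraud patterns. Dedicated generative models or simulations are the usual choice when novelty matters.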

Healthcare and life sciences

In healthcare and life sciences, synthetic data now supports:

- Virtual control groups that reduce the number of real patients needed for specific trials

- Cross-institution collaboration where hospitals share synthetic patient datasets instead of raw clinical records

- Exploratory research and early-stage modeling using synthetic cohorts that mirror past trials

These practices help organizations:

- Lower recruitment and data collection costs

- Shorten trial design cycles

- Reduce legal and ethical friction around data sharing

At scale, synthetic clinical data doesn’t just save money; it accelerates time-to-therapy.

Retail, marketing, and customer analytics

Customer-facing industries need rich behavioral data, but they also face intense scrutiny over privacy.

Synthetic data supports:

- Hyper-personalization experiments without exposing real individual histories

- Marketing mix and campaign optimization, where synthetic customer journeys approximate complex real-world behavior

- “What-if” scenario testing for pricing, promotions, and product recommendations

Business technology reports show that organizations using synthetic customer data for analytics and testing achieve up to 70% lower data acquisition costs and significantly faster campaign cycles, because they no longer depend solely on live market research or third-party panels.

Software testing and digital products

Any data-driven application requires test data: banking apps, insurance portals, HR systems, logistics platforms. Traditionally, teams either copy production data (risky) or hand-craft small synthetic samples (incomplete).

Synthetic data has become a powerful asset in software testing, enabling teams to create highly realistic, large-scale datasets that preserve privacy while simulating extreme system loads. This approach allows organizations to evaluate performance, stability, and resilience under conditions that closely resemble real-world usage without relying on sensitive production data. (MIT)

The benefits of this include:

- Lower test-data management costs

- Reduced risk of exposing sensitive production data in lower environments

- Faster regression testing across more scenarios

By 2026, for many enterprises, unit tests and load tests will rely more on synthetic data than on masked production snapshots.

Risk, quality, and governance: doing synthetic data right

Synthetic data offers immense value, but it is not a flawless solution and can create serious problems if applied without care. Poorly designed synthetic datasets may distort real-world patterns, introduce bias, or weaken model accuracy. Leaders must understand these risks clearly and establish strong validation processes to ensure data quality. Effective governance and oversight are essential to ensure synthetic data supports informed, reliable decision-making.

Quality and “reality gaps”

MIT experts note that models trained exclusively on synthetic data may struggle with real-world inputs if teams don’t validate them carefully.

Common issues include:

- Over-simplified distributions that don’t capture real-world variability

- Synthetic edge cases that don’t occur in practice

- Overfitting to the generator’s biases rather than actual domain behavior

Best practices:

- Use hybrid datasets: start with real data, then augment with synthetic samples.

- Evaluate synthetic data with statistical similarity and task-specific metrics.

- Regularly compare model performance on real hold-out datasets to ensure that synthetic training doesn’t degrade real-world accuracy.
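One common way to quantify statistical similarity for a single numeric column is the two-sample Kolmogorov-Smirnov statistic, the largest gap between the two empirical distribution functions. The sketch below is a minimal standard-library version for illustration. The sample values are made up, and a real validation suite would cover every column, joint distributions, and task-level metrics as well.

```python
from bisect import bisect_right


def ks_statistic(real, synthetic):
    """Maximum gap between the two empirical CDFs (0 = identical, 1 = disjoint)."""
    if not real or not synthetic:
        raise ValueError("both samples must be non-empty")
    r, s = sorted(real), sorted(synthetic)
    gap = 0.0
    for x in r + s:  # the maximum gap is always reached at an observed value
        cdf_r = bisect_right(r, x) / len(r)
        cdf_s = bisect_right(s, x) / len(s)
        gap = max(gap, abs(cdf_r - cdf_s))
    return gap


# Hypothetical order values from a real hold-out set and a synthetic set.
real_values = [12.0, 15.5, 18.0, 22.5, 30.0, 41.0]
synthetic_values = [11.0, 16.0, 19.5, 21.0, 33.0, 39.0]
print(ks_statistic(real_values, synthetic_values))
```

A value near 0 suggests the synthetic column tracks the real one closely. What counts as "close enough" is a policy decision that should be set per use case rather than hard-coded.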

Bias and fairness

Synthetic data comes from models trained on real data, which means:

- It can replicate existing bias, such as demographic imbalances or skewed sampling.

- It can also correct bias if teams deliberately generate more balanced synthetic populations.

To use synthetic data responsibly, organizations must:

- Audit source datasets

- Explicitly design synthetic scenarios to cover underrepresented groups

- Monitor downstream models for disparate impact
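Monitoring for disparate impact can start with a very simple ratio of favorable-outcome rates between two groups. The sketch below is an assumption-laden illustration: the 0/1 outcome lists are invented, and the 0.8 threshold reflects the widely cited "four-fifths" heuristic, which may or may not match the standard your regulators or policies require.

```python
def selection_rate(outcomes):
    """Share of favorable (1) decisions in a list of 0/1 model outcomes."""
    if not outcomes:
        raise ValueError("outcomes must not be empty")
    return sum(outcomes) / len(outcomes)


def disparate_impact_ratio(group_a, group_b):
    """Lower selection rate divided by the higher one (1.0 means parity)."""
    low, high = sorted((selection_rate(group_a), selection_rate(group_b)))
    return 1.0 if high == 0 else low / high


# Hypothetical approval decisions for two demographic groups.
ratio = disparate_impact_ratio([1, 1, 1, 0], [1, 0, 0, 0])
flagged = ratio < 0.8  # four-fifths heuristic; use the threshold your policy requires
print(round(ratio, 2), flagged)
```

Running the same check on models trained with and without synthetic data shows whether the synthetic samples narrowed or widened the gap.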

The cost story doesn’t matter if bias risks create regulatory or reputational damage.

Governance and traceability

As synthetic data usage scales, you need the same governance disciplines you apply to real data:

- Lineage: Which real datasets and models generated this synthetic dataset?

- Purpose limitation: For which use cases is a synthetic dataset approved?

- Access control: Who can access which synthetic datasets, and in what environments?

Modern synthetic data metrics and evaluation tools already help enterprises measure similarity, privacy risk, and utility, but leaders must embed these checks into standard MLOps and data-ops pipelines.
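The three governance questions above map naturally onto a small metadata record attached to each synthetic dataset. The following is a hypothetical sketch, not the schema of any particular catalog product. The class name, field names, and sample values are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SyntheticDatasetRecord:
    name: str
    source_datasets: tuple        # lineage: real datasets behind the generator
    generator: str                # lineage: model or rule set that produced the data
    approved_purposes: frozenset  # purpose limitation
    allowed_roles: frozenset      # access control

    def may_use(self, role: str, purpose: str) -> bool:
        """Allow access only when both the role and the purpose are approved."""
        return role in self.allowed_roles and purpose in self.approved_purposes


record = SyntheticDatasetRecord(
    name="retail_txn_synth_v1",
    source_datasets=("retail_txn_2024_sample",),
    generator="tabular-generator-v1",
    approved_purposes=frozenset({"load_testing", "model_prototyping"}),
    allowed_roles=frozenset({"data_scientist", "qa_engineer"}),
)
print(record.may_use("qa_engineer", "load_testing"))
print(record.may_use("qa_engineer", "marketing_outreach"))
```

Making the record immutable (`frozen=True`) means any change to lineage or approvals produces a new record, which keeps an audit trail straightforward.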

A practical roadmap to 70% lower data costs by 2026

For organizations looking ahead to 2026, the goal isn’t to “replace all data with synthetic.” The goal is to blend synthetic and real data to maximize value while minimizing cost and risk.

Here’s a pragmatic roadmap that aligns with Cogent Infotech’s analytics and AI engagements:

Step 1: Map your data-cost hotspots

Start with a simple question: where does data slow us down or cost us the most?

Typical hotspots:

- Highly regulated domains (healthcare, finance, public sector)

- Projects that depend on external vendors or panels for data

- Use cases with heavy labeling or re-labeling requirements

- Complex integration projects that repeatedly copy production data into test environments

Quantify:

- Annual spend on data acquisition, labeling, and compliance

- Time lost to approvals, privacy reviews, or data wrangling

- Opportunity cost from delayed launches or scaled-back experiments

This baseline makes the 70% reduction target concrete instead of abstract.

Step 2: Identify “synthetic-ready” use cases

Not every problem is well-suited to synthetic data. You get the most benefit when:

- You can define clear statistical patterns or rules for the domain (e.g., transactions, sensor readings, claims, orders).

- You face regulatory or privacy barriers to using real data.

- You need to simulate rare events that real data barely covers.

Good starter use cases:

- Generating test data for new applications

- Simulating fraud or anomaly patterns

- Creating marketing or customer-behavior scenarios

- Building virtual cohorts for healthcare or HR analytics

Step 3: Choose generation techniques and platforms

Depending on your use cases, you might use:

- Rule-based generators for simple tabular datasets

- Simulation and synthetic worlds for physical systems (manufacturing, mobility)

- Generative models (GANs, VAEs, diffusion models, or tabular generative models) for complex structured or unstructured data

Industry reviews emphasize that modern synthetic data platforms now combine these techniques and provide no-code/low-code interfaces for non-experts, lowering adoption barriers and spreading benefits beyond core data-science teams.

Step 4: Build a hybrid synthetic–real data pipeline

For each use case, design a repeatable pattern:

- Start from a curated sample of real data
  - Apply governance and privacy checks once at this stage.

- Train a domain-specific generative model or configure rules/simulations

- Generate synthetic datasets tuned to:
  - Specific edge cases
  - Certain customer segments
  - Performance testing volumes

- Validate synthetic data
  - Statistical similarity to real data
  - Privacy risk metrics
  - Task-level performance (e.g., model accuracy on a real hold-out set)

- Deploy synthetic datasets via self-service access
  - Give developers, testers, and analysts easy, governed access to synthetic data pools.

This pipeline turns synthetic data from a one-off experiment into an enterprise service.
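The pattern above can be sketched end to end in a few lines. This toy version is deliberately simplistic and rests on stated assumptions: it models one numeric column as a normal distribution, uses a mean comparison as the similarity check, and treats exact value matches as the only privacy signal. Real pipelines would use richer generators and metrics. All function names and sample values are hypothetical.

```python
import random
import statistics


def fit_generator(real_train):
    """'Train' the simplest possible generator: a mean and standard deviation."""
    return statistics.mean(real_train), statistics.stdev(real_train)


def generate(params, n, seed=0):
    """Draw n synthetic values from the fitted normal distribution."""
    mean, std = params
    rng = random.Random(seed)
    return [rng.gauss(mean, std) for _ in range(n)]


def validate(real_train, real_holdout, synthetic, tolerance):
    """Compare against a real hold-out set and check for copied training values."""
    mean_gap = abs(statistics.mean(real_holdout) - statistics.mean(synthetic))
    leaked = set(real_train) & set(synthetic)
    return {
        "mean_gap": mean_gap,
        "similar": mean_gap <= tolerance,
        "leaked_rows": len(leaked),
    }


# Hypothetical curated sample, split into training and hold-out parts.
real_train = [100.0, 110.0, 95.0, 105.0, 90.0, 120.0]
real_holdout = [102.0, 98.0, 108.0]

synthetic = generate(fit_generator(real_train), n=500)
report = validate(real_train, real_holdout, synthetic, tolerance=5.0)
print(report)
```

Only datasets whose report passes both checks would move on to the self-service step. Keeping the hold-out set out of generator training is what makes the similarity check meaningful.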

Step 5: Bake governance, metrics, and ROI tracking into the process

Finally, treat synthetic data as a first-class asset:

- Add synthetic datasets to your data catalog, labeled clearly.

- Define policies for when teams must or must not use synthetic data.

- Track ROI:
  - Hours saved per project
  - Reduction in labeling/acquisition spend
  - Decrease in privacy-review cycles
  - Time from idea to production

As you gather results, you can refine the 70% target by domain. Some functions might see 50% savings; others may exceed 70% when synthetic data replaces expensive external panels or specialized studies.

What this means for leaders in 2026

By 2026, forward-thinking organizations will no longer debate whether synthetic data belongs in their AI strategy. Instead, they will focus on how effectively they can integrate it into their data ecosystems to stay competitive.

Leaders will increasingly ask questions such as:

- Where can synthetic data unlock the greatest efficiency and cost advantage?

- How do we manage real and synthetic data together as a unified, governed asset?

- Which teams still depend on slow, high-cost data practices, and how can we modernize them?

Across industries, enterprises already view synthetic data as a strategic capability rather than an experimental add-on. Analysts predict that synthetic datasets will dominate AI model training within the next few years, reshaping how companies think about scale, privacy, and performance. At the same time, real-world implementations demonstrate tangible results, including significant reductions in data preparation costs, faster model development cycles, and lower exposure to privacy risk.

For organizations operating in an environment defined by rapid AI adoption, rising compliance pressure, and constant demand for innovation, synthetic data delivers three decisive advantages:

- It removes bottlenecks that delay AI initiatives due to limited or sensitive real-world data.

- It rebalances budgets by shifting spend away from expensive data collection and toward innovation and optimization.

- It enables safe experimentation at scale, allowing teams to test, fail, refine, and iterate without compromising security or compliance.

Leaders who embrace this shift will move beyond reactive data strategies and toward proactive, cost-efficient, and future-ready AI ecosystems.

Those who delay adoption may still deploy AI solutions, but they will face higher costs, slower timelines, and greater operational friction, all while competitors accelerate.

Conclusion: The economic reset of AI data in 2026

The rise of synthetic data marks a fundamental turning point in how organizations fuel artificial intelligence. For years, real-world data has been the backbone of AI systems, but that reliance has come with escalating costs, privacy constraints, and operational complexity. Synthetic data changes this model entirely. It introduces speed, flexibility, and economic efficiency into a process that once relied on slow and expensive data cycles.

By 2026, the shift will no longer feel experimental. It will feel inevitable. Organizations that adopt synthetic data thoughtfully can reduce data-related costs by up to 70%, accelerate AI development timelines, and expand innovation without increasing compliance risk. More importantly, they gain the freedom to explore possibilities that real-world data cannot support at scale, from rare-event simulation to advanced scenario planning and safer experimentation.

The future of AI does not depend on collecting more data at any cost. It depends on using smarter data, generated with purpose, governed with discipline, and aligned with real-world outcomes. Synthetic data offers that pathway. The question is no longer whether it will transform AI economics, but how quickly organizations choose to harness its full potential.

Ready to cut AI data costs without slowing innovation?

Explore how synthetic data can fit into your AI roadmap. Connect with Cogent Infotech to assess where synthetic data can deliver the fastest cost savings, stronger governance, and measurable ROI for your organization.
