The XAI Reckoning: Turning Explainability Into a Compliance Requirement by 2026

In 2026, enterprises will face a reckoning in artificial intelligence, not over speed or scale, but over trust. Regulators, auditors, and customers are demanding transparency, fairness, and accountability in automated systems. Explainable AI (XAI) and Trustworthy AI are no longer “nice to have” features; they are becoming compliance requirements across HR, finance, healthcare, and security.

The EU AI Act’s enforcement timelines, U.S. sector‑specific rules, and rising audits for algorithmic fairness all signal the end of the black‑box era. Enterprises that cannot explain their AI decisions risk lawsuits, audit failures, reputational damage, and regulatory penalties. 2026 marks the XAI Reckoning, the tipping point where explainability and trustworthiness shift from optional to mandatory, and where compliance depends on enterprises proving that their AI is transparent, fair, and defensible.

Why 2026 Triggers the XAI Reckoning

2026 is not simply another year in the AI adoption curve. It is the inflection point where explainability becomes a compliance mandate. For years, enterprises have deployed black‑box models with little scrutiny. Regulators, auditors, and customers are now converging on a single demand: transparency. The EU AI Act’s enforcement deadlines, U.S. sector‑specific rules, and the rise of algorithmic audits all collide in 2026, creating a new baseline for enterprise accountability. This is the moment when enterprises must prove that their AI systems are not only powerful but also explainable, fair, and defensible.

EU AI Act enforcement

From August 2026, most of the EU AI Act’s obligations for high‑risk systems are scheduled to apply, turning transparency from policy guidance into a binding legal requirement for enterprises operating in Europe. High‑risk systems such as healthcare diagnostics, credit scoring, HR decisioning, and public safety applications must provide clear, auditable explanations of how decisions are made. Organizations will be expected to document model lineage, rationale outputs, safeguards, and bias testing results, producing artifacts like model cards and decision logs to prove compliance. Failure to meet these obligations can result in penalties, blocked product launches, or suspension of operations in sensitive sectors. As the first comprehensive AI law to tie transparency obligations directly to market access, the EU AI Act sets a precedent that other jurisdictions are beginning to follow.

U.S. sector‑specific rules

In the United States, regulators are embedding explainability into existing civil rights, consumer protection, and anti‑discrimination laws, creating sector‑specific obligations that enterprises must meet. Healthcare providers must demonstrate how AI triage systems prioritize patients, ensuring that life‑critical decisions are transparent and defensible. Financial institutions are required to explain credit approvals and insurance pricing, offering rationale outputs that regulators and customers can review. Employers must justify automated hiring and promotion decisions, proving that algorithms do not perpetuate bias or discrimination. These requirements are enforceable obligations, not voluntary guidelines, and enterprises that fail to comply risk lawsuits, regulatory fines, reputational damage, and loss of customer trust. By 2026, explainability in the U.S. will become a frontline compliance issue across healthcare, finance, and employment.

Rise of algorithmic audits

By 2026, independent audits for fairness, bias, and provenance will become mandatory across regulated industries, moving from best practice to legal requirement. Regulators in the EU, U.S., and Asia are formalizing audit regimes, with the EU AI Act requiring conformity assessments for high‑risk systems (carried out by third parties for some categories) and U.S. financial regulators piloting algorithmic audit programs for credit scoring and insurance pricing.

These audits now extend beyond technical performance to examine governance structures, data provenance, bias testing, and human oversight policies, requiring enterprises to produce artifacts such as model cards, decision logs, bias testing results, and lineage documentation. A lack of documentation or explainability can lead to audit failures that block product launches, trigger compliance penalties, or halt operations in sensitive sectors like healthcare and finance. The operational and financial impact is significant, as failed audits erode investor confidence and delay market entry, while successful audits increasingly serve as proof of resilience and trustworthiness for customers and stakeholders.

Customer and regulator pressure

By 2026, transparency isn’t just a box to tick for regulators; it’s something customers expect too. People want straightforward answers when they’re denied a loan, passed over for a job, or moved down a healthcare queue. If enterprises can’t explain these decisions in plain language, they risk losing trust and credibility. Regulators are reinforcing this expectation, requiring explanations that non‑technical audiences can actually understand. In practice, this means explainability is both a compliance requirement and a way to keep customers confident and loyal.

Global regulatory convergence

Around the world, governments are moving from guidelines to hard rules. In 2026, countries across Asia, the Middle East, and Latin America are rolling out transparency and risk‑classification mandates that look a lot like the EU AI Act. For global enterprises, this creates overlapping obligations that can’t be ignored. Explainability is no longer a regional issue; it’s a global standard. Multinationals will need to harmonize their compliance strategies so their AI systems meet expectations everywhere they operate.

Severe financial penalties for non‑compliance

The cost of ignoring transparency is steep. Under the EU AI Act, the most serious violations can mean fines of up to €35 million or 7% of global annual turnover, whichever is higher. That turns explainability from a nice‑to‑have ethical principle into a financial imperative. Enterprises that fail to document and explain their AI decisions risk not only regulatory sanctions but also reputational damage, investor backlash, and operational setbacks. In short, the price of opacity is far higher than the investment needed to build explainability into AI systems.
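To make the arithmetic concrete, here is a minimal sketch of how the ceiling for that top penalty tier scales with company size. It assumes the cap is the higher of the fixed amount and the turnover share; the function name and the example turnovers are hypothetical, and real fines are set case by case and may fall well below the ceiling.

```python
# Illustrative only: ceiling of the top EU AI Act penalty tier,
# assuming the cap is the higher of a fixed amount and a share of turnover.
FIXED_CAP_EUR = 35_000_000
TURNOVER_SHARE_PERCENT = 7


def max_fine_eur(global_annual_turnover_eur: int) -> int:
    """Return the upper bound of the fine in whole euros."""
    # Integer arithmetic avoids floating-point rounding on large amounts.
    turnover_based = global_annual_turnover_eur * TURNOVER_SHARE_PERCENT // 100
    return max(FIXED_CAP_EUR, turnover_based)


if __name__ == "__main__":
    # Hypothetical turnovers: EUR 100 million and EUR 2 billion.
    for turnover in (100_000_000, 2_000_000_000):
        print(f"turnover {turnover:,} EUR -> cap {max_fine_eur(turnover):,} EUR")
```

Under these assumptions, the fixed amount dominates for smaller companies, while the turnover share dominates once global turnover exceeds €500 million.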

The Hidden Dangers of Black‑Box AI

Enterprises that continue to rely on opaque, black‑box AI systems face escalating risks in 2026. These models may deliver powerful predictions, but without transparency they expose organizations to liabilities that can no longer be ignored. Regulators demand auditable explanations, customers expect clarity, and investors question the resilience of systems that cannot be defended in audits or courts. In this environment, transparency is no longer optional. It is the foundation of enterprise legitimacy and competitiveness.

- Legal defensibility gaps: Courts and regulators increasingly demand that enterprises explain how automated decisions are made. Without transparency, organizations cannot defend themselves in lawsuits involving credit denials, hiring rejections, or healthcare triage outcomes. Legal teams are left without evidence to justify decisions, creating exposure to class‑action suits and regulatory penalties.

- High‑risk use cases: Black‑box models are especially dangerous in sensitive domains such as credit scoring, insurance pricing, healthcare triage, and HR decisioning. These areas directly affect human rights and livelihoods. A lack of explainability in these contexts can lead to accusations of discrimination, unfair treatment, or negligence, amplifying reputational and financial damage.

- Shadow AI adoption: Business units often deploy unreviewed or unexplainable AI tools outside official governance frameworks. These “shadow AI” systems bypass compliance checks, creating blind spots for risk leaders. In 2026, regulators are expected to treat shadow AI as a governance failure, holding enterprises accountable for systems they did not formally approve.

- Audit failures: Algorithmic audits are becoming mandatory across industries. Enterprises that cannot provide model documentation, lineage tracking, or rationale outputs risk failing these audits. Audit failures can block product launches, trigger regulatory penalties, and undermine investor confidence. In regulated sectors, a failed audit may even halt operations until compliance is restored.

- Bias and discrimination risks: Opaque models often conceal systemic bias. Without explainability, enterprises cannot detect or mitigate discriminatory outcomes. This exposes organizations to reputational damage, regulatory fines, and loss of customer trust. In 2026, fairness audits will make bias detection a compliance requirement, not just an ethical aspiration.

- Governance breakdowns: Boards, compliance officers, and regulators cannot oversee systems they do not understand. Lack of explainability undermines governance structures, leaving accountability gaps. This breakdown erodes confidence in enterprise leadership and increases the likelihood of regulatory intervention.

- Operational disruption: When AI decisions cannot be explained, workflows stall. Employees hesitate to act on outputs they cannot justify, leading to delays in finance, HR, and healthcare operations. Opaque systems create bottlenecks that reduce efficiency and increase costs.

What Explainable & Trustworthy AI Actually Requires

Explainable and Trustworthy AI is not a slogan or a distant aspiration. By 2026, it will become the operational backbone of compliance. Regulators, auditors, and customers will no longer accept vague assurances about fairness or safety. Enterprises must demonstrate, with evidence, how their AI systems make decisions, how bias is detected and corrected, and how accountability is enforced.

This means moving beyond abstract principles into concrete practices. Explainability requires technical transparency, governance discipline, and human oversight working together. Trustworthy AI is built on interpretability, fairness, provenance, robustness, and safety guardrails, each one a compliance requirement, not an optional enhancement.

Model interpretability

Enterprises must provide clear insights into how models reach their conclusions. For example, banks now use model cards that show which features most influenced a credit approval or denial. Healthcare providers are piloting diagnostic AI that outputs a rationale alongside predictions, allowing doctors to understand why a patient was flagged for urgent care.
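As a rough illustration of what "which features most influenced a decision" can look like, the sketch below ranks per‑feature contributions for a simple linear score. Everything in it is an assumption made for the example: the feature names, weights, and baseline values are hypothetical, and production models typically need dedicated attribution methods rather than this direct calculation.

```python
# Hypothetical linear credit score.
# Contribution of a feature = weight * (applicant value - baseline value).
WEIGHTS = {"income_k": 0.5, "debt_pct": -0.5, "late_payments": -5.0}
BASELINE = {"income_k": 50.0, "debt_pct": 30.0, "late_payments": 1.0}


def explain(applicant: dict) -> list:
    """Return (feature, contribution) pairs, largest absolute effect first."""
    contributions = {
        name: WEIGHTS[name] * (applicant[name] - BASELINE[name])
        for name in WEIGHTS
    }
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)


if __name__ == "__main__":
    applicant = {"income_k": 40.0, "debt_pct": 55.0, "late_payments": 3.0}
    for feature, contribution in explain(applicant):
        print(f"{feature:>14}: {contribution:+.1f}")
```

A ranked list like this is the kind of rationale output that can sit alongside a model card entry, so a reviewer can see at a glance that, in this made‑up case, the debt level weighed most heavily against the applicant.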

Bias detection and mitigation

Continuous monitoring for bias is essential. Large employers are deploying fairness dashboards to track hiring algorithms, ensuring candidates are not disadvantaged by gender or ethnicity. In insurance, bias testing frameworks are used to confirm pricing models do not unfairly penalize certain demographics.
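One common starting point for such monitoring is to compare selection rates across groups. The sketch below computes a disparate impact ratio and flags it against the 0.8 threshold of the "four‑fifths" rule of thumb used in U.S. employment contexts. The data, group labels, and function names are hypothetical, and a single ratio is a screening signal, not a legal determination.

```python
from collections import defaultdict

FOUR_FIFTHS_THRESHOLD = 0.8  # rule-of-thumb screening threshold


def selection_rates(outcomes):
    """outcomes: iterable of (group, selected) pairs -> {group: selection rate}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in outcomes:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no disparity
    return min(rates.values()) / highest


if __name__ == "__main__":
    # Hypothetical screening results: 5 of 10 selected in group A, 3 of 10 in group B.
    outcomes = [("A", i < 5) for i in range(10)] + [("B", i < 3) for i in range(10)]
    ratio = disparate_impact_ratio(selection_rates(outcomes))
    print(f"ratio={ratio:.2f}, flag={ratio < FOUR_FIFTHS_THRESHOLD}")
```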

Data lineage and provenance tracking

Regulators expect enterprises to document where data comes from and how it flows through AI systems. Financial institutions now maintain decision logs that trace every dataset used in credit scoring, making it possible to defend decisions during audits. Hospitals are adopting provenance tracking to ensure patient data used in AI triage is legitimate and compliant with privacy laws.
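A lightweight way to make lineage verifiable is to fingerprint each dataset and record which upstream datasets it was derived from. The sketch below does this with SHA‑256 hashes from Python's standard library. The record layout, field names, and sample data are assumptions for illustration, not a prescribed audit format.

```python
import hashlib
import json


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 hex digest of a dataset's raw bytes."""
    return hashlib.sha256(data).hexdigest()


def lineage_record(dataset_name, data, source, parent_fingerprints=()):
    """Describe one dataset and the upstream datasets it was derived from."""
    return {
        "dataset": dataset_name,
        "source": source,
        "sha256": fingerprint(data),
        "parents": list(parent_fingerprints),
    }


if __name__ == "__main__":
    # Hypothetical raw extract and a cleaned version derived from it.
    raw = b"applicant_id,income\n1,52000\n2,\n"
    cleaned = b"applicant_id,income\n1,52000\n"
    raw_rec = lineage_record("applications_raw", raw, "core-banking export")
    clean_rec = lineage_record(
        "applications_clean", cleaned, "cleaning job", [raw_rec["sha256"]]
    )
    print(json.dumps([raw_rec, clean_rec], indent=2))
```

Because the hash changes whenever the underlying bytes change, an auditor can confirm that the dataset referenced in a decision log is the one that was actually used.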

Robustness, reliability, and drift monitoring

AI systems must remain stable under stress and adaptable to changing data. Retail companies use drift monitoring tools to detect when recommendation engines start producing irrelevant results due to seasonal changes. In healthcare, reliability checks ensure diagnostic models continue to perform accurately as new patient data is introduced.
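Drift monitoring often begins with a simple distribution comparison. The sketch below computes the Population Stability Index (PSI) between a reference sample and a recent sample that have already been grouped into the same bins. The bin counts are hypothetical, and the 0.1 and 0.25 cut‑offs in the comments are widely used conventions rather than regulatory thresholds.

```python
import math


def psi(expected_counts, actual_counts, eps=1e-6):
    """Population Stability Index over pre-binned counts.

    PSI = sum((actual_share - expected_share) * ln(actual_share / expected_share))
    """
    exp_total = sum(expected_counts)
    act_total = sum(actual_counts)
    score = 0.0
    for expected, actual in zip(expected_counts, actual_counts):
        # Floor the shares at eps so empty bins do not cause log(0) or division by zero.
        p = max(expected / exp_total, eps)
        q = max(actual / act_total, eps)
        score += (q - p) * math.log(q / p)
    return score


if __name__ == "__main__":
    reference = [25, 25, 25, 25]  # hypothetical training-time bin counts
    recent = [10, 20, 30, 40]     # hypothetical counts from recent traffic
    value = psi(reference, recent)
    # Common convention: below 0.1 is stable, 0.1 to 0.25 warrants a look, above 0.25 is a major shift.
    print(f"PSI = {value:.3f}")
```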

Human‑in‑the‑loop oversight

Enterprises must embed human oversight into AI workflows. For example, HR departments require human reviewers to validate automated hiring decisions before offers are extended. Hospitals use human‑in‑the‑loop triage, where doctors review AI recommendations before patient prioritization is finalized. This oversight provides the accountability that regulators demand.
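In software terms, a human‑in‑the‑loop checkpoint can be as simple as a routing rule that decides which automated decisions may proceed and which must wait for a reviewer. The sketch below shows one such rule. The outcome labels, the confidence threshold, and the class and function names are all hypothetical, and real policies would be set by the governance team rather than hard‑coded.

```python
from dataclasses import dataclass

ADVERSE_OUTCOMES = {"reject"}  # hypothetical: outcomes that always need a reviewer
MIN_AUTO_CONFIDENCE = 0.90     # hypothetical: below this, a human must confirm


@dataclass(frozen=True)
class Decision:
    subject_id: str
    outcome: str       # e.g. "approve" or "reject"
    confidence: float  # model confidence in [0, 1]


def needs_human_review(decision: Decision) -> bool:
    """Route adverse or low-confidence decisions to a human reviewer."""
    return (
        decision.outcome in ADVERSE_OUTCOMES
        or decision.confidence < MIN_AUTO_CONFIDENCE
    )


if __name__ == "__main__":
    for d in (
        Decision("c-001", "approve", 0.97),
        Decision("c-002", "approve", 0.71),
        Decision("c-003", "reject", 0.99),
    ):
        route = "human review" if needs_human_review(d) else "auto"
        print(d.subject_id, d.outcome, route)
```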

Safety guardrails and red‑team testing

Trustworthy AI requires proactive stress testing. Tech companies now run red‑team exercises to simulate adversarial attacks on chatbots and recommendation systems, identifying vulnerabilities before they cause harm. In finance, safety guardrails are applied to prevent trading algorithms from making extreme, high‑risk moves during volatile markets.

Regulatory Forces Driving the XAI Compliance Era

Explainable and Trustworthy AI is no longer guided only by best practices or voluntary principles. By 2026, it is being hard‑wired into law, standards, and enforcement mechanisms across the globe. Enterprises can no longer rely on internal assurances of fairness or safety. Regulators, auditors, and industry bodies are setting explicit requirements for transparency, accountability, and risk management.

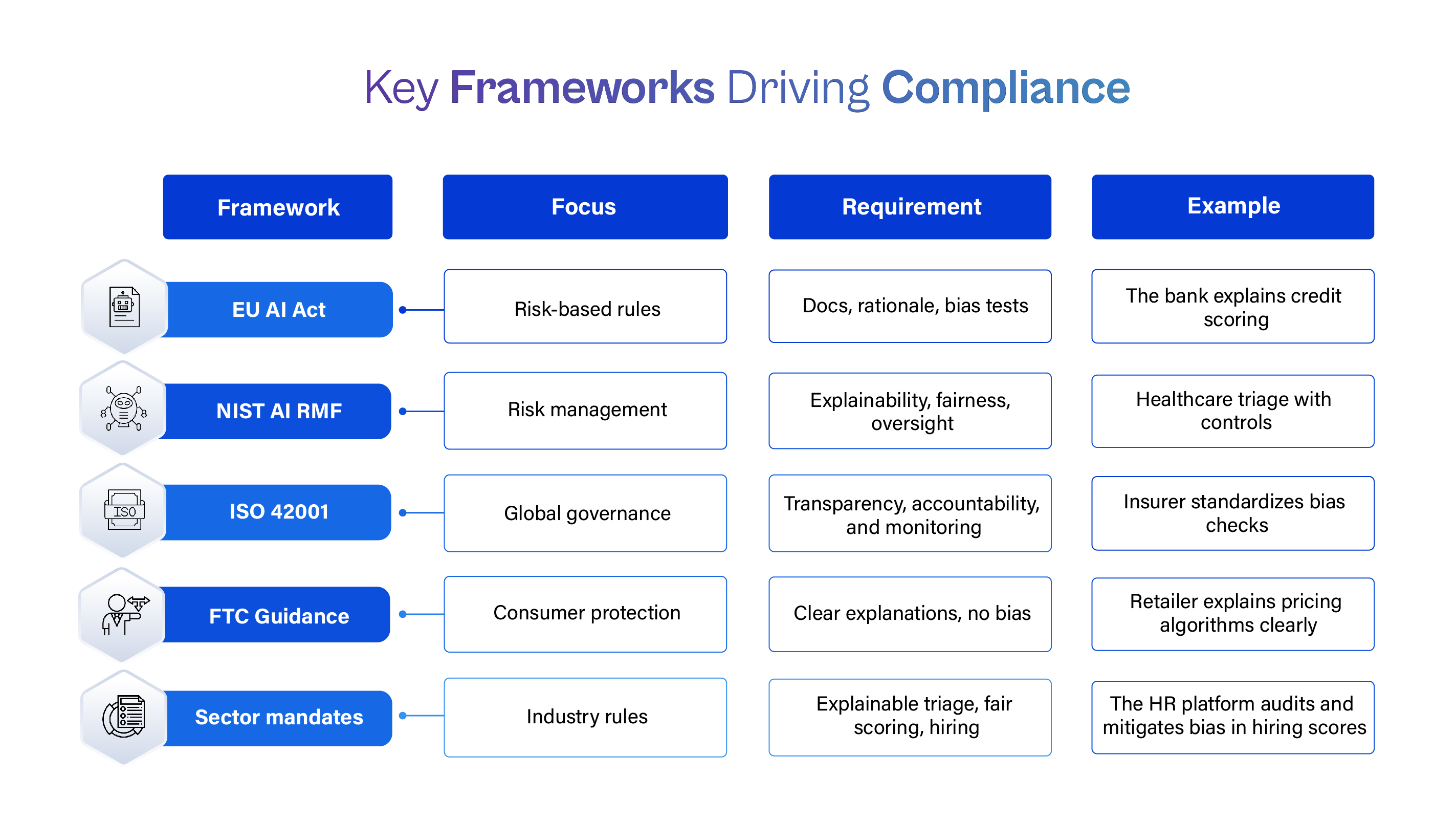

This convergence of frameworks, from the EU AI Act to NIST’s Risk Management Framework, ISO 42001, and FTC guidance, signals a new compliance era. Enterprises must align their AI systems with overlapping mandates that demand explainability, bias mitigation, and human oversight. Failure to comply will not only invite fines and lawsuits but also erode public trust and investor confidence.

Key Frameworks Driving Compliance

EU AI Act

The EU AI Act is the most comprehensive regulatory framework to date. It classifies AI systems by risk and imposes strict transparency and risk‑management mandates on high‑risk applications such as healthcare, finance, HR, and public safety. Enterprises must provide documentation, rationale outputs, and bias testing results to operate legally in the EU. For example, a bank offering credit scoring in Europe will need to show regulators how its model evaluates applicants, including which features drive approvals or denials.

NIST AI Risk Management Framework (RMF)

In the United States, the NIST AI RMF provides a structured, voluntary approach to managing AI risks. It treats explainability, fairness, and accountability as core characteristics of trustworthy AI. Enterprises adopting the RMF can demonstrate compliance readiness and reduce exposure to lawsuits and regulatory penalties. For instance, a healthcare provider using AI triage can align with the NIST AI RMF by documenting risk controls, rationale outputs, and human oversight checkpoints.

ISO/IEC 42001: AI Management Systems Standard

ISO/IEC 42001, often shortened to ISO 42001, establishes global best practices for AI governance. It specifies requirements for management systems that ensure transparency, accountability, and continuous monitoring of AI systems. Multinational organizations are already adopting it to harmonize compliance across regions. A global insurer, for example, can use ISO/IEC 42001 to standardize bias testing and documentation across its operations in Europe, Asia, and North America.

FTC guidance on automated decision‑making

The U.S. Federal Trade Commission has issued guidance warning enterprises against opaque automated decision‑making. The guidance signals that organizations should give consumers clear explanations and that discriminatory or deceptive practices can trigger enforcement under existing law. A retailer using AI for dynamic pricing should therefore be able to explain price differences to customers and show that its algorithms do not discriminate against protected groups.

Sector‑specific mandates

Beyond general frameworks, sector regulators are tightening rules. Healthcare regulators demand explainable triage systems that doctors can validate. Financial regulators require transparency in credit scoring and insurance pricing. Employment regulators mandate fairness in hiring and promotion algorithms. For example, an HR platform offering automated candidate screening must provide bias testing results and rationale outputs to prove compliance with employment law.

The Enterprise XAI Action Plan for 2026

By 2026, enterprises cannot treat explainability as an afterthought. It must be embedded into governance, workflows, and culture. Regulators, auditors, and customers expect evidence that AI systems are transparent, fair, and accountable. This requires a deliberate shift from reactive compliance to proactive governance.

The Enterprise XAI Action Plan provides a structured roadmap for organizations to operationalize explainability. It combines governance discipline, technical transparency, and cultural change. Each step is designed to help enterprises withstand regulatory scrutiny, build customer trust, and ensure that AI systems remain resilient in dynamic environments.

Build an internal AI governance council

Establish a cross‑functional body that includes compliance officers, legal teams, data scientists, and business leaders. This council sets policies, reviews high‑risk AI deployments, and ensures accountability across the enterprise. For example, leading banks have created AI governance boards that meet quarterly to review credit scoring models, bias reports, and audit readiness. These councils also monitor evolving regulations, align enterprise practices with global standards, and proactively address ethical concerns before audits escalate.

Create model cards and decision logs for all AI systems

Document every AI model with its purpose, inputs, outputs, and limitations. Maintain decision logs that record how automated systems reach conclusions. These artifacts are essential for audits, legal defensibility, and stakeholder trust. In healthcare, hospitals are already using model cards to explain diagnostic AI outputs to doctors and regulators, ensuring transparency in patient triage. Enterprises also leverage these artifacts to train staff, demonstrate accountability to investors, and strengthen customer confidence in automated decisions.
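The sketch below shows one minimal shape these two artifacts could take: a model card capturing purpose, inputs, outputs, and limitations, and an append‑only decision log that ties each outcome to a model version and a rationale. The field names, the example model, and the log format are assumptions for illustration; published model card templates are considerably richer.

```python
import json
from dataclasses import dataclass, asdict, field
from datetime import datetime, timezone


@dataclass
class ModelCard:
    name: str
    version: str
    purpose: str
    inputs: list
    outputs: list
    limitations: list = field(default_factory=list)


def log_decision(log, card, subject_id, outcome, rationale):
    """Append one JSON line describing an automated decision to the log."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model": f"{card.name}:{card.version}",
        "subject_id": subject_id,
        "outcome": outcome,
        "rationale": rationale,
    }
    log.append(json.dumps(entry, sort_keys=True))
    return entry


if __name__ == "__main__":
    # Hypothetical triage model and decision.
    card = ModelCard(
        name="triage-risk",
        version="1.2.0",
        purpose="Prioritize emergency department patients for clinician review",
        inputs=["age", "heart_rate", "reported_symptoms"],
        outputs=["priority_level"],
        limitations=["Not validated for pediatric patients"],
    )
    decision_log = []
    log_decision(decision_log, card, "patient-0042", "urgent",
                 ["heart_rate above threshold", "chest pain reported"])
    print(json.dumps(asdict(card), indent=2))
    print(decision_log[0])
```

Recording the model version in every log entry is the design choice that matters most here: it lets an auditor trace a specific decision back to the exact documented model that produced it.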

Use XAI‑enabled model development tools

Adopt platforms that provide built‑in interpretability, bias detection, and rationale outputs. These tools reduce the burden on developers and ensure compliance is baked into the development lifecycle. Tech companies are increasingly integrating explainability libraries into their machine learning pipelines, allowing developers to generate rationale outputs automatically. Such tools also streamline documentation, simplify regulator engagement, and empower enterprises to scale AI responsibly across diverse business functions worldwide.

Deploy fairness, bias, and interpretability dashboards

Provide executives and auditors with real‑time visibility into model performance. Dashboards highlight bias risks, feature importance, and drift, making oversight proactive rather than reactive. For instance, HR platforms now offer fairness dashboards that show how candidate selection algorithms perform across demographics, helping enterprises prove compliance with employment law. These dashboards also support continuous monitoring, enable corrective interventions, and provide leadership with actionable insights to maintain trust and regulatory alignment.

Implement mandatory review cycles and human oversight policies

Require periodic reviews of all AI systems, especially those in high‑risk domains. Embed human‑in‑the‑loop checkpoints where critical decisions must be validated by human reviewers. In finance, regulators increasingly expect human validation of loan denials, ensuring that customers receive explanations backed by human accountability. Review cycles also uncover hidden risks, strengthen governance practices, and reassure stakeholders that enterprise AI remains transparent, fair, and defensible under scrutiny.

Train non‑technical stakeholders to understand AI rationale

Compliance is not just technical. Legal teams, HR leaders, and customer service managers must be able to interpret AI outputs. Training programs build organizational literacy and ensure accountability across functions. For example, insurers are training claims officers to read and explain AI‑generated risk assessments to customers, bridging the gap between technical outputs and human communication. These programs also foster cross‑functional collaboration, empower employees to challenge bias, and reinforce enterprise culture centered on transparency and fairness.

The Enterprise XAI Action Plan is more than a compliance checklist. It is a blueprint for resilience, trust, and long‑term competitiveness. By embedding governance councils, model documentation, fairness dashboards, and human oversight into daily operations, enterprises can move beyond regulatory survival toward strategic advantage. Organizations that act now will not only meet the demands of auditors and regulators in 2026 but also earn the confidence of customers, investors, and employees. In a market where trust is the new currency, explainability becomes the foundation for sustainable growth.

Conclusion

2026 marks the turning point where explainability and trustworthiness shift from optional enhancements to mandatory requirements. Regulators, auditors, and customers are united in demanding transparency, fairness, and accountability from AI systems. Enterprises that continue to rely on opaque models risk fines, lawsuits, reputational damage, and operational disruption.

The XAI Reckoning is not simply about compliance. It is about building resilience, earning trust, and securing competitive advantage in a market where confidence in AI is fragile. Organizations that embed explainability into governance, workflows, and culture will not only survive regulatory scrutiny but also thrive by differentiating themselves as trustworthy leaders.

The path forward is clear: enterprises must act now to operationalize explainability, align with global frameworks, and prove that their AI systems are transparent, fair, and defensible. In 2026, trust becomes the new currency of enterprise success, and explainable AI is the foundation on which it is built.

As we approach 2026, explainable and trustworthy AI is no longer optional; it is a regulatory requirement. Cogent Infotech can help your organization navigate the evolving landscape of AI transparency, ensuring your systems are compliant, fair, and auditable. From model documentation to bias testing and governance frameworks, we provide the expertise and tools to operationalize explainability across your enterprise.

Contact Cogent Infotech today to build trust and resilience into your AI systems for 2026 and beyond.
