The Shadow Productivity Economy: When Employees Use AI in Secret

Introduction

A quiet revolution is underway in workplaces globally, as many employees secretly use artificial intelligence tools to ease their workloads, meet deadlines, and improve their work quality. This phenomenon, known as the Shadow Productivity Economy, involves the use of unapproved AI applications outside official company policies. Workers use generative AI for tasks like drafting memos, summarizing reports, and coding, often without their managers knowing. Surveys show that between 30% and 57% of workers hide their AI use, mainly because organizations lack clear guidance, approved tools, or proper training. Many do this out of necessity rather than intent to deceive.

This situation creates a paradox: employees see AI as essential for productivity, while leaders worry about risks such as compliance issues, data leaks, and reputational harm. Some companies respond by installing surveillance software to monitor AI use, but this often backfires, damaging trust and pushing AI activities deeper into secrecy. The Shadow Productivity Economy highlights gaps in governance and unclear policies but also signals a huge opportunity. If companies can establish safe frameworks and clear guidelines, they could harness this hidden energy to boost innovation while managing risks effectively.

The Rise of Shadow AI

Concealment and Fear

A recent KPMG study, reported by Business Insider, found that 57% of workers worldwide admit to submitting AI-generated work as their own without telling their managers, and 30% actively hide their AI use. AI use at work is common, but many people feel the need to keep it secret. The main reason is a lack of clear guidance: fewer than half have received formal training on using AI responsibly. So employees use AI where they feel safe and stay quiet where they don't. Many also worry their bosses might see AI use as cheating or as a sign they're less skilled. That worry makes them even more secretive, creating a cycle in which transparency is lost and responsible AI use goes unrecognized.

Shadow AI by the Numbers

A report from Software AG’s newscenter shows that the use of unapproved AI tools is far more common than many might think, with about half of all workers admitting to using shadow AI. Among software developers, this number is even higher, with more than 50% incorporating these tools into their daily workflows. This widespread use highlights that shadow AI isn’t just the work of a few individuals but is deeply embedded across different roles and departments within organizations.

This secret use often happens in companies that haven't yet rolled out official AI platforms. Employees turn to free or low-cost generative AI tools because they are quick, easy to use, and help meet tight deadlines in fast-moving work environments. While these tools boost productivity, bypassing IT oversight creates risks that organizations may not be fully prepared to handle, such as security breaches, compliance problems, and leaks of sensitive information.

The Emotional Drivers: Insights from Ivanti

The secrecy around AI use isn't just about convenience; it's deeply tied to fear. Ivanti's research highlights that 32% of employees purposely hide their AI use because they worry managers might penalize them or see them as replaceable, while about 30% fear losing their jobs if AI becomes more common. Shadow AI, in other words, isn't just a tech issue but a human one: employees are trying to balance using helpful tools with meeting unclear organizational expectations. The fear of judgment, combined with a lack of clear guidance, creates stress that pushes many to keep their AI use secret.

This hidden fear also impacts teamwork and knowledge sharing, as people avoid openly talking about AI or sharing best practices, which slows down innovation. Managers are left in the dark, unable to support their teams or properly invest in AI resources. To change this, organizations need to understand and address these emotional concerns so they can turn secretive AI use into a transparent, well-governed approach that builds trust, boosts morale, and maximizes productivity.

A Shadow Market in the Making

These surveys reveal a clear trend: nearly half of the workforce is engaged in a hidden AI economy that operates outside formal policies but delivers real results. Employees are saving time, generating ideas, drafting content, and analyzing data to boost productivity. This underground use thrives because organizational structures and official tools haven’t kept pace with how quickly AI has been adopted on the ground.

This hidden AI activity also points to a larger cultural disconnect. Workers are eager to use innovative tools that help them, even without formal approval. The real challenge for leaders is to see this not as a threat but as a valuable opportunity. Companies must decide whether to continue trying to suppress this underground economy through bans and monitoring or to embrace it, create clear guidelines, and turn it into a strategic advantage that drives measurable business success.

The Monitoring Trap: Why Bossware Fuels the Shadow Economy

When organizations feel uneasy about shadow AI, many turn to digital monitoring tools, often called bossware, to keep an eye on employees. These tools track everything from login times and browsing habits to keystrokes and even screen activity or emails. The goal is to give managers peace of mind that work is being done properly and with the right tools.

But in reality, this kind of surveillance often backfires. As IT Pro points out, employees quickly notice they're being watched, which can kill trust and lower morale. Instead of encouraging honesty, monitoring drives workers to hide what they're really doing: staying logged in just for show, using personal devices to explore AI, or finding ways to avoid detection. The result is a cycle in which tighter monitoring leads to more secrecy, which then demands even more monitoring. To truly address shadow AI, companies need to focus on clear governance, proper training, and building trust, not just surveillance.

Surveillance in Action

According to IT Pro, many companies are now using monitoring software to keep an eye on remote and hybrid work. Some tools even try to spot whether AI was used to write text, generate code, or create designs, especially if those tools haven’t been officially approved.

But for employees, this kind of tracking can feel invasive. Instead of building trust, it often leads to quiet resistance. When people feel watched, they’re less likely to be open about how they work, and more likely to hide their use of helpful tools. In the end, too much surveillance doesn’t create clarity. It creates secrecy.

The Morale Erosion Cycle

Heavy monitoring can take a toll on workplace morale. While employees might follow the rules on the surface, they often feel less connected and less motivated. When people feel constantly watched, it chips away at their sense of autonomy, and that can lead to lower job satisfaction and even higher turnover.

In the case of shadow AI, this kind of surveillance can actually make things worse. The more employees feel scrutinized, the more likely they are to sidestep official tools and policies. Instead of curbing unsanctioned AI use, bossware may push it further underground, onto personal devices or into off-the-record workflows.

Fuel on the Fire

Strict monitoring doesn't always reduce shadow AI use; it can unintentionally encourage it. When employees feel closely watched, it signals mistrust and discourages openness. Instead of asking questions or sharing how they use AI, they may turn to personal devices, unapproved tools, or private channels to get work done. This kind of workaround behavior can lead to fragmented workflows, less collaboration, and missed opportunities to learn from each other.

Organizations often find themselves in a loop: they introduce monitoring to control AI use, but the monitoring itself drives more secrecy. The more intrusive the oversight, the more employees retreat into off-the-record practices, prompting even stricter controls. Breaking that cycle means shifting the focus from enforcement to education, trust-building, and clear governance. When employees feel supported, they’re far more likely to use AI in ways that are safe, productive, and easy to measure.

The Risk Landscape: Heat-Map and Redaction Patterns

The Shadow Productivity Economy isn’t driven by bad intentions, but it does come with real risks. Many employees simply aren’t aware of what’s considered sensitive, and without clear guidance, they might accidentally share confidential information with public AI tools.

That's why it helps to offer a simple visual guide: a risk heat-map. It can show which types of data are safe to use with public AI platforms, which should be redacted, and which should never be shared. When people know where the boundaries are, they're much more likely to stay within them.
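A heat-map like this can also be encoded as a small lookup table that a pre-submission checklist or internal tool might consult. The sketch below is only an illustration: the three levels (`SAFE`, `REDACT`, `NEVER`), the data categories, and the `handling_for` helper are hypothetical, and real categories should come from the organization's own data-classification policy.

```python
from enum import Enum


class Handling(Enum):
    """Hypothetical handling levels for a three-color risk heat-map."""
    SAFE = "safe to use with public AI tools"
    REDACT = "redact before submission"
    NEVER = "never share with public AI tools"


# Hypothetical heat-map: data category -> handling level.
# These categories are illustrative, not a standard taxonomy.
HEAT_MAP = {
    "public_marketing_copy": Handling.SAFE,
    "published_documentation": Handling.SAFE,
    "internal_meeting_notes": Handling.REDACT,
    "project_codenames": Handling.REDACT,
    "customer_pii": Handling.NEVER,
    "proprietary_source_code": Handling.NEVER,
    "financial_forecasts": Handling.NEVER,
}

# Severity order, least to most restrictive.
_ORDER = [Handling.SAFE, Handling.REDACT, Handling.NEVER]


def handling_for(categories):
    """Return the strictest handling level among the categories in a document.

    Unknown categories default to NEVER, so anything unclassified is
    treated conservatively rather than waved through.
    """
    levels = [HEAT_MAP.get(c, Handling.NEVER) for c in categories]
    if not levels:
        # Nothing sensitive was tagged in the document.
        return Handling.SAFE
    return max(levels, key=_ORDER.index)


if __name__ == "__main__":
    doc = ["internal_meeting_notes", "public_marketing_copy"]
    print(handling_for(doc).value)
```

Taking the strictest level across everything in a document mirrors how people tend to read a heat-map: one red cell is enough to stop a submission.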

- Placeholder Substitution: Replace names, project codes, customer identifiers, or other sensitive details with generic placeholders. This allows the AI to process content without exposing confidential or proprietary information, while still preserving the context needed for meaningful outputs.

- Chunking: Share only the relevant excerpt, not the entire dataset. Breaking information into smaller, context-specific segments minimizes the risk of accidental disclosure while still allowing the AI to analyze or summarize content effectively.

- Anonymization: Strip out sensitive identifiers before submission, including client names, internal project codes, financial figures, or personal details. Advanced anonymization may also involve replacing identifiable patterns with randomized or generic data points.
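The three patterns above can be sketched in a few lines of Python. This is a minimal illustration, not a production redaction tool: the function names are hypothetical, the regular expressions only catch email addresses and dollar amounts, and real PII detection needs far broader coverage. The placeholder mapping is meant to stay on the employee's machine so the AI's answer can be re-personalized locally.

```python
import re

# Illustrative patterns only; production PII detection needs much broader coverage.
ANONYMIZE_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "MONEY": re.compile(r"\$\d+(?:,\d{3})*(?:\.\d+)?"),
}


def substitute_placeholders(text, sensitive_terms):
    """Placeholder substitution: swap known sensitive terms for generic tokens.

    Returns the redacted text plus a placeholder -> term mapping that
    never leaves the employee's machine.
    """
    mapping = {}
    counter = 0
    # Replace longer terms first so "Acme Corp" is handled before "Acme".
    for term in sorted(sensitive_terms, key=len, reverse=True):
        pattern = re.compile(re.escape(term), re.IGNORECASE)
        if pattern.search(text):
            counter += 1
            placeholder = f"[ENTITY_{counter}]"
            text = pattern.sub(placeholder, text)
            mapping[placeholder] = term
    return text, mapping


def restore_placeholders(text, mapping):
    """Put the original terms back into the AI's response, locally."""
    for placeholder, term in mapping.items():
        text = text.replace(placeholder, term)
    return text


def anonymize(text):
    """Anonymization: replace pattern-shaped identifiers with generic labels."""
    for label, pattern in ANONYMIZE_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def relevant_chunks(text, keywords, max_chars=500):
    """Chunking: keep only paragraphs that mention a keyword, truncated to max_chars."""
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    hits = [p for p in paragraphs if any(k.lower() in p.lower() for k in keywords)]
    return [p[:max_chars] for p in hits]
```

For example, `substitute_placeholders("Acme Corp asked about Project Falcon.", ["Acme Corp", "Project Falcon"])` sends only `[ENTITY_2] asked about [ENTITY_1].` to the AI tool, and `restore_placeholders` swaps the real names back into whatever comes back.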

When redaction techniques are built into everyday workflows, it becomes much easier to use AI tools safely. Employees can still tap into the speed and creativity of generative AI for writing, analysis, brainstorming, and more without putting sensitive data at risk. It's a practical way to support productivity and collaboration while staying aligned with security and compliance goals. With the right guardrails in place, teams can work smarter and faster, with confidence.

From Secret Use to Structured Productivity: Behavioral Change

Policies are a good starting point, but they're not enough on their own. For AI to be used openly and responsibly, employees need to feel safe, supported, and motivated. That kind of shift doesn't come from strict rules or fear of getting it wrong; it comes from building trust, offering clear guidance, and creating a shared sense of responsibility. When people feel confident and included, they're far more likely to use AI in ways that benefit everyone.

- Acknowledge Reality: Leaders should recognize that employees are already using AI. Framing policies as support rather than punishment reduces fear and signals that innovation is valued, not penalized. By acknowledging existing usage, organizations can guide behavior constructively, fostering trust and encouraging employees to explore AI tools with confidence and accountability.

- Psychological Safety: Create sandbox environments where employees can experiment openly without risk of sanction. These safe zones allow learning without fear of error, gradually normalizing responsible use. When employees feel psychologically safe, they’re more likely to share insights, ask questions, and collaborate, accelerating collective learning and building a culture of ethical innovation.

- Role-Based Training: Offer AI training tailored to each role (marketers, developers, HR specialists) so each group knows what's safe and effective. This ensures governance feels enabling rather than restrictive. Customized training empowers employees to use AI confidently within their domain, aligning tools with job-specific goals and reducing misuse through clear, relevant, role-specific guidance.

- Positive Incentives: Celebrate teams that use AI responsibly, highlighting productivity gains achieved within governance. Recognition reinforces desired behaviors far more effectively than monitoring alone. Publicly sharing success stories, offering rewards, or showcasing innovative use cases builds momentum, motivates others to follow suit, and positions responsible AI adoption as a source of pride.

- Progressive Governance: Use tiered permissions instead of blanket bans, allowing flexibility while enforcing boundaries. This approach accommodates varying levels of expertise and risk, enabling experimentation where appropriate while protecting sensitive areas. Progressive governance fosters innovation by balancing freedom and control, ensuring AI use evolves safely without stifling creativity or agility.

Behavioral research suggests that employees are more likely to comply when they view policies as fair and revisable. The key is aligning trust, training, and incentives, which transforms secrecy into collaboration and shadow productivity into transparent, governed innovation. When policies feel participatory rather than imposed, employees engage more deeply, fostering a culture of openness, adaptability, and shared accountability.

Bringing Shadow AI Into the Open: A Supportive Framework for BYO-AI

Employees are already using AI to get work done, and that’s not a bad thing. The goal isn’t to shut it down, but to guide it. With the right policies in place, organizations can turn quiet experimentation into safe, structured innovation. A Bring Your Own AI (BYO-AI) governance program helps teams feel supported, not restricted, and ensures AI use aligns with company values and compliance needs.

Core Elements of a BYO-AI Governance Program

- Scope: Define who is covered (employees, contractors, vendors) and clarify whether the policy applies across devices, including personal laptops or mobile phones used for work.

- Definitions: Clarify “approved AI” vs. “shadow AI,” ensuring workers understand that using public tools without clearance introduces both compliance and security risks.

- Role-Based Permissions: Assign tiers of access (e.g., Tier 1: summarization only, Tier 2: drafting, Tier 3: coding) so privileges scale with training, responsibility, and proven safe practices.

- Vendor Whitelist: Maintain a list of approved AI tools and regularly update it as new, secure platforms are vetted, reducing the temptation for employees to rely on risky alternatives.

- Data Classification: Spell out which data types can and cannot be shared with AI, linking back to organizational confidentiality levels and regulatory requirements.

- Redaction Guidance: Provide specific patterns for removing sensitive information before submission (placeholder, anonymization, and truncation examples) so safe use becomes intuitive.

- Oversight: Define who audits usage and how often, balancing control with a culture of trust. Transparency in oversight helps avoid the secrecy that fuels shadow AI.

- Sanctions + Support: Outline consequences of misuse but balance them with remediation and additional training, signaling that the priority is learning and improvement, not punishment.

- Feedback Loop: Allow employees to request new tools or exceptions, encouraging collaboration rather than workarounds and ensuring governance evolves alongside real-world needs.
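To make these elements concrete, several of them (role-based tiers, the vendor whitelist, and data classification) can be expressed as a small policy check. The sketch below is hypothetical throughout: the tier-to-task mapping follows the example tiers in the list, while the tool names, data classes, and the `evaluate` function are placeholders an organization would replace with its own.

```python
from dataclasses import dataclass, field

# Hypothetical tier ladder based on the example tiers above:
# Tier 1 = summarization only, Tier 2 adds drafting, Tier 3 adds coding.
TIER_TASKS = {
    1: {"summarize"},
    2: {"summarize", "draft"},
    3: {"summarize", "draft", "code"},
}

# Hypothetical vendor whitelist and shareable data classes; replace with vetted entries.
APPROVED_TOOLS = {"internal-assistant", "vendor-x-enterprise"}
SHAREABLE_DATA = {"public", "internal-redacted"}


@dataclass
class Request:
    user_tier: int
    tool: str
    task: str
    data_class: str


@dataclass
class Decision:
    allowed: bool
    reasons: list = field(default_factory=list)


def evaluate(req: Request) -> Decision:
    """Check a proposed AI use against tiers, whitelist, and data classes.

    Every failed check is returned as a reason, so the employee learns what
    to fix or which exception to request instead of getting a bare "no".
    """
    reasons = []
    if req.tool not in APPROVED_TOOLS:
        reasons.append(f"tool '{req.tool}' is not on the approved list; you can request a review")
    if req.task not in TIER_TASKS.get(req.user_tier, set()):
        reasons.append(f"task '{req.task}' needs a higher tier than {req.user_tier}")
    if req.data_class not in SHAREABLE_DATA:
        reasons.append(f"data class '{req.data_class}' may not be shared with AI tools")
    return Decision(allowed=not reasons, reasons=reasons)
```

Returning reasons rather than a simple yes or no is a deliberate choice: it supports the "Sanctions + Support" and "Feedback Loop" elements by pointing people toward training or an exception request instead of a workaround.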

Such a policy shifts AI from being a forbidden shortcut to a structured and officially recognized asset, turning underground behaviors into visible, governable practices.

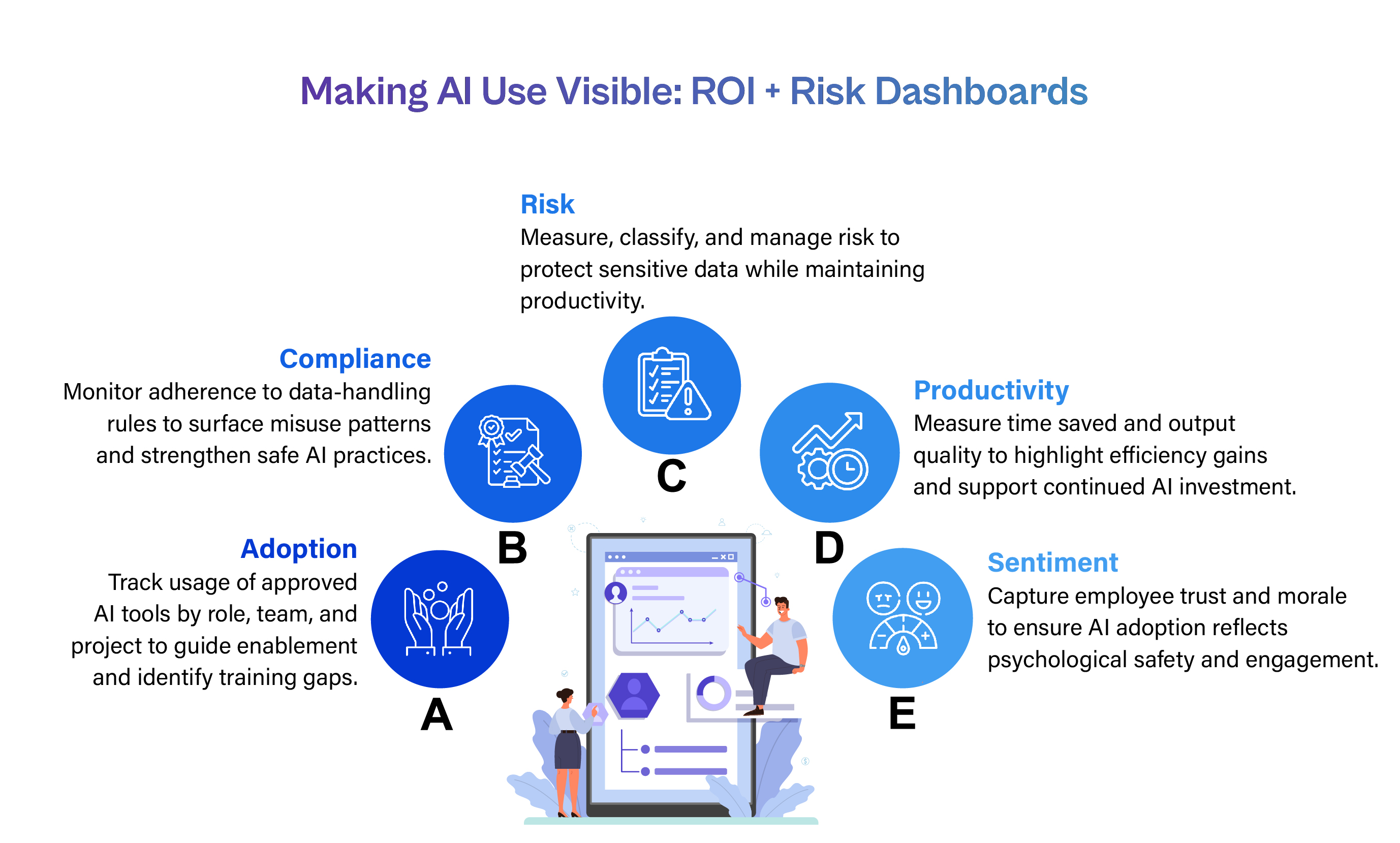

Making AI Use Visible: ROI + Risk Dashboards

Even the most thoughtful AI policy won't go far without visibility. To truly understand how AI is being used across the organization, it helps to build dashboards that bring everything together: productivity data, compliance checks, and even employee feedback.

These dashboards aren't just for tracking numbers. They offer a fuller picture of how AI is shaping work: which tools are being used, how teams are engaging with them, and where support or guardrails might be needed. By combining data from approved tool logs, policy audits, and sentiment surveys, leaders can see both the measurable impact and the cultural ripple effects.

It’s a practical way to guide decisions, spot trends, and ensure AI adoption stays aligned with company values. When visibility improves, so does trust, and that’s what turns quiet experimentation into confident, responsible innovation.

Metrics to Track

Adoption

Measure the percentage of employees using approved AI tools, broken down by department, role, or project type. Tracking adoption trends over time helps identify pockets of high engagement and areas where additional training or governance may be required. These insights can guide targeted enablement efforts, ensuring AI support reaches the teams that need it most and helping leaders understand where adoption is thriving or lagging.
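As a rough illustration, adoption can be computed by joining an HR roster with approved-tool usage logs. The function name and input shapes below are hypothetical; the sketch simply counts, per department, how many rostered employees appear in the logs for a given period.

```python
from collections import defaultdict


def adoption_by_department(employees, active_users):
    """Percentage of employees per department who used an approved AI tool.

    employees: iterable of (employee_id, department) pairs from the roster
    active_users: set of employee_ids seen in approved-tool logs for the period
    """
    totals = defaultdict(int)
    adopters = defaultdict(int)
    for emp_id, dept in employees:
        totals[dept] += 1
        if emp_id in active_users:
            adopters[dept] += 1
    # Round to one decimal place for display on a dashboard.
    return {dept: round(100 * adopters[dept] / totals[dept], 1) for dept in totals}
```

Running this for each reporting period gives the trend line; a department stuck near zero is a candidate for targeted training rather than a compliance problem.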

Compliance

Track the percentage of inputs meeting redaction and data-handling requirements. Highlighting patterns of misuse, near-misses, or repeated violations enables proactive interventions and continuous improvement of policies. Dashboards can surface common mistakes, flag areas where guidance is unclear, and help teams course-correct early, before small errors become larger risks. Over time, this builds a stronger culture of safe, confident AI use.
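A compliance rate can be sketched the same way, assuming each submission to an approved tool is logged with the team and whether it passed an automated redaction check. Both the record shape and the 95% threshold below are hypothetical; the point is to surface teams where guidance may be unclear, not to single out individuals.

```python
from collections import Counter


def compliance_report(submissions, threshold=95.0):
    """Compliance rate per team, plus teams falling below the threshold.

    submissions: iterable of (team, passed_redaction_check) tuples
    threshold: hypothetical minimum acceptable rate, in percent
    """
    total = Counter()
    passed = Counter()
    for team, ok in submissions:
        total[team] += 1
        if ok:
            passed[team] += 1
    rates = {team: 100 * passed[team] / total[team] for team in total}
    # Flag teams that may need clearer guidance or a refresher session.
    flagged = sorted(team for team, rate in rates.items() if rate < threshold)
    return rates, flagged
```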

Risk

Quantify the number and severity of policy violations, including exposure of sensitive data, unapproved tool usage, or unauthorized sharing. Classifying risk by severity helps prioritize remediation efforts and informs leadership decision-making. Dashboards can also show which roles or workflows carry higher risk, allowing for smarter governance and tailored safeguards that protect sensitive information without slowing down productivity.
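One simple way to classify risk by severity is a weighted score per workflow, so a single high-severity data exposure outweighs several minor slips. The severity weights and workflow names here are hypothetical and would need to be calibrated against the organization's own risk framework.

```python
# Hypothetical severity weights; calibrate against your own risk framework.
SEVERITY_WEIGHTS = {"low": 1, "medium": 3, "high": 10}


def risk_scores(violations):
    """Rank workflows by weighted violation score, highest risk first.

    violations: iterable of (workflow, severity) pairs, where severity
    is one of the keys in SEVERITY_WEIGHTS.
    """
    scores = {}
    for workflow, severity in violations:
        scores[workflow] = scores.get(workflow, 0) + SEVERITY_WEIGHTS[severity]
    # Sort by descending score, then alphabetically for stable output.
    return sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
```

With these weights, one high and one low violation in contract review (score 11) ranks above three medium violations in email drafting (score 9), which points remediation at the workflow handling the most sensitive data.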

Productivity

Estimate time saved, improvements in output quality, and reductions in repetitive tasks attributable to AI usage. Combining these measures with employee feedback provides insights into the true efficiency gains realized by AI adoption. Dashboards can highlight where AI is freeing up time for strategic work, improving accuracy, or helping teams move faster, making the case for continued investment and thoughtful expansion.
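Time saved is necessarily an estimate. A common approach is to compare a baseline duration for a task with its AI-assisted duration and multiply by volume. In the sketch below, the field names are hypothetical, and the baseline and assisted minutes are assumed to come from sampled timings or employee self-reports rather than precise measurement.

```python
def estimated_hours_saved(task_log):
    """Estimate total hours saved across task types.

    task_log: list of dicts with hypothetical keys
      'baseline_min' - typical minutes without AI
      'assisted_min' - typical minutes with an approved AI tool
      'count'        - how many times the task was done in the period
    Negative savings (AI made a task slower) are kept, so they reduce
    the total instead of being hidden.
    """
    minutes = sum((t["baseline_min"] - t["assisted_min"]) * t["count"] for t in task_log)
    return minutes / 60
```

For instance, twelve meeting summaries that drop from 30 to 10 minutes plus four first drafts that drop from 90 to 45 minutes add up to 420 minutes, or 7 hours, for the period.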

Sentiment

Incorporate surveys and qualitative assessments of employee trust, morale, and comfort with AI use. Understanding the human side of AI adoption ensures dashboards reflect not only output but also engagement and psychological safety. When employees feel supported and heard, they’re more likely to use AI tools responsibly and creatively. Sentiment data helps leaders spot concerns early and build a culture of openness and trust.
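The quantitative part of sentiment tracking can be as simple as averaging survey scores per team and flagging low averages for follow-up. This sketch assumes a hypothetical 1 to 5 scale (for example, "I feel comfortable telling my manager how I use AI") and a hypothetical alert threshold; qualitative comments still need a human read.

```python
from statistics import mean


def sentiment_by_team(responses, alert_below=3.0):
    """Average survey score per team, plus teams below the alert threshold.

    responses: iterable of (team, score) pairs on a 1-5 scale
    alert_below: hypothetical threshold that triggers a follow-up conversation
    """
    by_team = {}
    for team, score in responses:
        if not 1 <= score <= 5:
            raise ValueError(f"score {score} is outside the 1-5 scale")
        by_team.setdefault(team, []).append(score)
    averages = {team: round(mean(scores), 2) for team, scores in by_team.items()}
    alerts = sorted(team for team, avg in averages.items() if avg < alert_below)
    return averages, alerts
```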

Dashboards help organizations see where AI is driving results and where support is needed. By connecting usage data with business outcomes and employee feedback, leaders can track ROI, spot risks, and recognize responsible AI use. It’s a smart way to turn scattered data into clear, actionable insights.

Conclusion: Turning Quiet AI Use Into Smart Strategy

Shadow AI isn't a fringe issue; it's already part of how many employees work. Studies show that nearly half the workforce is using AI tools quietly, often without guidance. And while monitoring or bans might seem like a fix, they often push this behavior further underground, creating more confusion and less trust.

The better path is to bring shadow AI into the open. With clear BYO-AI policies, role-based training, smart redaction practices, and dashboards that track both impact and risk, organizations can turn quiet experimentation into safe, supported innovation. These steps don't just reduce risk; they build trust, boost morale, and help teams use AI with confidence.

Ultimately, shadow AI isn't just a challenge; it's a signal. It shows where employees are trying to work smarter, faster, and more creatively. By embracing it thoughtfully, leaders can shift from chasing hidden activity to shaping a workplace where people and technology grow together with clarity, accountability, and shared success.

Ready to Turn Shadow AI Into a Strategic Advantage?

At Cogent Infotech, we help organizations move beyond hidden AI usage, unlocking safe, transparent, and high-impact innovation through responsible AI frameworks, training, and governance.

Let’s transform quiet experimentation into measurable success.

Whether you’re seeking custom AI policy design, role-based enablement, or productivity insights through AI dashboards, we’re here to guide your journey.

Book a consultation with Cogent Infotech and build an AI-ready workforce rooted in trust, compliance, and innovation.

Start the conversation.
