The Rise of XAI: How Explainable AI is Becoming a Mandate, Not a Feature

Introduction

Artificial intelligence has rapidly become an essential part of modern business infrastructure, shaping decision-making processes that impact customer experiences, organisational performance, and regulatory outcomes. Its presence now extends across high-stakes functions such as financial approvals, talent evaluation, healthcare diagnostics, and risk classification.

With this growing influence comes heightened scrutiny. Businesses, regulators, and users alike are seeking greater visibility into how AI systems arrive at their conclusions. This demand for understanding has placed explainability at the centre of responsible AI deployment.

Stakeholders no longer evaluate AI systems solely on speed, accuracy, or efficiency; they now expect transparency, accountability, and visibility into the reasoning behind automated decisions.

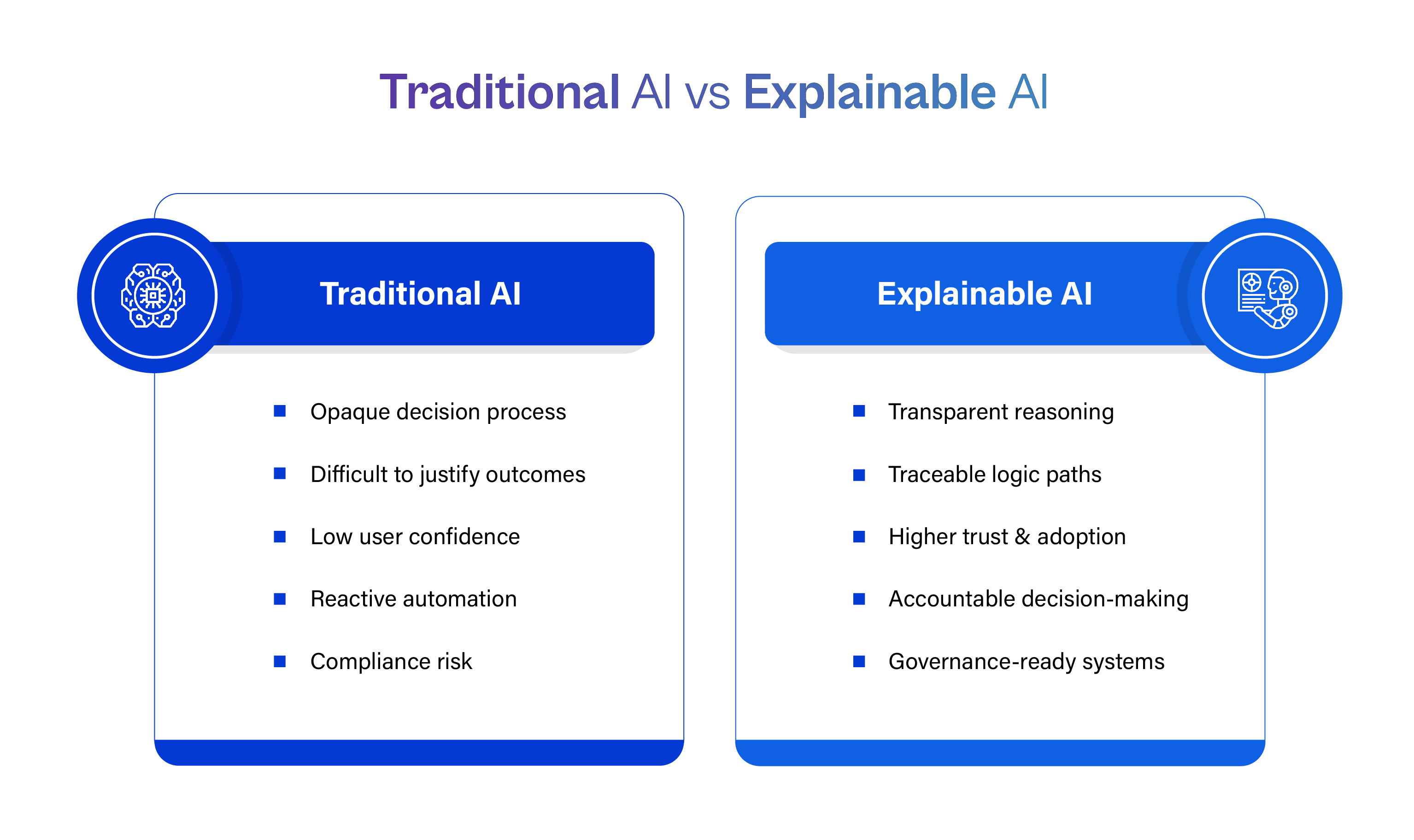

This evolution has brought Explainable Artificial Intelligence (XAI) into sharp focus. The increasing complexity of modern AI models has made their internal logic difficult to interpret, creating challenges around trust, risk, fairness, and regulatory compliance. In response, global governance bodies have formalised transparency as a standard requirement.

Regulations and governance frameworks now position explainability as a defining characteristic of trustworthy AI, rather than an optional enhancement. Public sentiment further reinforces this shift. Consumer trust in AI-generated outputs remains fragile, particularly in automated judgment and content summarisation. Market research indicates that a significant portion of users remains sceptical of AI-driven results, primarily due to a lack of clarity about how those results are produced. This erosion of confidence has made explainability essential not just for compliance, but for sustainable adoption and long-term business credibility.

This blog explores how explainable AI has evolved from a technical add-on into an operational necessity. It examines the forces driving this transformation, the technologies that enable XAI, the sectors where explainability is already indispensable, and the strategic frameworks organisations must adopt to embed transparency at scale.

Why Explainable AI Is No Longer Optional

The black-box dilemma

Highly advanced AI systems often rely on complex architectures capable of processing massive volumes of data and identifying patterns with remarkable precision. However, the very complexity that enables their power also obscures their decision-making pathways. When organisations deploy systems they cannot interpret, they introduce systemic vulnerability.

From a regulatory standpoint, opaque systems increase the risk of non-compliance, particularly in sectors subject to fairness and accountability requirements. Legally, they limit an organisation's ability to defend automated decisions. Operationally, they reduce internal confidence and slow response to anomalies or model failures. Reputationally, unexplained AI outcomes erode customer trust and weaken brand credibility.

Explainability, therefore, acts as a stabilising mechanism. It converts AI from a mysterious authority into a transparent tool that supports informed human oversight. This ability to trace, justify, and validate decisions underpins responsible deployment.

The link between trust and performance

Explainability plays a direct role in adoption, usability, and organisational performance. When users understand the logic behind AI recommendations, they engage with systems more confidently and provide meaningful feedback. This feedback loop improves model refinement and accelerates performance optimisation.

Conversely, a lack of transparency increases resistance. Research on consumer attitudes shows that unclear or unpredictable AI systems reduce confidence and increase perceived risk, particularly in high-impact scenarios. Organisations that invest in interpretability therefore not only reduce operational risk but also strengthen user confidence, driving higher ROI and smoother integration into business workflows.

Regulatory Forces Turning XAI Into a Mandate

EU AI Act: Transparency as legal infrastructure

The European Union AI Act represents the most comprehensive regulatory framework for artificial intelligence to date. It classifies AI systems based on risk and imposes strict obligations on those categorised as high-risk, including systems used in recruitment, healthcare, finance, law enforcement, and social services.

Under this framework, AI systems must be developed so that human users can appropriately interpret their outputs. Organisations are required to document how systems operate, clarify limitations and risks, and ensure that decisions can be reviewed and audited. These requirements institutionalise explainability as a core compliance function, not a design choice.

This legal model establishes a precedent that is influencing regulatory approaches worldwide, reinforcing explainability as a universal expectation in high-impact AI systems.

NIST's framework as a global benchmark

Complementing regulatory mandates, the NIST AI Risk Management Framework provides structured guidance for building trustworthy AI. It identifies explainability as a critical characteristic and embeds it across governance, lifecycle management, performance evaluation, and operational monitoring.

By encouraging organisations to define transparency standards tailored to different stakeholders, such as users, auditors, and policymakers, the framework normalises explainability as an integral component of AI maturity and risk management.

The Foundations of Explainable AI

What explainability means in real-world AI

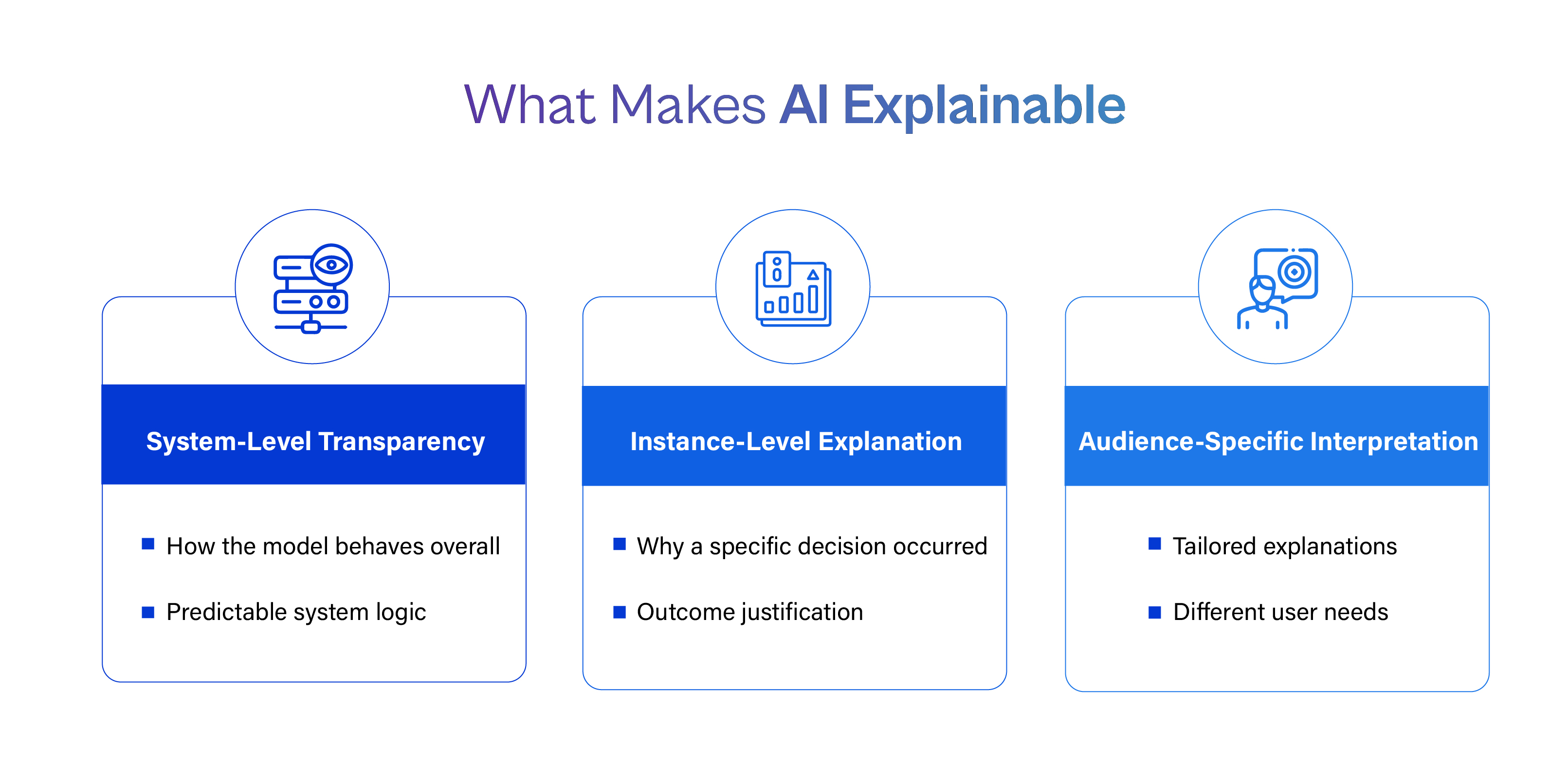

Explainable AI encompasses a range of approaches designed to clarify how models generate outputs. At its core, it seeks to bridge the gap between technical processing and human understanding. Explainability can be embedded directly into system design or applied through interpretative layers after training.

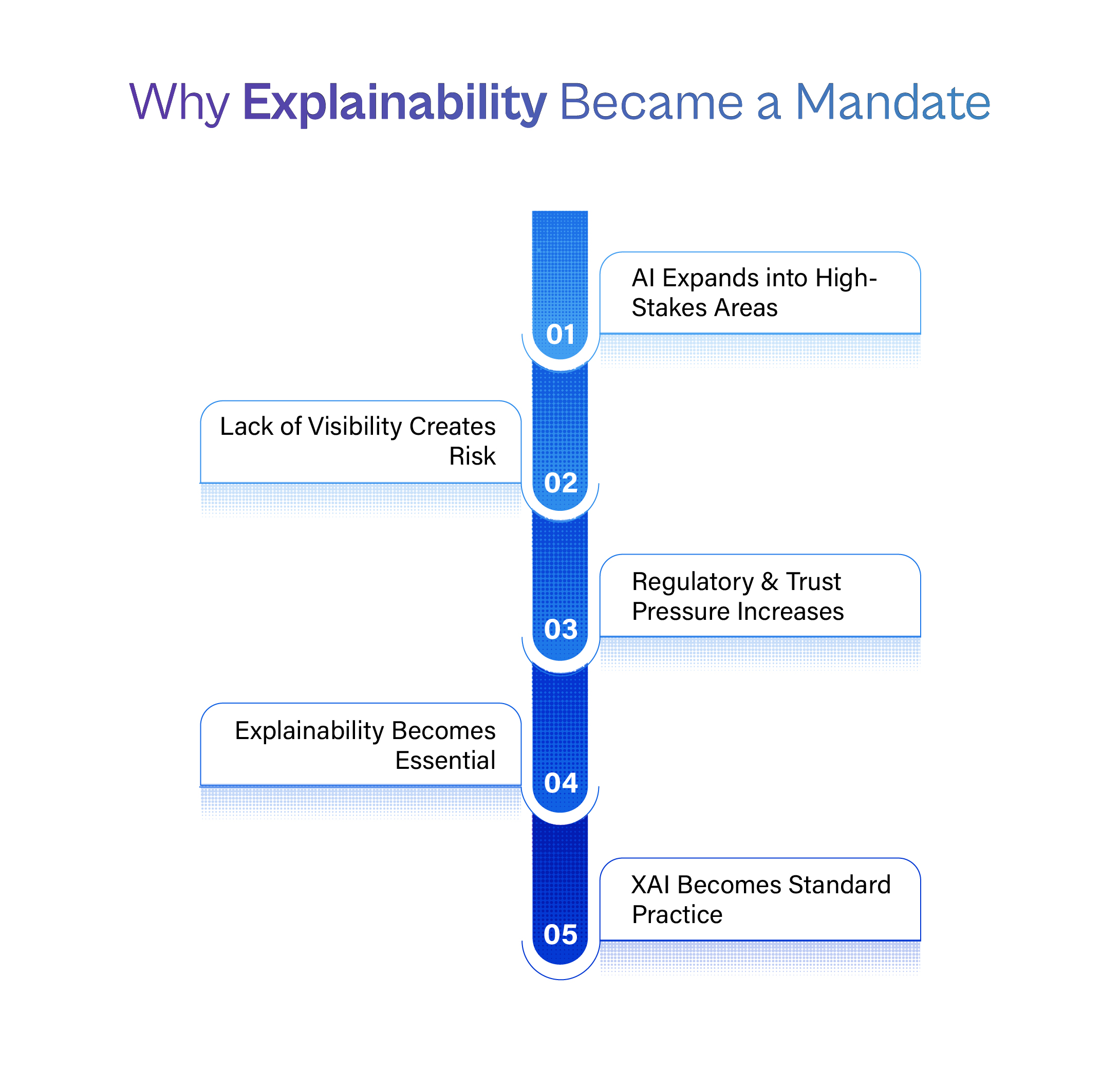

Three dimensions define explainability in practice:

- System-level transparency: Understanding how a model behaves overall

- Instance-level explanation: Understanding why a specific decision was made

- Audience-specific interpretation: Tailoring explanations to different user needs

This flexibility allows organisations to apply interpretability appropriately across technical, operational, and executive contexts.

Techniques enabling XAI

Several methods help decode complex model behaviour, allowing organisations to transform opaque AI systems into transparent, auditable, and trustworthy tools. These techniques do not merely describe what a model predicted, but illuminate why it produced that outcome and how stakeholders should interpret its logic.

- Feature importance analysis identifies which variables most strongly influence a model's predictions. This method assigns relative weight to inputs, showing how strongly each factor contributed to a particular outcome. For example, in credit scoring, it can reveal whether income level, repayment history, or existing liabilities played the dominant role in approving or rejecting an application. This helps business users verify that decisions align with policy intent and ethical guidelines, while also enabling teams to detect unintended bias or over-reliance on specific variables.

- Counterfactual explanations answer a practical question: "What would need to change for the outcome to be different?" These explanations highlight minimal adjustments that could reverse a decision. For instance, a counterfactual might demonstrate that a loan would have been approved if the applicant's credit utilisation were reduced by 5%. This approach is particularly valuable because it is intuitive, actionable, and user-centric, making it easier for individuals to understand what factors they can practically influence.

- Surrogate models simplify complexity by creating an interpretable model that mimics the behaviour of a more intricate one. While the original model remains unchanged, the surrogate acts as a transparent proxy that explains its behaviour in an understandable, rule-based, or tree-structured form, either across the whole model (a global surrogate) or around a single prediction (a local surrogate). This enables explainability in complex systems such as deep neural networks without compromising operational performance, although a surrogate's explanations are only as reliable as its fidelity to the original model.

- Example-based explanations contextualise decisions by referencing similar historical cases. Rather than presenting abstract numbers, the system demonstrates reasoning through concrete comparisons. For example, an AI system evaluating insurance claims may show how similar profiles were assessed in past scenarios, reinforcing transparency through practical illustration and comparative logic.

- Concept-based mapping links predictions to high-level, human-understandable concepts rather than isolated technical variables. Instead of explaining a medical diagnosis in terms of raw pixel values or numeric datasets, the model aligns its reasoning with recognisable clinical attributes, such as tissue density or structural irregularity. This allows domain specialists to better engage with AI outputs using terminology that reflects their professional language and reasoning.
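To make the first two techniques more concrete, here is a minimal Python sketch that applies permutation-based feature importance and a simple counterfactual search to a credit decision. The scoring rule `approve`, its weights, the 1.5 approval threshold, and the feature names are hypothetical stand-ins for an opaque production model. Importance is measured here as the share of the model's own decisions that change when one feature is shuffled, rather than against ground-truth outcomes.

```python
import random

# Hypothetical scoring rule standing in for an opaque credit model.
# income: thousands per year; repayment_history: 0 (poor) to 1 (perfect);
# utilisation: credit utilisation in percent.
def approve(applicant: dict) -> bool:
    score = (0.02 * applicant["income"]
             + 2.0 * applicant["repayment_history"]
             - 0.03 * applicant["utilisation"])
    return score >= 1.5


def agreement(model, rows: list, reference: list) -> float:
    """Fraction of rows where the model reproduces the reference decisions."""
    return sum(model(r) == y for r, y in zip(rows, reference)) / len(rows)


def permutation_importance(model, rows: list, feature: str, seed: int = 0) -> float:
    """Share of decisions that change when one feature is shuffled across rows."""
    reference = [model(r) for r in rows]          # the model's own decisions
    values = [r[feature] for r in rows]
    random.Random(seed).shuffle(values)           # break the feature-outcome link
    permuted = [{**r, feature: v} for r, v in zip(rows, values)]
    return 1.0 - agreement(model, permuted, reference)


def utilisation_counterfactual(model, applicant: dict, step: float = 1.0):
    """Smallest cut in utilisation (percentage points) that flips the decision to approve.

    Returns 0.0 if already approved, or None if even 0% utilisation is not enough.
    """
    candidate = dict(applicant)
    while True:
        if model(candidate):
            return applicant["utilisation"] - candidate["utilisation"]
        if candidate["utilisation"] <= 0:
            return None
        candidate["utilisation"] = max(0.0, candidate["utilisation"] - step)


# Synthetic portfolio, generated only for illustration.
rng = random.Random(42)
rows = [{"income": rng.uniform(20, 120),
         "repayment_history": rng.uniform(0, 1),
         "utilisation": rng.uniform(0, 100)} for _ in range(500)]

importance = {f: permutation_importance(approve, rows, f)
              for f in ("income", "repayment_history", "utilisation")}
print(importance)

applicant = {"income": 50, "repayment_history": 0.8, "utilisation": 45.0}
print(approve(applicant))                               # False
print(utilisation_counterfactual(approve, applicant))   # 9.0
```

The counterfactual search changes only one feature in fixed steps, which keeps the sketch easy to follow; real counterfactual tools typically search across several features at once and constrain the result to changes an applicant could plausibly make.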

In practice, effective explainability rarely relies on a single method. High-performing organisations integrate multiple techniques to provide layered explanations — combining statistical insight with narrative clarity. This hybrid approach ensures reliability, accessibility, and contextual depth, allowing AI decisions to remain both accurate and comprehensible without diluting technical integrity.
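As an illustration of layering, the following Python sketch produces a technical layer (signed per-feature contributions) and a narrative layer from the same numbers. It assumes a hypothetical linear credit score with invented weights and portfolio averages. For a linear score, measuring each feature's contribution as `weight * (value - average)` exactly decomposes how far the applicant's score sits from the average applicant's; non-linear models need dedicated attribution methods (SHAP is a common choice) to express the same idea.

```python
# Hypothetical linear credit score: weights and portfolio averages are invented.
WEIGHTS = {"income": 0.02, "repayment_history": 2.0, "utilisation": -0.03}
AVERAGE_APPLICANT = {"income": 60.0, "repayment_history": 0.5, "utilisation": 40.0}


def contributions(applicant: dict) -> dict:
    """Technical layer: how much each feature moves the score away from the average applicant."""
    return {f: w * (applicant[f] - AVERAGE_APPLICANT[f]) for f, w in WEIGHTS.items()}


def narrative(applicant: dict, top_k: int = 2) -> str:
    """Plain-language layer: describe only the top_k largest contributions."""
    ranked = sorted(contributions(applicant).items(),
                    key=lambda item: abs(item[1]), reverse=True)[:top_k]
    phrases = [f"{name.replace('_', ' ')} {'raised' if value > 0 else 'lowered'} "
               f"the score by {abs(value):.2f}" for name, value in ranked]
    return "Compared with an average applicant, " + " and ".join(phrases) + "."


applicant = {"income": 50.0, "repayment_history": 0.8, "utilisation": 45.0}
print(contributions(applicant))   # for analysts and auditors
print(narrative(applicant))       # for the applicant or a case handler
```

Because both layers are derived from one set of numbers, the narrative cannot drift away from the statistical explanation it summarises.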

Where Explainability Is Already Essential

Financial services

AI in financial services underpins credit scoring, risk modelling, fraud detection, and automated underwriting. Decisions directly impact access to capital and financial stability, making transparency a necessity. Clear explainability supports regulatory compliance, promotes ethical lending practices, and enhances customer trust in automated approvals and rejections (European Parliament, 2025).

Healthcare

In healthcare, AI assists clinicians by generating diagnostic insights and treatment recommendations. However, medical professionals must understand and validate these suggestions before making life-critical decisions. Explainability enables clinicians to evaluate confidence levels and apply professional judgment in a controlled and ethical manner.

Human resources

Recruitment and performance assessment tools influence hiring, promotions, and career paths. Lacking interpretability, algorithms risk perpetuating bias and discrimination. Transparent systems ensure accountability and preserve organisational equity in workforce decision-making.

Public sector applications

AI systems used in governance influence eligibility assessments, welfare distribution, and public service delivery. Explainability ensures democratic accountability and supports citizen trust in automated decision systems.

Explainability as a Core Governance Mechanism

Modern AI governance increasingly treats explainability as a central component of risk oversight. Mature governance models include structured policies that define when and how explanations must be produced, continuous monitoring to ensure decision consistency, and documentation frameworks that support auditing and regulatory inspections.

High-performing organisations embed explainability into every phase of AI deployment — from data sourcing and model training to production monitoring and post-deployment review. This integration transforms XAI into an institutional safeguard rather than a technical feature.

Building a Scalable XAI Strategy

A scalable approach to explainable AI requires deliberate planning, cross-functional alignment, and integration into enterprise AI architecture. Rather than treating explainability as an add-on, organisations must embed it into their operational, governance, and technical frameworks to ensure consistency, compliance readiness, and long-term reliability as AI adoption expands across functions and geographies.

Core elements of a scalable XAI strategy include:

- Risk-based classification of AI systems

Organisations must first categorise AI use cases based on their level of impact. Systems influencing financial access, employment, healthcare, or legal outcomes require significantly stronger explainability standards than internal optimisation tools. This prioritisation helps allocate resources effectively and ensures regulatory alignment.
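A risk-based classification can be encoded so that every new use case is tiered the same way. The Python sketch below is a simplified illustration: the tier names, the domain list (drawn from the paragraph above), and the controls attached to each tier are assumptions, not the legal categories of the EU AI Act or any other framework.

```python
from enum import Enum


class RiskTier(Enum):
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Domains the text singles out as high impact.
HIGH_IMPACT_DOMAINS = {"finance", "employment", "healthcare", "legal"}

# Hypothetical minimum explainability controls per tier.
REQUIRED_CONTROLS = {
    RiskTier.HIGH: ["per-decision explanation", "human review", "audit log"],
    RiskTier.LIMITED: ["model documentation", "audit log"],
    RiskTier.MINIMAL: ["model documentation"],
}


def classify(domain: str, affects_individuals: bool) -> RiskTier:
    """Assign a tier from the use case's domain and whether it decides about people."""
    if affects_individuals and domain in HIGH_IMPACT_DOMAINS:
        return RiskTier.HIGH
    if affects_individuals:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


for use_case, domain, affects in [("loan approval", "finance", True),
                                  ("support-ticket routing", "customer service", True),
                                  ("warehouse demand forecast", "logistics", False)]:
    tier = classify(domain, affects)
    print(f"{use_case}: {tier.value} -> {REQUIRED_CONTROLS[tier]}")
```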

- Model selection aligned with interpretability needs

Rather than defaulting to the most complex model, organisations should evaluate whether simpler, more interpretable architectures can meet performance goals. Where high-complexity models are necessary, robust post-hoc explanation mechanisms should be pre-defined as part of system design.

- Standardised explanation frameworks

Consistency in explanation format across teams improves clarity and auditability. Organisations should establish standard explanation templates, such as feature breakdowns, confidence scores, and decision narratives, that can be adapted for different audiences, including executives, operations teams, and regulators.
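One way to standardise explanations is a single record type that every model fills in and that is rendered differently for each audience. The Python sketch below is a hypothetical template: the field names, the three audience views, and the example values are illustrative assumptions rather than an established schema.

```python
from dataclasses import dataclass


@dataclass
class Explanation:
    """Hypothetical standard explanation record shared across teams."""
    decision: str
    confidence: float                    # model-reported probability, 0 to 1
    feature_breakdown: dict[str, float]  # signed contribution per feature
    narrative: str                       # plain-language summary

    def render(self, audience: str) -> str:
        ranked = sorted(self.feature_breakdown.items(),
                        key=lambda item: abs(item[1]), reverse=True)
        if audience == "executive":
            return f"{self.decision} ({self.confidence:.0%} confidence): {self.narrative}"
        if audience == "operations":
            return f"{self.decision}; main driver: {ranked[0][0]}"
        if audience == "regulator":
            lines = [f"Decision: {self.decision}", f"Confidence: {self.confidence:.2f}"]
            lines += [f"  {name}: {value:+.2f}" for name, value in ranked]
            return "\n".join(lines)
        raise ValueError(f"unknown audience: {audience!r}")


explanation = Explanation(
    decision="Declined",
    confidence=0.87,
    feature_breakdown={"utilisation": -0.90, "repayment_history": 0.30, "income": -0.20},
    narrative="High credit utilisation was the main reason.",
)
for audience in ("executive", "operations", "regulator"):
    print(explanation.render(audience))
```

Keeping one underlying record and varying only the rendering means every audience sees a view of the same facts, which is what makes the format auditable.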

- Integration into MLOps and AI pipelines

Explainability must become a built-in component of AI workflows. This includes automated generation of explanations, logging for audit purposes, version tracking, and continuous monitoring of explanation consistency. Treating explanations as first-class outputs ensures they scale alongside predictions.
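Treating explanations as first-class outputs can be as simple as writing them into the same audit record as the prediction. The following Python sketch assumes a JSON Lines style log; the field names and the model version string are hypothetical. Hashing a canonical form of the input lets auditors later confirm which data a decision was based on without copying the raw input into every log line.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(model_version: str, features: dict, prediction: str,
                 explanation: dict) -> str:
    """Build one JSON log line pairing a prediction with its explanation."""
    # Canonical JSON (sorted keys) so identical inputs always hash identically.
    canonical_input = json.dumps(features, sort_keys=True).encode("utf-8")
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "input_sha256": hashlib.sha256(canonical_input).hexdigest(),
        "prediction": prediction,
        "explanation": explanation,
    }
    return json.dumps(record, sort_keys=True)


line = audit_record(
    model_version="credit-scorer-1.4.0",   # hypothetical version tag
    features={"income": 50.0, "repayment_history": 0.8, "utilisation": 45.0},
    prediction="declined",
    explanation={"utilisation": -0.15, "repayment_history": 0.6, "income": -0.2},
)
print(line)  # e.g. append this line to an audit log file or store
```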

- Explainability performance monitoring

Just as models are evaluated for accuracy and bias, explanations must be assessed for quality, relevance, consistency, and usefulness. Tracking shifts in explanation behaviour can also act as an early warning system for model drift or data imbalance.
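A lightweight way to monitor explanation behaviour is to compare how attribution is distributed across features in a reference window and in recent traffic. The Python sketch below assumes per-decision attributions are already logged as signed contributions per feature; the 0.10 threshold and the example values are hypothetical and would need calibrating against real data. A rising share for a feature such as postcode, which can act as a proxy for sensitive attributes, is the kind of shift worth escalating.

```python
from statistics import mean


def attribution_profile(explanations: list) -> dict:
    """Share of total mean |attribution| carried by each feature."""
    features = explanations[0].keys()
    avg = {f: mean(abs(e[f]) for e in explanations) for f in features}
    total = sum(avg.values())
    return {f: value / total for f, value in avg.items()}


def explanation_drift(baseline: list, current: list, threshold: float = 0.10) -> dict:
    """Features whose share of attribution moved by more than `threshold`."""
    before, after = attribution_profile(baseline), attribution_profile(current)
    return {f: round(after[f] - before[f], 3)
            for f in before if abs(after[f] - before[f]) > threshold}


# Illustrative attributions: reliance shifts from income towards postcode.
baseline = [{"income": 0.40, "utilisation": 0.30, "postcode": 0.05},
            {"income": 0.50, "utilisation": 0.20, "postcode": 0.05}]
current = [{"income": 0.20, "utilisation": 0.20, "postcode": 0.35},
           {"income": 0.20, "utilisation": 0.30, "postcode": 0.25}]

print(explanation_drift(baseline, current))
```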

- Workforce enablement and training

A scalable strategy requires stakeholders to understand how to interpret and assess AI explanations. Training programmes should cover reading explanation outputs, recognising red flags, and knowing when to escalate concerns. This empowers users to act as informed overseers rather than passive recipients of AI outputs.

- Governance and documentation structures

Clear documentation ensures traceability and audit readiness. Policies should define when explanations are mandatory, how they must be stored, and who is responsible for review. This formalises explainability as an enterprise discipline rather than an optional design consideration.

Strategic Benefits Beyond Compliance

While regulations drive much of the momentum behind explainability, forward-thinking organisations recognise its broader value. Explainable AI accelerates problem resolution, simplifies model debugging, enhances stakeholder confidence, and improves decision quality across departments.

Transparent systems strengthen brand integrity, reduce crisis response time, and increase consumer loyalty. Additionally, internal teams operate more efficiently when they can clearly interpret model reasoning, enabling faster innovation cycles and better cross-functional collaboration.

The Economic and Operational Impact of Explainable AI

Explainable AI not only reduces regulatory risk and enhances trust, but it also creates measurable economic and operational value. When AI systems are interpretable, organisations can optimise performance faster, allocate resources more efficiently, and reduce costly errors.

From an operational perspective, explainability lowers the "diagnostic cost" of AI. When a model behaves unexpectedly, transparent decision logic allows teams to isolate faulty assumptions, biased inputs, or performance anomalies much faster than in opaque systems. This reduces downtime, limits financial losses, and accelerates iterative improvement.

From a strategic standpoint, explainability enhances decision quality across the enterprise. Business leaders can more confidently align AI recommendations with strategic objectives when they understand the reasoning behind them. This leads to better deployment decisions, improved forecasting accuracy, and more informed risk assessment.

Industries that rely on real-time automated decision-making, such as fintech, logistics, insurance, and e-commerce, particularly benefit from explainability as it enables continuous optimisation without sacrificing oversight. The result is a balance between automation speed and human control, which is critical for long-term scalability.

Explainability in the Age of Generative AI

The rise of generative AI has further intensified the need for explainability. Large language models and generative systems operate on complex probabilistic patterns, producing outputs that appear human-like but are not inherently interpretable. This has raised concerns around misinformation, hallucinations, content manipulation, and decision opacity.

In enterprise contexts, generative AI is increasingly used for contract analysis, customer interaction, strategic planning support, and knowledge management. Without explainability, organisations risk deploying systems that cannot justify their outputs, making governance and accountability extremely difficult.

Explainability mechanisms in generative AI are evolving to include:

- Traceability of source inputs and data lineage

- Justification layers that explain response composition

- Confidence scoring for output reliability

- Decision logging for auditability

These measures are essential for ensuring generative AI remains aligned with organisational values, legal requirements, and ethical boundaries. As generative systems become more widespread, explainability will define whether these tools are perceived as trustworthy instruments or unpredictable liabilities.
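The traceability and logging measures listed above can be sketched as a record that keeps the sources supplied to a generative model next to the sources its answer cites, and flags citations that cannot be traced back. This Python sketch is purely illustrative: the `GeneratedAnswer` type, the document IDs, the 0.5 review threshold, and the confidence value (assumed to come from some upstream scoring step) are hypothetical and not tied to any particular LLM API.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class GeneratedAnswer:
    """Hypothetical audit record for one generative-AI response."""
    question: str
    answer: str
    retrieved_sources: list[str]   # document IDs actually supplied to the model
    cited_sources: list[str]       # document IDs the answer claims to rely on
    confidence: float              # assumed output of an upstream scoring step

    def untraceable_citations(self) -> list[str]:
        """Citations that do not match any source the model was given."""
        return [s for s in self.cited_sources if s not in self.retrieved_sources]

    def log_entry(self) -> str:
        entry = asdict(self)
        entry["untraceable_citations"] = self.untraceable_citations()
        # Hypothetical review rule: any untraceable citation or low confidence.
        entry["needs_review"] = bool(entry["untraceable_citations"]) or self.confidence < 0.5
        return json.dumps(entry)


response = GeneratedAnswer(
    question="What is the termination notice period in contract 12?",
    answer="Ninety days, per clause 14.2.",
    retrieved_sources=["contract-12", "policy-4"],
    cited_sources=["contract-12", "memo-9"],
    confidence=0.72,
)
print(response.log_entry())
```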

Explainable AI and Ethical Responsibility

Beyond regulation and operational efficiency, explainable AI holds profound ethical implications. Algorithmic decisions can reinforce existing biases if left unchecked, particularly when sensitive attributes such as gender, ethnicity, socioeconomic status, or geographic location influence outcomes implicitly.

Explainability allows organisations to reveal hidden bias pathways and evaluate whether model decisions align with ethical commitments and societal expectations. Transparency empowers organisations to demonstrate accountability and justify how safeguards against discrimination are implemented.
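A common first screen for the kind of hidden bias described above is to compare favourable-outcome rates across groups. The Python sketch below computes a disparate impact ratio and checks it against the four-fifths (80%) rule of thumb used in US employment-selection guidance. The decisions and group labels are invented, and a low ratio is a signal to investigate with the explanation techniques discussed earlier, not a legal finding in itself.

```python
def selection_rate(decisions: list, groups: list, group: str) -> float:
    """Share of favourable decisions (1/True) within one group."""
    outcomes = [d for d, g in zip(decisions, groups) if g == group]
    if not outcomes:
        raise ValueError(f"no decisions recorded for group {group!r}")
    return sum(outcomes) / len(outcomes)


def disparate_impact(decisions: list, groups: list, protected: str, reference: str) -> float:
    """Ratio of the protected group's selection rate to the reference group's."""
    reference_rate = selection_rate(decisions, groups, reference)
    if reference_rate == 0:
        raise ValueError("reference group has no favourable decisions")
    return selection_rate(decisions, groups, protected) / reference_rate


# Invented example: 1 = approved, 0 = declined.
decisions = [1, 1, 0, 1, 0, 1, 1, 1, 1, 0]
groups = ["A"] * 5 + ["B"] * 5

ratio = disparate_impact(decisions, groups, protected="A", reference="B")
print(f"disparate impact ratio: {ratio:.2f}")
if ratio < 0.8:  # four-fifths rule of thumb
    print("below 0.8: review the features driving these decisions")
```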

Ethical explainability also supports procedural fairness. When individuals understand why a decision was made and how it can be challenged or corrected, they are more likely to perceive systems as fair, even if the outcome is unfavourable. This principle plays a critical role in customer relations, employee engagement, and public trust.

In this respect, explainability is not simply a technical practice but a moral imperative that supports inclusive, equitable, and humane deployment of artificial intelligence.

Human-Centric Design and Explainability

A critical aspect of successful XAI implementation is aligning explanations with human cognition. Simply making a model transparent does not guarantee understanding. Explanations must be designed with psychological and behavioural considerations in mind.

Effective explainability integrates the following principles:

- Clarity: Avoiding unnecessary technical jargon

- Relevance: Highlighting only the most impactful factors

- Context: Framing explanations within domain-specific logic

- Consistency: Maintaining predictable explanation formats

- Actionability: Allowing users to respond or adapt

User experience design, therefore, plays a significant role in how explanations are perceived and utilised. A technically accurate explanation that is poorly presented can undermine trust as easily as no explanation at all.

Human-centric XAI ensures decisions are not only transparent but also meaningful to the people who rely on them.

The Future Trajectory of Explainable AI

Explainable AI is evolving alongside advancements in AI capabilities. Future developments are expected to focus on adaptive explanations that dynamically adjust based on user role, context, and decision impact. Such systems would offer layered explanations, ranging from high-level summaries to deep technical insights, within a unified framework.

Integration with real-time AI monitoring systems will also become standard practice, enabling continuous interpretability assessment and proactive risk detection. This will allow organisations to address issues before they escalate into compliance crises or reputational damage.

In parallel, regulatory expectations are likely to keep tightening. As more jurisdictions adopt risk-based AI regulations, explainability is likely to be codified ever more widely, and compliance will require not only proof of performance but also proof of understanding.

This evolution positions explainable AI not as a static requirement, but as a continuously evolving discipline central to responsible innovation.

Conclusion

Explainable AI has matured into a foundational requirement for any organisation deploying artificial intelligence at scale. As regulatory frameworks strengthen and public scrutiny intensifies, the ability to understand, justify, and govern AI decisions is essential.

Across industries, explainability safeguards organisations against systemic risk, enhances decision integrity, and strengthens trust among users, regulators, and stakeholders. It transforms AI from an opaque automation tool into a transparent, accountable decision-making partner.

Organisations that embed explainability into their AI strategies today will be better positioned to navigate regulatory complexity, earn stakeholder confidence, and sustain long-term growth. In a world increasingly shaped by intelligent systems, the question is no longer whether AI can make accurate decisions, but whether those decisions can be clearly understood and responsibly governed.

Explainability is not the future of AI. It is the standard that defines its legitimacy.

Build AI systems that can be trusted, governed, and defended.

Discover how Cogent Infotech helps organisations embed explainable AI into their enterprise strategy, ensuring transparency, regulatory readiness, and ethical accountability without compromising performance.

Connect with our experts to make explainability a core strength of your AI initiatives.
