Serverless Security and Zero Trust: Strategies for End-to-End Protection in Cloud-Native Environments

Introduction

Serverless computing delivers agility and scalability, but it also introduces new security challenges. Traditional perimeter-based models fall short in cloud-native, ephemeral environments. Adopting a Zero Trust security approach, centered on least privilege IAM roles, Runtime Application Self-Protection (RASP), Policy-as-Code, and real-time monitoring, is essential to protect serverless workloads. By securing function-level access, API calls, and runtime behavior, organizations can reduce attack surfaces and enforce continuous verification. Integrating these strategies into your CI/CD pipelines builds a resilient, end-to-end security posture. In a world of dynamic applications, combining serverless architecture with Zero Trust is the key to future-ready, secure development.

Serverless Security and Zero Trust

In an era defined by rapid innovation, automation, and scale, serverless computing has emerged as a cornerstone of modern software development. It enables organizations, from agile startups to global enterprises, to deploy and scale applications without the operational burden of managing infrastructure. As part of the broader cloud-native movement, serverless architectures support faster development cycles, reduced costs, and greater adaptability. However, these benefits come with new and urgent challenges, particularly regarding security.

Traditional security models, built around fixed perimeters and long-lived servers, no longer apply in environments where workloads are transient, event-driven, and spun up or down in milliseconds. In serverless computing, there are no persistent hosts to protect, no predictable sessions to monitor, and no clear perimeter to defend. These fundamental shifts demand a rethinking of security, especially in cloud-native environments where the pace of change is relentless and the attack surface continuously evolves.

This is where the Zero Trust model becomes essential. Rooted in the principle of "never trust, always verify," Zero Trust security is designed for dynamic, distributed architectures. It assumes no implicit trust across users, services, or applications. Every API call, function invocation, and access request must be authenticated, authorized, and continuously validated. This approach aligns well with the ephemeral and decentralized nature of serverless environments.

In this article, we explore how to apply Zero Trust principles specifically to serverless workloads, focusing on four strategic areas critical to cloud-native security:

- Designing fine-grained IAM roles and implementing least privilege access tailored to Function-as-a-Service (FaaS) platforms

- Securing workloads at runtime through Runtime Application Self-Protection (RASP)

- Monitoring ephemeral functions effectively without traditional agents or persistent logs

- Enforcing security policies at scale using policy-as-code frameworks for functions and APIs

Organizations can achieve scalability and resilience by combining serverless agility with Zero Trust discipline, transforming modern cloud-native infrastructure into a secure, future-ready foundation.

Understanding Zero Trust Principles

What Is Zero Trust?

Zero Trust is a modern cybersecurity framework built on "never trust, always verify." Unlike traditional security models that rely on trusted network perimeters or IP-based controls, Zero Trust assumes that no request, whether from inside or outside the network, should be implicitly trusted. Instead, each access attempt must undergo strict identity verification, authorization, and continuous monitoring.

At its core, Zero Trust is designed for today's highly dynamic, distributed environments where users, applications, and services span across networks, clouds, and devices. It is especially relevant in cloud-native ecosystems, such as serverless architectures, where functions execute on demand, infrastructure is abstracted, and perimeter-based defenses are no longer viable.

Key principles of the Zero Trust model include:

- Continuous Verification: Every request must be authenticated and authorized based on user identity, device posture, and contextual factors, regardless of its origin.

- Least Privilege Access: Users and services are granted only the minimum permissions necessary to perform their tasks, nothing more.

- Micro-Segmentation: Workloads and resources are isolated into smaller zones, reducing the risk of lateral movement in case of a breach.

- Continuous Monitoring and Analytics: Security teams monitor real-time behavior patterns and system interactions to detect anomalies, enforce policies, and respond to threats proactively.

Why Zero Trust Matters in Serverless Environments

Serverless architectures radically change how applications are built and deployed. Code is organized into discrete, event-driven functions that scale automatically and execute in stateless containers managed by cloud providers. While this design promotes agility, it also eliminates the infrastructure visibility and control that traditional security models depend on.

Here are the key challenges that make Zero Trust essential in serverless environments:

- No Fixed Perimeter: In a serverless setup, functions interact with cloud services, databases, APIs, and external endpoints. Without clear network boundaries, perimeter-based defenses like firewalls become ineffective.

- Dynamic and Ephemeral Workloads: Serverless functions spin up and down in milliseconds. This short lifespan makes it challenging to track workloads using conventional monitoring or agent-based tools.

- Increased API Exposure: Serverless applications rely heavily on APIs to integrate various services. Attackers can exploit misconfigured or insecure APIs to access sensitive data or compromise functions.

- Resource Abuse and DoS Risk: Serverless platforms scale automatically, but this can be exploited in denial-of-service (DoS) attacks to exhaust resources and disrupt services.

Zero Trust directly addresses these issues by shifting the security focus from infrastructure to individual interactions:

- Every function invocation is treated as a unique security boundary.

- Access controls are enforced per API call or service request.

- Identity is verified not just at the edge but throughout the service mesh.

- Policy enforcement and monitoring are embedded into the lifecycle of each function.

By applying Zero Trust to serverless, organizations can significantly reduce the attack surface, prevent unauthorized lateral movement, and ensure that their cloud-native applications remain secure, even in the face of constantly evolving threats.
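To make the idea of per-invocation verification concrete, here is a minimal sketch of a function that re-authenticates and re-authorizes every call. It uses only the Python standard library. The signing key, the `claims`/`signature` event fields, and the `orders:read` scope are hypothetical names chosen for illustration; a real deployment would more likely validate tokens issued by an identity provider and load secrets from a secrets manager.

```python
import hashlib
import hmac
import json
import time
from typing import Optional

# Hypothetical shared secret; in practice this would come from a secrets manager.
SIGNING_KEY = b"example-signing-key"


def sign(claims: dict, key: bytes = SIGNING_KEY) -> str:
    """Return a hex HMAC-SHA256 signature over the canonical JSON form of the claims."""
    body = json.dumps(claims, sort_keys=True, separators=(",", ":")).encode()
    return hmac.new(key, body, hashlib.sha256).hexdigest()


def verify_request(event: dict, required_scope: str, now: Optional[float] = None) -> bool:
    """Authenticate and authorize a single invocation; nothing is trusted by default."""
    claims = event.get("claims")
    signature = event.get("signature")
    if not isinstance(claims, dict) or not isinstance(signature, str):
        return False
    # Authentication: the signature must match the claims exactly (constant-time compare).
    if not hmac.compare_digest(sign(claims).encode(), signature.encode()):
        return False
    # Freshness: reject expired claims so leaked tokens age out quickly.
    current = time.time() if now is None else now
    expires_at = claims.get("exp")
    if not isinstance(expires_at, (int, float)) or expires_at <= current:
        return False
    # Authorization: the caller must hold the scope this function requires.
    return required_scope in claims.get("scopes", [])


def handler(event: dict, context: object = None) -> dict:
    """Hypothetical function entry point that verifies every call before doing work."""
    if not verify_request(event, required_scope="orders:read"):
        return {"statusCode": 403, "body": "forbidden"}
    return {"statusCode": 200, "body": "ok"}
```

The check runs inside the function rather than only at the edge, so a request that bypasses the gateway still has to prove its identity and scope.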

IAM Roles and Least Privilege in Function-as-a-Service (FaaS)

In a serverless architecture, where applications are composed of small, independently executing functions, Identity and Access Management (IAM) plays a foundational role in maintaining security. Every function invocation represents a potential entry point for exploitation, making it imperative to tightly control what each function can access. That's where the principle of least privilege comes in.

Defining IAM Roles in Serverless

Serverless platforms like AWS Lambda, Google Cloud Functions, and Azure Functions use IAM roles to determine what resources a function can access during execution. Unlike traditional monolithic systems, serverless functions are discrete, ephemeral, and often highly specialized.

Each function should be assigned a distinct IAM role tailored to its responsibilities. For instance, if a function is designed to read user data from a database, its IAM policy should only permit read access to that particular dataset and nothing more. Similarly, a function responsible for uploading logs to Amazon S3 should only have write permissions to a specific folder or object prefix, not blanket access to the entire bucket.

By defining IAM roles at the function level, organizations can prevent unauthorized access, minimize the blast radius of potential breaches, and support the Zero Trust principle of "never trust, always verify."
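The two examples above (a read-only database function and a log uploader limited to one prefix) could be expressed as AWS IAM policy documents along the following lines. This is a sketch: the helper names, the bucket name, the prefix, and the table ARN are hypothetical, and the exact actions a real function needs depend on how it uses the service.

```python
import json


def read_only_table_policy(table_arn: str) -> dict:
    """Build a policy document allowing read-only access to a single DynamoDB table."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadUserTableOnly",
                "Effect": "Allow",
                "Action": ["dynamodb:GetItem", "dynamodb:Query"],
                "Resource": table_arn,
            }
        ],
    }


def log_upload_policy(bucket: str, prefix: str) -> dict:
    """Build a policy document allowing writes only under one prefix of one S3 bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "WriteLogsUnderPrefix",
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                # Scope to objects under the prefix, not the whole bucket.
                "Resource": f"arn:aws:s3:::{bucket}/{prefix.strip('/')}/*",
            }
        ],
    }


if __name__ == "__main__":
    # Hypothetical names, used only to show the rendered JSON.
    print(json.dumps(log_upload_policy("example-log-bucket", "app-logs/"), indent=2))
```

Neither policy grants list, delete, or administrative actions, so a compromised function can do little beyond its intended task.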

Implementing Least Privilege Access

The principle of least privilege, granting only the permissions necessary for a given task, is foundational to secure serverless design. In serverless computing, where hundreds of functions may be executing concurrently, this principle is essential, not optional. Over-permissioned roles remain one of the most common vulnerabilities in cloud-native applications.

Here's how to effectively implement least privilege in FaaS environments:

- Function-Specific IAM Roles: Each function should be assigned its own IAM role tailored to its task. Avoid using shared or global roles across services. For example, a function responsible for processing payments should not have access to user account data or messaging queues.

- Granular IAM Policies: Avoid using broad, overly permissive roles like "AdministratorAccess" or wildcards. Instead, define roles that specify exact actions on specific resources.

- Use Temporary Credentials: Leverage short-lived credentials, such as those issued by AWS Security Token Service (STS), to avoid long-lived keys that can be stolen or misused.

- Audit and Review: Monitor and review IAM role usage with logging and analytics tools. Periodic audits can identify unused or excessive permissions that may have accumulated over time, enabling organizations to refine and tighten access controls.

- Use Condition Keys: Add constraints to IAM policies, such as time-based access or IP address conditions, to restrict how and when permissions can be used.

- Automate Policy Generation: Tools like Policy Sentry can help generate least privilege policies based on actual CRUD (Create, Read, Update, Delete) operations needed by each function.

By implementing these practices, organizations significantly reduce the risk of privilege escalation and lateral movement in case of a function compromise.
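As an illustration of the audit step, the sketch below scans an IAM policy document for wildcard actions and resources. It is a simplified, hypothetical checker written for this article, not a substitute for tools such as Policy Sentry or a cloud provider's own access analysis, and it ignores `NotAction`, `NotResource`, and condition blocks.

```python
from typing import Any, List


def _as_list(value: Any) -> List[Any]:
    """IAM allows a single string or a list; normalize to a list."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def find_broad_statements(policy: dict) -> List[str]:
    """Return a finding for each Allow statement that uses a wildcard."""
    findings: List[str] = []
    for index, statement in enumerate(_as_list(policy.get("Statement"))):
        if statement.get("Effect") != "Allow":
            continue
        label = statement.get("Sid", f"statement[{index}]")
        for action in _as_list(statement.get("Action")):
            # Flags both "*" and service-wide grants such as "s3:*".
            if action == "*" or action.endswith(":*"):
                findings.append(f"{label}: wildcard action {action!r}")
        for resource in _as_list(statement.get("Resource")):
            if resource == "*":
                findings.append(f"{label}: wildcard resource")
    return findings
```

Running a check like this against every function's role during review makes over-permissioned statements visible before they reach production.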

Role Segmentation and Workload Isolation

Segmenting IAM roles along function boundaries enhances workload isolation and supports the zero-trust model by assuming that any individual function could be compromised. This isolation ensures a breach in one function does not cascade into broader system access.

Role segmentation also simplifies compliance, governance, and incident response. Clear mappings between functions and their permissions make it easier to audit who accessed what, when, and why.

This segmentation supports Zero Trust goals by:

- Enforcing strict separation of duties

- Preventing cross-function contamination in the event of a breach

- Simplifying compliance, audit, and policy management

For example, functions that access customer PII can be grouped under roles with stricter monitoring and alerting policies, while tasks that serve static web content may require far fewer controls.

Case Study: IAM in Action

A top university in the U.S. worked with Deloitte to update its identity and access management (IAM) system. The goal was to make it safer, make provisioning easier, and support a wide range of users, such as students, faculty, staff, and affiliates. Enforcing least privilege with the old system was difficult because it lacked the control and flexibility to meet changing compliance needs.

The new IAM solution ensured that users only had the permissions they needed for their roles by using entitlement-based and role-based access controls. Certain groups of users, like visiting researchers, were given temporary and limited access. Provisioning and de-provisioning were done automatically based on events in the lifecycle. Business managers could see access requests and give permissions based on clear, business-friendly rights descriptions in a custom portal.

By aligning access with actual responsibilities and isolating roles across user types, the university minimized over-permissions, improved auditability, and laid the groundwork for Zero Trust practices, mirroring the same principles required in secure serverless environments like FaaS.

Runtime Protection and RASP in Serverless

As serverless architectures gain momentum, traditional perimeter-based security models fall short. Serverless functions are ephemeral, event-driven, and highly distributed, traits that make them efficient but also more challenging to monitor and defend. Runtime Application Self-Protection (RASP) emerges as a critical solution by embedding security directly into application code, enabling real-time threat detection and mitigation from within.

What is RASP?

Runtime Application Self-Protection (RASP) is a security technology that operates within the application. Unlike external tools such as firewalls or web application firewalls (WAFs), RASP solutions monitor and intercept events during runtime, allowing applications to detect, diagnose, and block malicious behavior as it occurs. This is particularly relevant in serverless environments, where traditional host-based or network-level monitoring tools have limited visibility.

Why RASP Matters for Serverless

Serverless functions, such as those on AWS Lambda or Azure Functions, are short-lived, stateless, and run in fully managed environments. These characteristics improve scalability and reduce operational overhead but introduce new security challenges. The dynamic and transient nature of serverless computing makes applying conventional controls like host-based intrusion detection or endpoint protection difficult.

RASP addresses these limitations by offering:

- Application-layer visibility: Embedded directly into functions, RASP understands application logic and context.

- Real-time response: Malicious input can be blocked immediately before it exploits a vulnerability.

- Minimal dependency on infrastructure: RASP doesn't rely on persistent OS-level agents, making it ideal for serverless workloads.

Best Practices for Implementing RASP in Serverless

- Use Lightweight, Serverless-Compatible RASP Tools: Choose RASP solutions designed explicitly for ephemeral environments. These tools should support agentless deployment or lightweight instrumentation via SDKs, Lambda Layers, or cloud provider-native integrations.

- Monitor Behavioral Patterns: Track execution flow, API calls, file access, and outbound network connections. Any deviation from established behavior, such as unusual query patterns or access attempts, should trigger alerts or automatic interventions.

- Automate Threat Response: Upon detecting a threat, RASP tools should terminate suspicious executions, rotate credentials, or trigger alerts. Automation reduces dwell time and limits damage in real time.

- Integrate with CI/CD Pipelines: Incorporate RASP configurations and policies into your CI/CD workflows to ensure security is embedded throughout the development lifecycle. This integration can be facilitated by tools like GitHub Actions, AWS CodePipeline, and Azure DevOps.

- Combine with Pre-Deployment Scanning: Use tools like Snyk, GitHub Dependabot, or AWS CodeGuru to detect known vulnerabilities in dependencies. RASP complements this by protecting against threats that emerge post-deployment.
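The following sketch shows the general shape of in-function protection: a decorator that inspects the incoming event and blocks the call before the business logic runs. It is a toy illustration in the spirit of RASP, not how any commercial or open-source RASP product works internally; the pattern list, `runtime_guard`, and `get_profile` are all hypothetical, and real tools rely on much deeper instrumentation than pattern matching.

```python
import functools
import re
from typing import Any, Callable, Iterator

# Hypothetical, deliberately small signature set for illustration only.
SUSPICIOUS_PATTERNS = [
    re.compile(r"(?i)\bunion\b.+\bselect\b"),  # SQL injection probe
    re.compile(r"(?i)<script\b"),              # script injection probe
    re.compile(r"\.\./"),                      # path traversal
]


def _iter_strings(value: Any) -> Iterator[str]:
    """Yield every string found anywhere in a nested event payload."""
    if isinstance(value, str):
        yield value
    elif isinstance(value, dict):
        for key, item in value.items():
            yield from _iter_strings(key)
            yield from _iter_strings(item)
    elif isinstance(value, (list, tuple)):
        for item in value:
            yield from _iter_strings(item)


def runtime_guard(handler: Callable[..., dict]) -> Callable[..., dict]:
    """Wrap a function handler so suspicious input is rejected before it executes."""
    @functools.wraps(handler)
    def wrapper(event: dict, context: object = None) -> dict:
        for text in _iter_strings(event):
            if any(pattern.search(text) for pattern in SUSPICIOUS_PATTERNS):
                # Block immediately; the wrapped handler never sees the input.
                return {"statusCode": 400, "body": "request blocked"}
        return handler(event, context)
    return wrapper


@runtime_guard
def get_profile(event: dict, context: object = None) -> dict:
    """Hypothetical business function protected by the guard."""
    return {"statusCode": 200, "body": f"profile for {event['user']}"}
```

Because the guard ships with the function code, it needs no host agent, which is the property that makes this style of protection a fit for ephemeral workloads.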

Leading Tools for Serverless Runtime Protection

- Trend Micro Cloud One Application Security: Provides lightweight RASP for cloud-native apps, including AWS Lambda and Azure Functions.

- Contrast Security: Offers code-level instrumentation and behavior-based threat detection for microservices and serverless functions.

- Datadog Application Security (via Sqreen): Delivers runtime protection tailored to serverless workloads, with support for real-time blocking and observability.

- Imperva RASP: Features language-theoretic analysis to detect and neutralize known and zero-day attacks.

- PyRASP: A lightweight, open-source RASP library designed for Python functions in AWS Lambda and other FaaS platforms.

Challenges and Considerations

While RASP offers valuable protection, serverless-specific constraints require careful consideration:

- Cold Start Overhead: RASP instrumentation can increase cold start latency; solutions should be optimized for fast initialization.

- Limited OS Visibility: Lack of access to the underlying infrastructure requires creative instrumentation methods.

- Distributed Tracing: Functions are often part of complex event chains. For effective detection, RASP tools must support distributed tracing and correlation.

In an environment where applications run on-demand and in isolation, securing workloads at runtime is no longer optional. RASP equips serverless applications with the ability to detect and neutralize threats from within, reinforcing zero-trust principles. When combined with proactive vulnerability scanning and least-privilege design, RASP ensures that serverless architectures remain agile and secure.

Monitoring and Alerting for Ephemeral Workloads

Traditional monitoring tools fall short in a serverless architecture, where workloads are transient and functions may exist for only milliseconds. Yet in a Zero Trust environment, visibility is not optional; it is essential. Effective monitoring and alerting help ensure secure, performant, and reliable operations, even when the underlying compute resources are fleeting.

The Challenges of Monitoring Serverless Architectures

Serverless workloads are inherently ephemeral: they spin up on demand, perform their tasks, and terminate, often in milliseconds. This transient lifecycle presents a fundamental challenge for traditional monitoring tools, which rely on persistent agents or hosts to collect telemetry. As a result, capturing meaningful security and performance insights requires purpose-built observability strategies tailored to the dynamic nature of serverless environments.

Beyond their short lifespans, serverless functions typically operate in highly distributed, event-driven architectures. This makes it harder to trace individual transactions, detect misconfigurations, or correlate anomalous behaviors across services without centralized instrumentation.

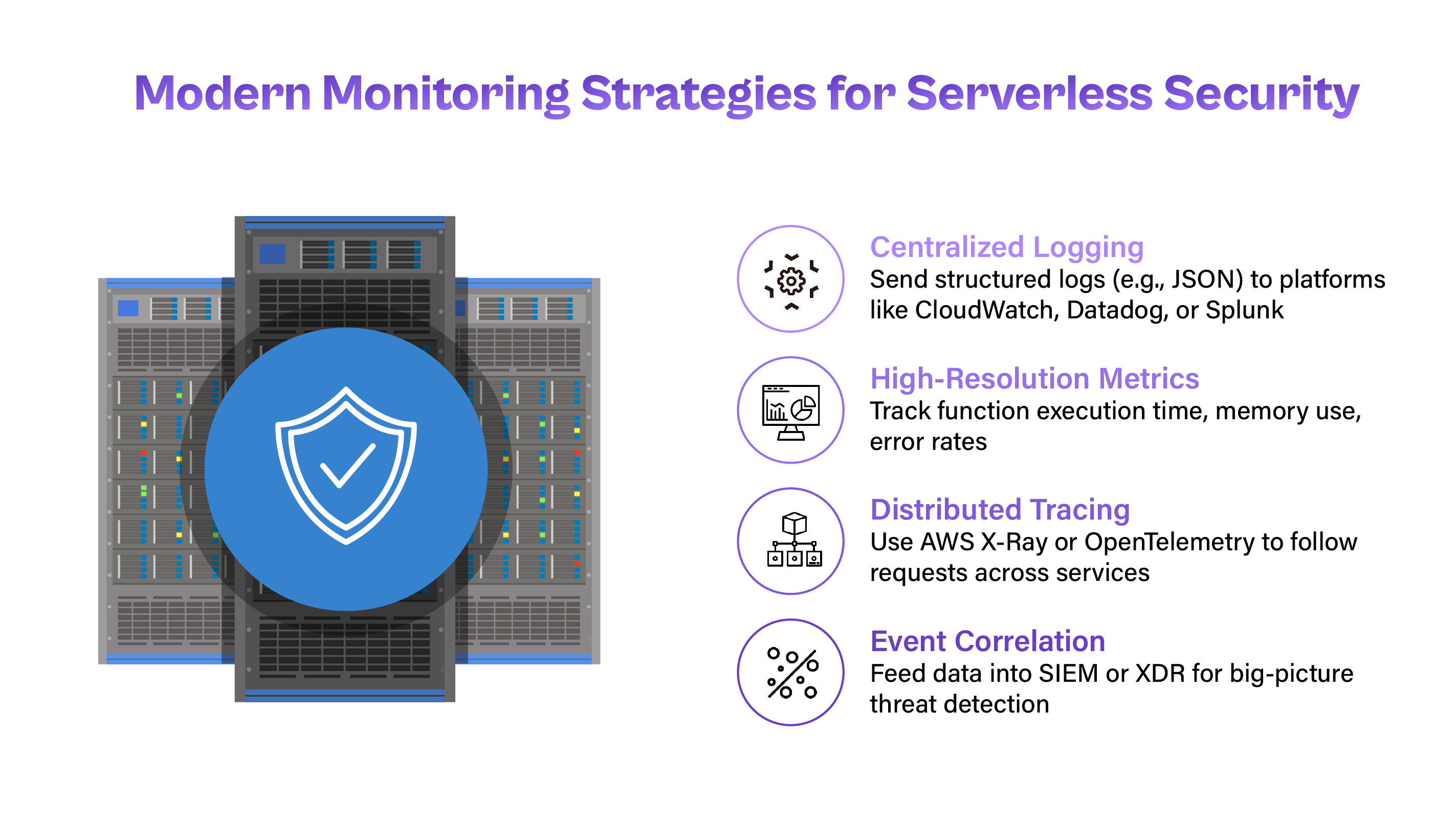

Strategies for Effective Monitoring

To monitor ephemeral workloads effectively, organizations must embrace cloud-native, serverless-aware observability solutions.

- Centralized Logging: Route all logs to a unified log aggregation platform such as AWS CloudWatch, Azure Monitor, Google Cloud Logging, or third-party solutions like Datadog or Splunk. Structured logging (e.g., JSON format) improves the ease and accuracy of downstream analysis.

- High-Resolution Metrics: Collect detailed metrics at the function level, such as execution time, memory usage, invocation count, and error rates, to monitor operational health and identify performance regressions or security anomalies.

- Distributed Tracing: Implement tools like AWS X-Ray or OpenTelemetry to trace the flow of individual requests across multiple serverless functions and services. Tracing enables the detection of latency bottlenecks, service dependencies, and misbehaving components in complex architectures.

- Event Correlation: Feed telemetry data into a Security Information and Event Management (SIEM) or Extended Detection and Response (XDR) system to correlate across data sources, enabling threat detection beyond isolated function behavior.
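As a sketch of structured logging for centralized aggregation, the example below emits one JSON object per invocation using Python's standard `logging` module. The logger name `orders-fn`, the `fields` key, and the `handle` function are hypothetical. On a managed platform, lines written to standard output are typically collected by the provider's logging service, but that wiring is outside this sketch.

```python
import json
import logging
import sys
import time
import uuid
from typing import IO


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON line."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Merge per-invocation fields passed through `extra={"fields": {...}}`.
        entry.update(getattr(record, "fields", {}))
        return json.dumps(entry, sort_keys=True)


def build_logger(stream: IO[str] = sys.stdout) -> logging.Logger:
    """Create a logger that writes JSON lines to the given stream."""
    logger = logging.getLogger("orders-fn")
    logger.handlers.clear()
    stream_handler = logging.StreamHandler(stream)
    stream_handler.setFormatter(JsonFormatter())
    logger.addHandler(stream_handler)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


def handle(event: dict, logger: logging.Logger) -> dict:
    """Hypothetical function body that logs one structured line per invocation."""
    started = time.perf_counter()
    request_id = event.get("request_id") or str(uuid.uuid4())
    status = "ok" if "order_id" in event else "error"
    duration_ms = round((time.perf_counter() - started) * 1000, 3)
    logger.info(
        "invocation finished",
        extra={"fields": {"request_id": request_id, "status": status, "duration_ms": duration_ms}},
    )
    return {"request_id": request_id, "status": status}
```

Carrying the same `request_id` through every function in an event chain is what lets a log platform or tracer stitch short-lived invocations back into one transaction.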

Setting Up Alerts for Anomalous Behavior

In a Zero Trust environment, monitoring alone is insufficient; alerts must be configured to detect and respond to misconfigurations and malicious activity. Effective alerting includes:

- Threshold-Based Alerts: Set predefined limits for key metrics like execution time, memory consumption, error rates, and cold start durations. Alert when thresholds are breached, especially when deviations are sustained or unexpected.

- Anomaly Detection with Machine Learning: Use cloud-native or third-party anomaly detection systems that apply statistical or machine learning models to identify patterns inconsistent with historical baselines. For example, detecting a spike in invocations from a new IP address or an unusual access pattern to a storage bucket.

- Security-Focused Triggers: Monitor for signs of malicious activity, such as unauthorized access attempts, permission errors, or excessive retries. Integration with AWS CloudTrail, Azure Activity Logs, and tools like AWS GuardDuty can enrich detections.

- Integration with Notification Systems: Route alerts into automated incident management platforms such as PagerDuty, Opsgenie, or Slack-based workflows. Ensure alerts trigger tickets, notifications, or playbooks for quick triage and remediation.
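The first two alert types can be sketched in a few lines. The example below pairs a static threshold check with a simple z-score test against a historical baseline. The metric names and the limit of three standard deviations are assumptions for illustration; managed anomaly detection services use considerably more sophisticated models.

```python
import statistics
from typing import Dict, List, Sequence


def threshold_alerts(metrics: Dict[str, float], limits: Dict[str, float]) -> List[str]:
    """Return the names of metrics that exceed their configured limit."""
    return sorted(name for name, limit in limits.items() if metrics.get(name, 0) > limit)


def is_anomalous(history: Sequence[float], current: float, z_limit: float = 3.0) -> bool:
    """Flag a value that sits more than z_limit standard deviations from the baseline."""
    if len(history) < 2:
        return False  # not enough data to form a baseline
    mean = statistics.fmean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return current != mean
    return abs(current - mean) / stdev > z_limit
```

For example, with a baseline of roughly 100 invocations per minute, a sudden jump to 400 is flagged while a reading of 102 is not.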

Best Practices

To make monitoring and alerting effective in a serverless context:

- Establish Baselines: Define normal behavior per function and environment so that deviations can be detected more accurately.

- Minimize Alert Fatigue: Tune thresholds to reduce false positives. Use adaptive alerting where possible.

- Correlate Across Layers: Combine data from serverless functions, APIs, identity providers, and data stores to identify multi-vector attacks.

- Audit and Iterate: Continuously evaluate alerting logic and telemetry coverage as new functions are deployed or modified.

Effective monitoring and alerting for ephemeral workloads requires a mindset shift from host-centric visibility to event-driven, real-time observability. With the right strategy and tools in place, organizations can gain deep visibility into even the most short-lived workloads, and respond rapidly when things go wrong.

Policy-as-Code for Functions and APIs

Maintaining consistent security governance is essential in serverless and API-driven environments, where deployments are frequent and dynamic. Policy-as-Code (PaC) is a foundational practice that aligns with Zero Trust principles by codifying security and compliance policies into machine-readable formats, making them version-controlled, testable, and automatable.

What is Policy-as-Code?

Policy-as-Code enables organizations to define and manage rules governing access, resource usage, and behavior through code. These policies are stored in version control systems, can be tested like any software artifact, and are automatically enforced through CI/CD pipelines or at runtime.

Implementing Policy-as-Code in Serverless Environments

Implementing PaC in serverless environments involves integrating policy definitions into the infrastructure and deployment process. Key strategies include:

- Declarative Policy Definitions: Define what actions each function can perform and which resources it may access, adhering to the principle of least privilege. Use tools like Open Policy Agent (OPA) or HashiCorp Sentinel to express policies in declarative formats (e.g., Rego), enabling fine-grained control over function behavior, resource access, and data handling.

- Infrastructure as Code Integration: Embed policies within IaC frameworks like AWS SAM, Serverless Framework, Terraform, or CloudFormation. This allows policy enforcement to happen as part of infrastructure provisioning.

- CI/CD Pipeline Integration: Integrate policy checks into your continuous integration and deployment pipelines. Use tools like Conftest to validate configurations and catch violations early before the code is deployed to production.

- Runtime Enforcement via API Gateways: Apply policies directly to APIs using API gateways (e.g., AWS API Gateway, Kong). Gateways can enforce rules such as rate limiting, input validation, IP whitelisting, and authentication/authorization checks.

- Version Control and Change Management: Store policy code in version-controlled repositories (e.g., GitHub, GitLab) to enable collaboration, auditing, and rollback capabilities.

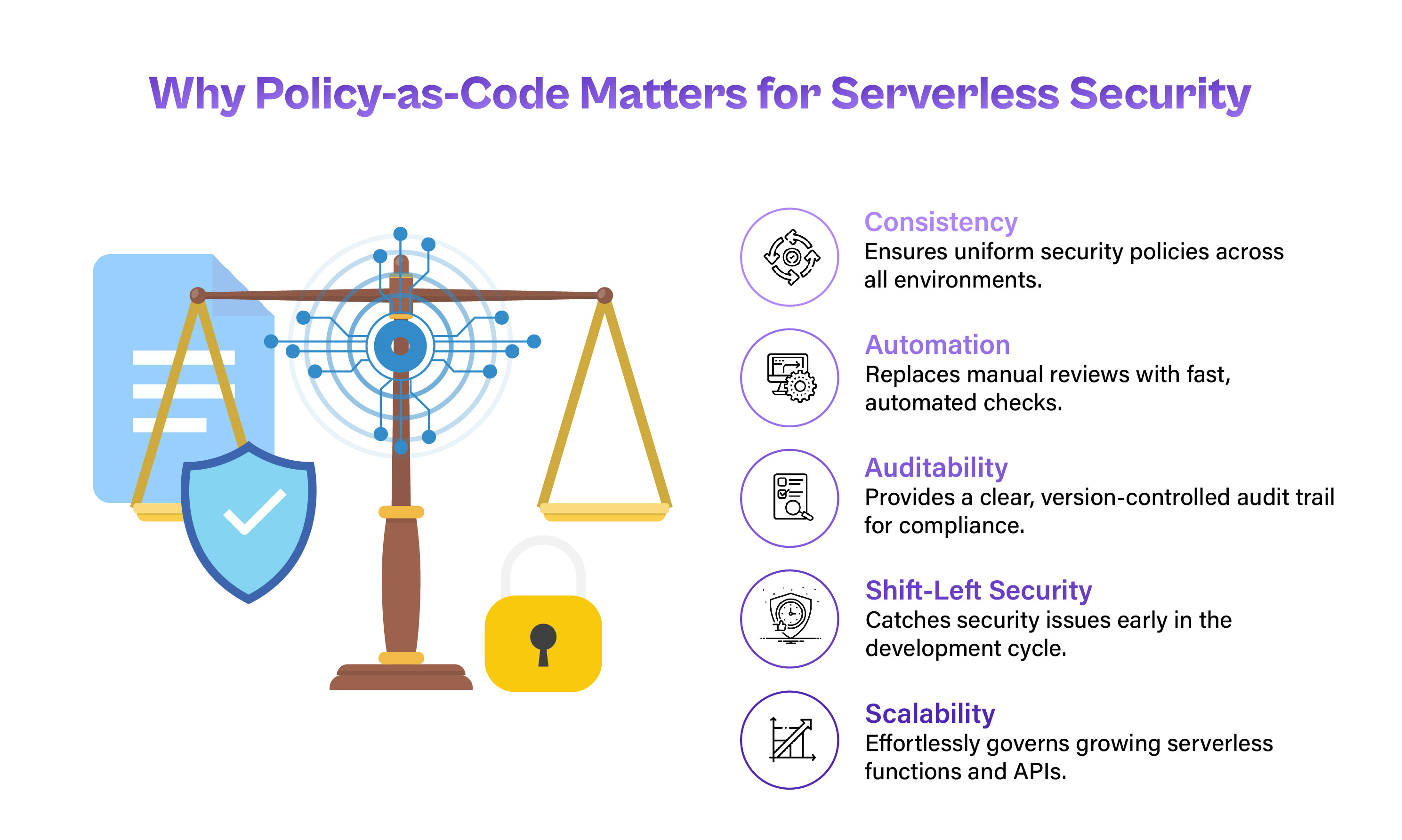
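To show what a pipeline policy check does, the sketch below evaluates a deployment manifest against three rules and returns an exit code a CI step could use to block a release. In practice these rules would more likely be written in Rego and run with OPA or Conftest; Python is used here only to illustrate the logic. The manifest shape, the field names, and the 30-second limit are hypothetical.

```python
from typing import Dict, List

MAX_TIMEOUT_SECONDS = 30  # assumed organizational limit


def evaluate(manifest: Dict) -> List[str]:
    """Return one readable violation per broken rule; an empty list means pass."""
    violations: List[str] = []
    for name, spec in sorted(manifest.get("functions", {}).items()):
        # Rule 1: no wildcard IAM actions (least privilege).
        for action in spec.get("iam_actions", []):
            if "*" in action:
                violations.append(f"{name}: wildcard IAM action '{action}'")
        # Rule 2: every HTTP route must declare an authorizer.
        for route in spec.get("http_routes", []):
            if not route.get("authorizer"):
                violations.append(f"{name}: route '{route.get('path')}' has no authorizer")
        # Rule 3: bounded timeouts limit the impact of resource abuse.
        if spec.get("timeout_seconds", 0) > MAX_TIMEOUT_SECONDS:
            violations.append(f"{name}: timeout exceeds {MAX_TIMEOUT_SECONDS}s")
    return violations


def ci_gate(manifest: Dict) -> int:
    """Exit code for a pipeline step: 0 allows the deploy, 1 blocks it."""
    problems = evaluate(manifest)
    for problem in problems:
        print(f"POLICY VIOLATION: {problem}")
    return 1 if problems else 0
```

Because the rules live in code, they can be reviewed, unit-tested, and versioned alongside the functions they govern.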

Key Benefits of Policy-as-Code for Serverless Security

Policy-as-Code provides several key advantages that align with the principles of Zero Trust and modern DevSecOps practices.

- Consistency: Enforces uniform policy application across development, staging, and production environments, reducing misconfiguration risks.

- Automation: Replaces manual reviews with automated checks and runtime enforcement, improving efficiency and reducing the potential for human error.

- Auditability: Policies stored as code provide a clear audit trail for compliance purposes. Changes can be reviewed, tested, and rolled back as needed.

- Shift-Left Security: By integrating policy validation into the early stages of development, security concerns are addressed proactively, before they become production incidents.

- Scalability: As serverless applications grow, PaC provides a scalable way to govern a large number of functions and APIs without centralized bottlenecks.

Common Use Cases

- IAM Policy Enforcement: Automatically block deployments that request overly permissive roles or cross-boundary access.

- API Governance: Enforce authentication rules, limit public endpoints, and ensure input conforms to expected schemas.

- Data Loss Prevention (DLP): Prevent functions from transmitting sensitive data to unapproved external services.

- Compliance Checks: Ensure data is encrypted at rest, sensitive operations are logged, and retention policies are properly configured.

Policy-as-Code is a cornerstone for developing secure, scalable, and agile serverless applications. By integrating policy enforcement directly into the lifecycle of functions and APIs, organizations can uphold zero-trust principles while maintaining the pace of innovation. This approach shifts security from a reactive measure to a proactive, continuous safeguard, which is essential for protecting ephemeral, event-driven, serverless environments.

Conclusion

Serverless computing has redefined how modern applications are built and scaled, offering unparalleled agility, cost-efficiency, and speed. However, this paradigm also introduces unique security challenges that traditional models weren't designed to handle. Organizations must adopt new strategies that match this dynamic environment as functions spin up and down on demand and infrastructure becomes abstracted.

Zero Trust offers a proven framework for navigating this complexity. By applying its core tenet, "never trust, always verify," organizations can build serverless systems that are both resilient and secure. Implementing strict IAM policies with least privilege access, embedding runtime protection through RASP, enabling observability for ephemeral workloads, and adopting Policy-as-Code are all essential pillars of this approach.

Together, these practices create an end-to-end security posture that minimizes risk and supports innovation at scale. Security is no longer a bottleneck; it has become an integrated, automated, and continuous process.

As serverless architectures continue to evolve, so too must our security mindset. Zero Trust is not a one-time implementation but a cultural shift: one that places security at the core of every function, API, and deployment pipeline.

Secure your serverless future with Cogent Infotech.

Backed by 21 years of experience and 10k+ completed projects, our cloud-security specialists design and implement Zero-Trust architectures, combining granular IAM, RASP, policy-as-code, and real-time observability, to protect your serverless workloads without sacrificing agility.

