Social Engineering 2.0: Combating Human-Focused Cyber Threats in 2025

Phishing just leveled up. In 2025, AI-powered phishing attacks spiked by 49%, according to Hoxhunt. But this surge isn’t just about volume; it marks the rise of Social Engineering 2.0, where attackers use intelligent, emotionally tuned tactics to manipulate at scale. Welcome to the next frontier of cyber deception.

What Is Social Engineering 2.0?

Social Engineering 2.0 refers to the evolution of traditional psychological manipulation tactics such as phishing, pretexting, and baiting into AI-powered, multimodal, real-time, and adaptive attack vectors. Cybercriminals are no longer limited to static emails or scripted phone calls. Today’s attackers harness LLM jailbreaks, deepfake impersonation, and behavioral analytics to engineer trust and exploit vulnerabilities with uncanny precision.

Traditional phishing leaned on urgency and was often given away by typos. Social engineering in 2025 is a human-focused threat: it knows your job title, meeting schedule, and writing tone. It can impersonate your boss on Zoom, craft a voice note from HR, or drop a message in your company Slack channel.

What Makes These Attacks Different?

1. Multimodal Delivery

Social Engineering 2025 isn’t restricted to email. It uses audio, video, text, and manipulated images or documents across multiple channels, such as email, Teams, WhatsApp, SMS, Slack, and LinkedIn DMs. Attackers orchestrate multi-platform deception campaigns that feel authentic because they mimic your organization’s actual communication style.

2. Real-Time Exploitation

AI-powered phishing attacks respond dynamically. Imagine receiving an email about an ongoing support ticket and a Teams message from “IT” within minutes. Real-time APIs and LLM jailbreaks enable attackers to interact conversationally, update their message context, and steer victims through multi-step exploits.

3. Adaptive Personalization

Attackers use AI to scrape public data, mimic real interactions, and deploy deepfake impersonation, escalating across channels the way a savvy sales rep would. Defenders must rethink training and detection. Static playbooks fail; in this AI-driven threat landscape, adaptability is survival.

Phishing Evolves Beyond the Inbox: Welcome to the Age of Adaptive Deception

Phishing has gone omnichannel, leaving inboxes for Slack, Zoom, and Teams. Powered by LLMs, attackers now deliver real-time, hyper-personalized traps through the same tools your team uses daily. Smarter, faster, and harder to spot, this is the new face of social engineering in 2025. The key multimodal phishing vectors are broken down below.

Dynamic Spear-Phishing Using LLM-Generated Copy

Dynamic spear-phishing is a new breed of personalized phishing where attackers use LLMs to create highly customized messages in real time. Unlike traditional spammy phishing, these emails or messages are:

- Tailored to the individual (using scraped public data),

- Context-aware (mentioning recent events, roles, relationships),

- Grammatically flawless and highly persuasive.

This new class of attacks mimics human behavior, adapts in real time, and spreads across channels like Slack, SMS, LinkedIn, and email, making them incredibly difficult to detect with legacy systems.

In one case documented by security firm SlashNext, an attacker used WormGPT to impersonate a CFO and send a wire transfer request to a tech CEO. The message was grammatically flawless, used the company’s tone, and referenced an actual vendor and upcoming renewal. The only giveaway? A slight domain spoof: @cornpany.com instead of @company.com.
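The "rn" for "m" swap in that example is the kind of spoof a simple normalization check can catch. The sketch below is illustrative only: the `CONFUSABLES` table, the function names, and the trusted-domain set are assumptions made for this example, and a real deployment would need a much larger confusables list plus Unicode homoglyph handling.

```python
# Hypothetical lookalike sequences attackers substitute for real letters.
# This table is deliberately tiny and not exhaustive.
CONFUSABLES = {"rn": "m", "vv": "w", "0": "o", "1": "l"}


def normalize(domain: str) -> str:
    """Collapse lookalike sequences so 'cornpany.com' becomes 'company.com'."""
    d = domain.lower()
    for fake, real in CONFUSABLES.items():
        d = d.replace(fake, real)
    return d


def is_lookalike(sender: str, trusted_domains: set[str]) -> bool:
    """Return True when the sender's domain is NOT trusted but
    normalizes to the same string as a trusted domain."""
    domain = sender.rsplit("@", 1)[-1].lower()
    if domain in trusted_domains:
        return False  # exact match: the genuine domain
    trusted_normalized = {normalize(t) for t in trusted_domains}
    return normalize(domain) in trusted_normalized


if __name__ == "__main__":
    trusted = {"company.com"}
    print(is_lookalike("cfo@cornpany.com", trusted))
    print(is_lookalike("cfo@company.com", trusted))
```

A check like this only covers visual substitutions; pairing it with an edit-distance threshold and domain-age lookups would likely catch more variants.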

Slack / Teams / Collaboration Tool Phishing

Attackers create lookalike accounts with display names like okta_support or HR-onboarding, or spoof internal team handles outright. They inject messages into shared external channels (for vendors and partners) or use compromised credentials to send direct messages. Messages reference real project names or use Slack emojis and tags to look authentic.

These attacks work because collaboration tools feel “internal,” which lowers skepticism, and because notifications arrive in real time, often interrupting people in the middle of high-priority tasks.

SMS and Messaging App Lures (Smishing & Vishing)

Smishing (SMS phishing) and vishing (voice phishing) have become increasingly sophisticated, using urgency, branding, and emotional triggers to deceive victims. Messages like “Payment declined, update info here” or “FedEx delivery failed” lure users into clicking malicious links.

AI has amplified these attacks through lifelike voicebots that impersonate IT staff or finance officers, making vishing more convincing.

$49K Lost at JFK

While traveling, Baltimore resident Alex Nemirovsky received a text from what appeared to be his bank. Anxious about his international flight, he clicked the link and unknowingly entered his credentials on a fake site. $49,000 was drained from his account. His bank later denied the fraud claim.

This incident highlights how attackers exploit timing, trust, and urgency, turning ordinary messages into costly traps.

Browser Pop-Over & SaaS Session Spoofing

Browser Pop-Over Attacks mimic trusted SaaS logins (like Microsoft 365 or Google Workspace) using deceptive overlays on compromised websites. When users enter credentials, attackers steal them instantly. SaaS Session Spoofing takes this further, capturing session tokens to hijack active logins without needing passwords.

In 2023, the EvilProxy phishing-as-a-service toolkit targeted executives with adversary-in-the-middle (AiTM) attacks that relay the real login page through a reverse proxy. Victims received fake login links, and once they entered their credentials and completed MFA, attackers captured the resulting session cookies in real time. Microsoft and Proofpoint later issued warnings after multiple high-level Microsoft 365 accounts were breached, with many of the attacks targeting payroll and HR teams.
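One partial mitigation for stolen session cookies is to bind each session to the context it was issued in and force re-authentication when that context changes. The sketch below is a minimal illustration, not any vendor's implementation: `SessionContext`, `SessionMonitor`, and the choice of IP prefix plus user agent as the binding are all assumptions. It also has a known limit: if the login itself passed through the attacker's proxy, the session is bound to the proxy's context. Origin-bound authenticators such as FIDO2/WebAuthn keys address that case more directly.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SessionContext:
    """Hypothetical binding: coarse network location plus client software."""
    ip_prefix: str   # e.g. the first three octets of the client IP
    user_agent: str


class SessionMonitor:
    """Remembers the context each session token was issued in."""

    def __init__(self) -> None:
        self._bound: dict[str, SessionContext] = {}

    def register(self, token: str, ctx: SessionContext) -> None:
        """Record the context seen at login time."""
        self._bound[token] = ctx

    def check(self, token: str, ctx: SessionContext) -> str:
        """Return 'ok', 'reauthenticate', or 'unknown' for a request."""
        bound = self._bound.get(token)
        if bound is None:
            return "unknown"
        # Any change in context is treated as a possible replayed cookie.
        return "ok" if bound == ctx else "reauthenticate"


if __name__ == "__main__":
    monitor = SessionMonitor()
    login_ctx = SessionContext("203.0.113", "Mozilla/5.0")
    monitor.register("token-abc", login_ctx)
    print(monitor.check("token-abc", login_ctx))
    print(monitor.check("token-abc", SessionContext("198.51.100", "curl/8.0")))
```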

Customer-Service Chat Widget Phishing

Attackers embed fake customer service chat widgets on compromised or cloned websites. These look like legitimate support tools from banks, e-commerce, or SaaS providers. Users are tricked into sharing login credentials, card details, or OTPs when they interact, thinking they’re chatting with real support.

QR Code Phishing (Quishing)

Attackers embed malicious links in QR codes placed in emails, PDF attachments, printed posters, or fake parking and payment notices. Scanning the code typically opens a credential-harvesting page on the victim’s phone, a device that often sits outside corporate email filtering and endpoint protection. Because the destination URL is hidden inside an image, many legacy link scanners never inspect it.

Deepfake Impersonation & Clone-as-a-Service

In early 2024, a Hong Kong-based multinational lost $25 million in a heist straight out of a spy thriller. This wasn’t malware, phishing, or ransomware; it was deepfake impersonation on a video call featuring a digitally cloned CFO. According to public reports, the attack unfolded roughly as follows:

- A finance executive received a video call that appeared to be from the company’s UK-based CFO.

- The voice, face, background, and conversation all felt familiar and natural.

- The request? An urgent funds transfer to a third-party vendor.

- The executive complied, only to find out later that the entire video call was AI-generated.

What’s terrifying is that the attackers used publicly available video footage (conference keynotes, YouTube interviews, LinkedIn content) and off-the-shelf tools to clone the CFO’s appearance and voice with stunning accuracy.

Clone-as-a-Service (CaaS): Deepfakes for Hire

Today, threat actors no longer need high-level AI skills to build convincing fakes. With Clone-as-a-Service offerings on the dark web, they can:

- Generate live-synced avatars using someone’s voiceprint and video sample

- Deploy them over Zoom, Teams, Google Meet, etc.

- Pay as little as $300–$500 for live impersonation sessions

- Use real-time translation to fake multilingual executives

DarkOwl and Flashpoint have reported Clone-as-a-Service marketplaces bundling deepfakes with custom scripts and social engineering playbooks, essentially turnkey scams for high-reward targets.

Legal Landscape: “Take It Down Act” and Beyond

The “Take It Down Act” (passed May 2025) is the U.S.’s most aggressive step yet to combat malicious deepfakes. Key features:

- Platforms must remove non-consensual intimate images and deepfakes within 48 hours of a verified request

- Criminal penalties apply for knowingly publishing non-consensual intimate imagery, including AI-generated deepfakes

- Includes protections for minors and public figures

But limitations exist:

- Enforcement is reactive

- Jurisdiction stops at U.S. borders

- Corporate misuse isn't fully covered, especially in internal fraud scenarios

- Several EU states have passed similar laws, but global consistency is lacking.

From a business point of view, there is no standard deepfake verification for HR and finance workflows. Most organizations train staff to verify emails, enforce 2FA on vendor portals, and use identity verification for customers. However, most do not have:

- Video call verification protocols

- Known phrase or keyword authentication

- Internal liveness checks before approvals

- Pre-verified approval trees for video/voice instructions

- Staff training on deepfake spotting (lip sync, latency cues, uncanny pauses)

For instance, an HR manager receives a Teams video call from someone appearing to be the VP of Talent. The VP instructs them to onboard a new hire ASAP, with account access and payroll set up. No system verifies whether that call is legitimate; the process runs on trust alone.

Jailbreaking LLMs & the Rise of Dark-Model Marketplaces

In 2025, the frontline of cybercrime isn’t malware, it’s manipulated AI. LLMs like GPT, Claude, Gemini, and open-source variants are increasingly used in business email compromise, phishing, fraud, and impersonation. Despite safety guardrails, cybercriminals are now bypassing them at scale through jailbreaking and, in some cases, skipping mainstream models altogether to use black-hat versions.

A 2025 study from Ben-Gurion University introduced a universal jailbreak framework, a method that works across nearly all LLMs. The study reports that about 90% of models tested, including GPT-4, Claude, and LLaMA derivatives, were successfully jailbroken using context injection and adversarial formatting.

WormGPT, FraudGPT, and the “Dark LLM” Economy

WormGPT and FraudGPT are uncensored AI models sold on dark web forums that assist in cybercrime like phishing, BEC, and fraud. Unlike safe LLMs, they lack ethical guardrails, making them central to the “Dark LLM” economy. These tools let even amateurs create convincing, multilingual attacks that easily mimic CEOs, vendors, or legal teams, bypassing traditional security filters.

Psychological Exploits: From Authority Bias to Deep Nostalgia

In 2025, cyberattacks target emotion, not just infrastructure. AI-driven threat actors use breached data and behavioral insights to craft lures that feel deeply personal. They exploit cognitive biases like authority (“This is your CFO…”), scarcity (“Offer ends in 2 hours”), and nostalgia (“Remember your old yearbook?”). It’s not about tricking users, it’s about triggering them. Security now means defending the human mind, not just the network.
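Cues like authority and scarcity leave textual fingerprints, so even a crude pattern match can show what a first-pass filter might look for. The sketch below is an assumption-laden illustration: the `CUES` patterns and the `manipulation_cues` function are invented for this example, and keyword matching is far weaker than a trained classifier. It is easy to evade and prone to false positives.

```python
import re

# Hypothetical cue patterns; real detectors would use far richer signals.
CUES = {
    "authority": re.compile(r"\b(ceo|cfo|director|legal|hr)\b", re.I),
    "scarcity": re.compile(r"\b(ends in|expires|last chance)\b", re.I),
    "urgency": re.compile(r"\b(urgent|immediately|asap|right now)\b", re.I),
}


def manipulation_cues(message: str) -> list[str]:
    """Return the names of the cue categories found in the message,
    in the order they are defined in CUES."""
    return [name for name, pattern in CUES.items() if pattern.search(message)]


if __name__ == "__main__":
    print(manipulation_cues("This is your CFO. Wire the funds immediately."))
    print(manipulation_cues("Offer ends in 2 hours"))
```

Messages that trigger several categories at once (the "urgency stacking" pattern) are reasonable candidates for a warning banner or a hold for review.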

Emerging Attack Vectors in 2025

- Voice Clones in IVR Systems

Attackers now use AI-generated voices to impersonate CEOs or HR reps in phone verifications, job callbacks, and insurance lines. In one case, a deepfaked HR call led to a W-2 breach affecting 2,000+ employees.

- Social Engineering 2025 in VR/AR

On platforms like Microsoft Mesh or Meta Horizon, attackers use lifelike avatars to pose as executives or trainers, exploiting trust through simulated eye contact and gestures. No standards yet exist for verifying avatar identities or detecting behavioral anomalies.

- Malicious Browser Extensions

Disguised as productivity tools, some extensions intercept emails, auto-generate replies, and redirect users to credential-stealing sites, mimicking the tone so well that even coworkers overlook the breach.

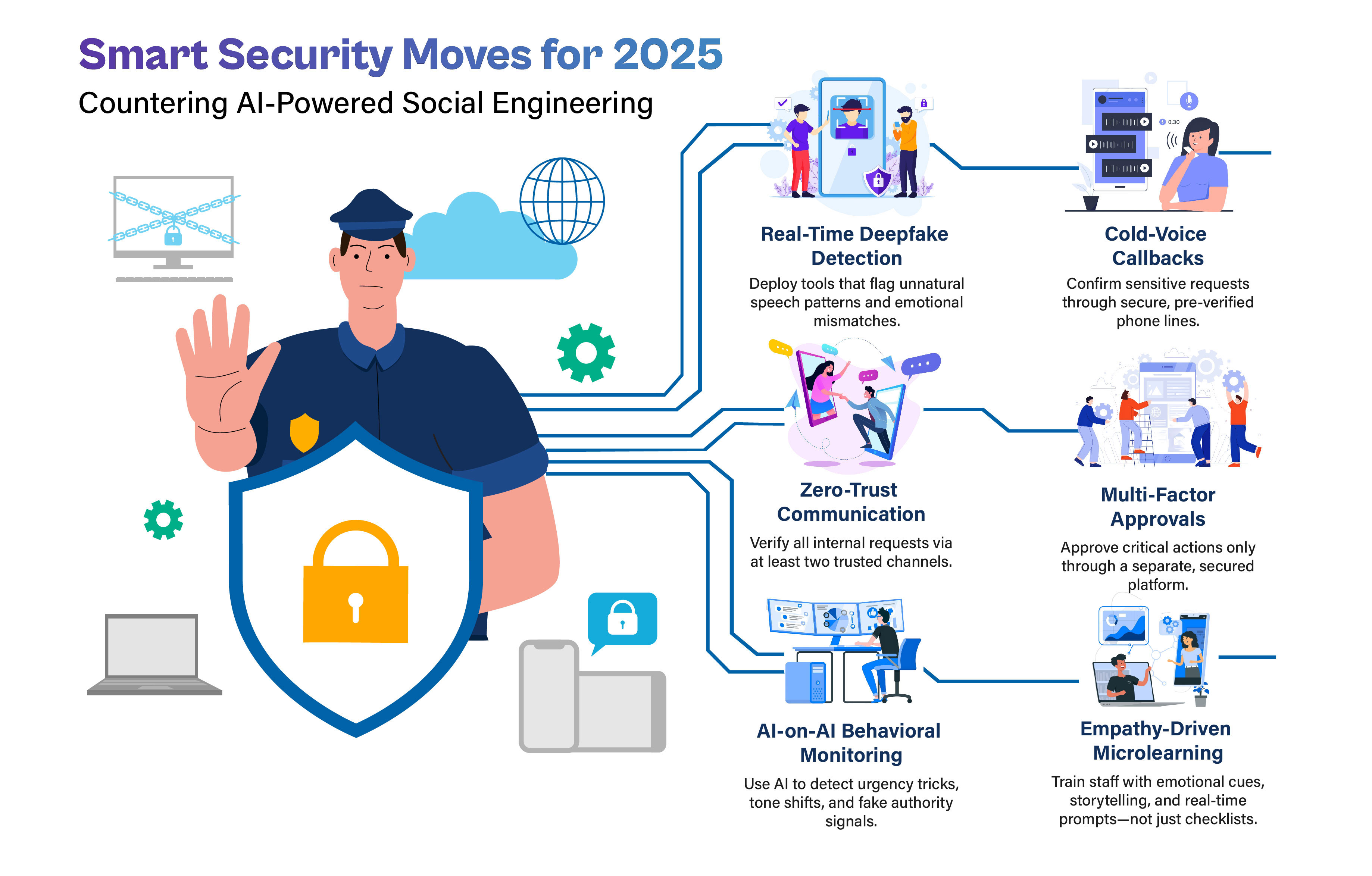

Defense Playbook for 2025

Organizations must adopt smarter, emotionally aware defenses to stay ahead of AI-powered social engineering in 2025. Here are the key strategies:

- Real-time Deepfake Detection: Use tools that flag audio anomalies and emotional mismatches in voice.

- Cold-Voice Callbacks: Verify high-risk requests via secure, pre-approved phone numbers.

- Zero-Trust Communication: Never act on Slack, Zoom, or voice requests without multichannel verification.

- Multi-Factor Approvals: Require approvals for high-value actions through a separate secure platform.

- AI-on-AI Behavioral Monitoring: Detect manipulation tactics like urgency stacking or fake authority cues.

- Empathy-Driven Microlearning: Replace checklists with storytelling, emotional cues, and real-time reminders during risky actions.
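The callback, zero-trust, and multi-factor approval items above share one rule: a high-value request should not be executed on the strength of the channel it arrived on. A minimal sketch of that rule follows. The `Request` class, the `HIGH_VALUE_THRESHOLD` figure, and the channel names are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical policy threshold; each organization would set its own.
HIGH_VALUE_THRESHOLD = 10_000


@dataclass
class Request:
    requester: str
    amount: float
    origin_channel: str                      # where the request arrived
    verified_channels: set[str] = field(default_factory=set)

    def record_verification(self, channel: str) -> None:
        """Note that the request was confirmed over the given channel."""
        self.verified_channels.add(channel)


def may_execute(req: Request) -> bool:
    """Low-value requests pass; high-value ones need at least one
    confirmation over a channel other than the one they arrived on."""
    if req.amount < HIGH_VALUE_THRESHOLD:
        return True
    independent = req.verified_channels - {req.origin_channel}
    return len(independent) >= 1


if __name__ == "__main__":
    req = Request("cfo", 250_000, origin_channel="zoom")
    print(may_execute(req))                  # no verification yet
    req.record_verification("callback_phone")
    print(may_execute(req))                  # confirmed out of band
```

Confirming on the originating channel deliberately does not count, since a deepfaked call can "confirm" itself as often as the attacker likes.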

Metrics & KPIs

To measure the real impact of your human-layer defenses, start by tracking Mean Time-to-Verdict (MTTV): how quickly staff recognize and report emotionally manipulative cues, and how quickly your systems flag them. Want to know if your training is working? Watch the click-rate reduction after adaptive training. Run personalized phishing simulations and observe how behavior improves with each round. And don’t stop there. Implement behavioral drift detection to monitor whether team members change how they respond, engage, or hesitate after targeted attacks. Subtle shifts in interaction patterns can be early signals of psychological compromise. Ready to see where your team stands? Let’s measure.
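Both headline metrics reduce to simple arithmetic once the events are logged. The sketch below assumes MTTV is measured from message delivery to the user's report and that click-rate reduction is the relative drop between two simulation rounds. Both definitions, and the function names, are assumptions for this example rather than an industry standard.

```python
from datetime import datetime
from statistics import mean


def mean_time_to_verdict(events: list[tuple[datetime, datetime]]) -> float:
    """Average minutes between delivery and report.
    Each event is a (delivered_at, reported_at) pair."""
    return mean(
        (reported - delivered).total_seconds() / 60
        for delivered, reported in events
    )


def click_rate_reduction(before_clicks: int, before_sent: int,
                         after_clicks: int, after_sent: int) -> float:
    """Relative drop in click rate between two simulation rounds."""
    before = before_clicks / before_sent
    after = after_clicks / after_sent
    if before == 0:
        raise ValueError("baseline click rate is zero; reduction is undefined")
    return (before - after) / before


if __name__ == "__main__":
    events = [
        (datetime(2025, 1, 1, 9, 0), datetime(2025, 1, 1, 9, 10)),
        (datetime(2025, 1, 1, 10, 0), datetime(2025, 1, 1, 10, 30)),
    ]
    print(mean_time_to_verdict(events))           # minutes
    print(click_rate_reduction(30, 100, 12, 100))  # fraction of baseline
```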

Conclusion: Preparing for 2026 and Beyond

As AI-driven manipulation evolves, expect a convergence of threats across voice assistants, immersive platforms, and autonomous agents. The line between human and synthetic interaction will blur. Security teams must think beyond firewalls, toward cognitive and emotional resilience.

Key imperatives:

- Anticipate attacks via IoT voice interfaces and XR environments.

- Harden defenses against autonomous AI agents that learn, adapt, and persuade.

- Invest in behavioral, biometric, and emotional anomaly detection.

- Build playbooks for synthetic threats before they hit production.

In 2026, trust becomes the new attack surface. Prepare accordingly.

Build a human firewall, secure your organization with cyber-savvy talent from Cogent Infotech.

Schedule a free security-staffing consult today.
