Beyond the Crystal Ball: AI In Forecasting for Smarter Operations

According to a Gartner report, about 70% of enterprises see significant operational gains from integrating machine learning forecasting models into real-time workflows. A powerful example of this shift is unfolding at Frankfurt Airport. Lufthansa and airport operator Fraport, through their tech arm zeroG, have deployed an operational AI camera system known as “seer”. This computer vision solution continuously monitors aircraft turnaround operations, from bridge docking to baggage handling and refueling. “Seer” timestamps every step, delivers real-time visibility across all ground handling teams, and helps optimize processes on the fly. As Jens Ritter, CEO of Lufthansa Airlines, notes, “Transparent ground processes enable us to further improve our punctuality and service quality.”

This use case embodies operational AI at its core, a transformation from fixed, delay-prone procedures to fluid, data-informed orchestration. In the minutes when aircraft are not in the air lies a massive margin for efficiency. Real-time analytics, in this context, becomes prescriptive, connecting data streams across functions in real-time to reduce delays, resource waste, and even emissions.

This article examines the evolutionary arc of AI in forecasting as it becomes an operational imperative. Scalable neural networks, real-time analytics, and streaming pipelines, along with cloud-native BI tools, are redefining forecasting paradigms. Static, rule-based logic is being replaced by adaptive, high-dimensional machine learning models. Streaming analytics, using platforms like Kafka and Azure Stream Analytics, now enable decisions at the speed of operations. The impact extends across supply chain resilience, workforce optimization, dynamic pricing, and energy grid balancing. Tools such as Facebook Prophet, DeepAR, BigQuery ML, and AI-enabled BI stacks like Power BI with Azure ML are driving these advances. However, business-critical use also brings risks. Overfitting, model drift, and data bias require strict governance to ensure accuracy, reliability, and trust.

Our goal is to provide data scientists, CIOs, and digital operations teams with a blueprint for embedding time series prediction and machine learning forecasting models as a real-time engine in their operational planning and execution frameworks.

From Rules to Neural Networks

In the pre-AI era, forecasting largely relied on time series prediction models like ARIMA (AutoRegressive Integrated Moving Average) and exponential smoothing, which assume a relatively stable underlying data-generating process. For example, ARIMA models fit past data using autoregressive terms, differencing, and moving averages. Once the series has been differenced to remove trend, the model is expressed as:

yt = c + ϕ1yt−1 + ϕ2yt−2 + ... + ϕpyt−p + θ1ϵt−1 + ... + θqϵt−q + ϵt

where yt is the value at time t, ϕ are autoregressive coefficients, θ are moving average coefficients, and ϵ is white noise.
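As a minimal sketch, the recurrence can be evaluated directly for a one-step-ahead forecast. The function name `arma_one_step` and the coefficient values below are illustrative assumptions, not fitted to any real data; in practice the coefficients would come from a fitting routine.

```python
def arma_one_step(history, residuals, c, phi, theta):
    """One-step-ahead forecast from the autoregressive and moving average terms.

    history:   past values, most recent last (history[-1] is y at t-1)
    residuals: past one-step forecast errors, most recent last
    phi/theta: AR and MA coefficients, ordered from lag 1 upward
    """
    ar_part = sum(p * history[-(i + 1)] for i, p in enumerate(phi))
    ma_part = sum(q * residuals[-(i + 1)] for i, q in enumerate(theta))
    # The current shock has zero mean, so it drops out of the point forecast.
    return c + ar_part + ma_part


# Hand-picked, hypothetical coefficients and observations.
forecast = arma_one_step(
    history=[100.0, 104.0, 103.0],
    residuals=[0.5, -1.0],
    c=10.0,
    phi=[0.6, 0.3],
    theta=[0.4],
)
```

Because the coefficients are fixed once fitted, the forecast reacts to new behavior only through the most recent lags, which is the rigidity the next paragraph describes.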

While effective for stationary time series, these models struggle when markets are volatile, data relationships are nonlinear, or input sources are heterogeneous. Consider a retail chain predicting holiday demand: if consumer behavior suddenly shifts due to viral social media trends or geopolitical events, a static ARIMA model often misfires because it lacks adaptive learning mechanisms.

Exponential smoothing methods, like Holt-Winters, apply weighted averages where recent observations have exponentially greater influence. While robust for stationary or slowly changing systems, these models falter in high-dimensional, non-stationary environments typical of modern operations. Markets, supply chains, and transportation networks now operate under conditions where seasonality shifts mid-year, exogenous shocks cascade in hours, and complex interdependencies render linear assumptions inadequate. For example, predicting airline gate turnaround times during a snowstorm while also accounting for crew schedules, inbound delays, weather radar feeds, and real-time baggage handling status creates a data landscape that ARIMA simply cannot handle without massive feature engineering and repeated manual recalibration.
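To make the weighting idea concrete, here is a minimal sketch of simple exponential smoothing, the building block that Holt-Winters extends with trend and seasonal components. The function name and the sample values are assumptions for illustration.

```python
def simple_exponential_smoothing(series, alpha):
    """Return the smoothed level after each observation.

    alpha in (0, 1]: higher values weight recent observations more heavily.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = series[0]  # initialise the level with the first observation
    levels = [level]
    for y in series[1:]:
        # New level is a weighted average of the latest value and the old level.
        level = alpha * y + (1 - alpha) * level
        levels.append(level)
    return levels


demand = [10.0, 12.0, 11.0, 15.0]  # made-up values
levels = simple_exponential_smoothing(demand, alpha=0.5)
next_forecast = levels[-1]  # flat one-step-ahead forecast
```

With a single smoothing parameter and no external inputs, the method has no way to use signals such as weather feeds or crew schedules, which is the limitation discussed above.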

The operational AI revolution in forecasting replaces this static, rule-driven logic with adaptive, nonlinear models that can learn from vast, multi-source datasets in real-time. Neural network architectures, particularly Recurrent Neural Networks (RNNs), Long Short-Term Memory networks (LSTMs), and Transformers, excel at capturing temporal dependencies, nonlinear interactions, and latent patterns that classical models overlook. LSTMs, for instance, introduce gating mechanisms (input, forget, output) to selectively retain or discard information across long sequences, mitigating the vanishing gradient problem inherent in vanilla RNNs. Mathematically, the LSTM cell state update is expressed as:

Ct = ft ⊙ Ct−1 + it ⊙ C̃t

Where ft is the forget gate, it is the input gate, and C̃t is the candidate state. This mechanism enables models to simultaneously account for long-term seasonal patterns (e.g., annual demand spikes) and short-term anomalies (e.g., a one-day weather-driven surge). Transformers push scalability further by replacing recurrent loops with parallelizable self-attention mechanisms, allowing the model to weigh and relate every time step to every other. This is critical for high-frequency, multivariate forecasting in environments with thousands of signals per second.
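The cell state update can be sketched element by element in plain Python. This is a simplification under stated assumptions: in a real LSTM the gate pre-activations are computed from the current input and previous hidden state with learned weights, whereas here they are passed in directly as hypothetical arguments.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def cell_state_update(c_prev, forget_logits, input_logits, candidate_logits):
    """Element-wise new cell state: forget gate * old state + input gate * candidate."""
    c_next = []
    for c, f_raw, i_raw, g_raw in zip(c_prev, forget_logits, input_logits, candidate_logits):
        f = sigmoid(f_raw)    # forget gate, in (0, 1)
        i = sigmoid(i_raw)    # input gate, in (0, 1)
        g = math.tanh(g_raw)  # candidate state, in (-1, 1)
        c_next.append(f * c + i * g)
    return c_next


# A strongly positive forget logit and a strongly negative input logit
# carry the old state forward almost unchanged.
retained = cell_state_update([2.0], [50.0], [-50.0], [1.0])
```

When the forget gate saturates near 1 and the input gate near 0, the state passes through unchanged, which is how long-term seasonal information can survive many time steps.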

In practice, these time series prediction models are fed heterogeneous, high-velocity data streams: IoT sensor telemetry, transactional logs, third-party APIs, satellite imagery, and even NLP-processed maintenance reports. The feature space can easily reach tens of thousands of variables, making manual feature crafting infeasible. Neural networks implicitly learn representations that capture interactions, such as how humidity, tarmac temperature, and staffing density together influence aircraft pushback time.

A case in point is Amazon, which has transitioned from classical demand forecasting to advanced neural architectures across its global supply chain. Amazon’s models incorporate billions of data points daily, from product page clicks to fulfillment center sensor data, and feed them into LSTMs and Transformer-based architectures deployed on AWS SageMaker. The output forecasts are updated in near real-time, influencing inventory distribution, dynamic pricing, and even robotic picking sequences. For example, during Prime Day, these real-time analytics models not only anticipate demand spikes for specific SKUs but also adjust replenishment schedules at regional warehouses based on weather forecasts, local purchasing power indexes, and competitor pricing trends detected via web scraping. This neural forecasting ecosystem has reportedly reduced stockouts by double-digit percentages and cut excess inventory costs by hundreds of millions of dollars annually, results that no ARIMA configuration could match in scope or speed.

The shift from ARIMA’s equation-driven simplicity to LSTM or Transformer-based architectures mirrors the broader shift from rule-based systems to learning systems. The core advantage lies in adaptability and scalability: Operational AI models ingest more data, update continuously, and detect patterns invisible to human analysts or linear models. For industries where minutes of delay can translate into millions in losses or where energy demand spikes can destabilize grids, this transition is not just technological evolution, but operational survival.

Streaming Data and Real-Time Predictions

In the modern operational stack, batch-based forecasting pipelines are no longer enough for businesses whose decisions are measured in milliseconds. The shift toward streaming architectures has brought platforms like Apache Kafka, Azure Stream Analytics, and Google Cloud Dataflow to the forefront. These systems are designed to ingest high-volume event streams (sales transactions, sensor readings, social media sentiment, IoT telemetry) at massive scale while preserving ordering, fault tolerance, and replayability. Kafka, for instance, decouples producers and consumers through its log-based architecture, enabling multiple downstream services (forecasting models, anomaly detection engines, dashboards) to subscribe without bottlenecks. Azure Stream Analytics and Google Cloud Dataflow enable serverless processing and windowed aggregations, allowing for continuous feature extraction and inference without complex infrastructure overhead.

The real breakthrough occurs when these streaming layers are coupled with machine learning forecasting models that continually learn. Traditional retraining cycles, such as weekly, monthly, or quarterly, introduce lag. Continuous learning pipelines update model parameters or recalibrate predictions in near real-time as new data points arrive. This can be done incrementally via online learning algorithms (e.g., stochastic gradient descent on streaming data) or by dynamically adjusting model weights in recurrent architectures like LSTMs without full retraining. The forecasts thus generated reflect the shifting trends almost as quickly as they emerge, making them resilient against sudden market shocks, weather anomalies, or unexpected supply chain disruptions.
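A minimal sketch of the online learning idea follows: a linear model that takes one stochastic gradient step per incoming observation instead of waiting for a scheduled retrain. The class name, learning rate, and synthetic stream are assumptions for illustration; a production system would use a richer model and real event data.

```python
class OnlineLinearForecaster:
    """Linear model updated one observation at a time with SGD."""

    def __init__(self, n_features, learning_rate=0.01):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, x):
        return self.bias + sum(w * xi for w, xi in zip(self.weights, x))

    def update(self, x, y):
        """Take one gradient step on squared error for a single (x, y) pair."""
        error = self.predict(x) - y
        self.weights = [
            w - self.learning_rate * error * xi for w, xi in zip(self.weights, x)
        ]
        self.bias -= self.learning_rate * error
        return error


model = OnlineLinearForecaster(n_features=1, learning_rate=0.05)
# Simulated stream following y = 2x + 1 (synthetic, noise-free data).
for step in range(5000):
    x = [(step % 10) / 10.0]
    model.update(x, 2.0 * x[0] + 1.0)
```

Each update costs a handful of multiplications, so the model can keep pace with the stream; the trade-off is sensitivity to the learning rate and to noisy individual events.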

From an operational standpoint, the impact is profound. Reduced latency in decision-making transforms both tactical and strategic workflows. A logistics operator can reroute trucks mid-transit if real-time analytics predict sudden bottlenecks in specific distribution hubs. Retailers can adjust digital shelf placements during a flash sale based on live demand spikes. Anomaly detection, embedded within these streaming pipelines, further ensures that unexpected deviations such as fraudulent transactions, sudden equipment malfunctions, or misreported inventory counts are flagged and addressed instantly. In mission-critical environments, every millisecond shaved off the decision loop can mean millions saved.

A compelling case snapshot comes from Meesho, the Indian social commerce platform, which integrated Amazon Forecast into its operational analytics. As Ravindra Yadav, Principal Data Scientist at Meesho, shared, “Weekly and daily demand predictions saw a 20% improvement in accuracy after implementing real-time forecasting pipelines, enabling better seller recommendations and inventory planning.” By combining Amazon Forecast with streaming inputs, Meesho was able to ingest behavioral signals from millions of concurrent users, apply demand models instantly, and push stock-level adjustments directly to sellers.

Another practical example is real-time retail demand forecasting during flash sales. Consider a large e-commerce player during its annual festival sale. Streaming systems ingest purchase events, cart additions, and browsing behavior as they happen. Features such as product velocity (units sold per second), conversion rate changes, and abandonment trends are computed in-flight. A neural forecasting model, trained on historical event-based spikes, projects near-future demand at SKU and location granularity. These forecasts then trigger automated procurement and warehouse allocation workflows, ensuring that high-demand items remain in stock and shipping delays are minimized. Without streaming integration, by the time batch forecasts are processed, the sale could already be over, leaving millions in unrealized revenue.
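As an illustration of computing a feature in-flight, the sketch below tracks product velocity (units sold per second) over a sliding time window. The class name and event values are hypothetical, and the code assumes events arrive in timestamp order.

```python
from collections import deque


class SlidingVelocity:
    """Units sold per second over the most recent `window_seconds`."""

    def __init__(self, window_seconds):
        self.window_seconds = window_seconds
        self.events = deque()  # (timestamp, units) pairs, oldest first
        self.total_units = 0

    def add(self, timestamp, units):
        self.events.append((timestamp, units))
        self.total_units += units
        self._evict(timestamp)

    def _evict(self, now):
        # Drop events that have fallen out of the window.
        while self.events and self.events[0][0] <= now - self.window_seconds:
            _, old_units = self.events.popleft()
            self.total_units -= old_units

    def velocity(self):
        return self.total_units / self.window_seconds


tracker = SlidingVelocity(window_seconds=10)
for ts, units in [(0, 5), (4, 3), (9, 2), (12, 10)]:
    tracker.add(ts, units)
```

After the last event, the purchase at timestamp 0 has left the 10-second window, so the velocity reflects the remaining 15 units, or 1.5 units per second.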

Under the hood, a typical architecture might look like this:

- Ingestion: Kafka topics or Google Pub/Sub receive events from multiple sources (e.g., ERP, web tracking, IoT).

- Processing: Azure Stream Analytics applies temporal joins and aggregates, transforming raw events into model-ready features.

- Inference: Models deployed via TensorFlow Serving, SageMaker, or Vertex AI return predictions with under 100 ms latency.

- Action: BI dashboards, automated triggers, or orchestration systems (e.g., Airflow) consume predictions and execute decisions.
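The four stages above can be sketched as chained Python generators. Everything here is a stand-in under stated assumptions: `ingest` replaces a Kafka or Pub/Sub consumer, `to_features` replaces a stream processor's windowed aggregation, `naive_model` replaces a served forecasting model, and the event fields are hypothetical.

```python
def ingest(raw_events):
    """Ingestion: stand-in for a message-broker consumer loop."""
    for event in raw_events:
        yield event


def to_features(events, window_seconds):
    """Processing: tumbling-window unit counts per SKU (events in time order)."""
    current_window, counts = None, {}
    for event in events:
        window = event["ts"] // window_seconds
        if current_window is not None and window != current_window:
            yield current_window, counts
            counts = {}
        current_window = window
        counts[event["sku"]] = counts.get(event["sku"], 0) + event["units"]
    if counts:
        yield current_window, counts


def infer(feature_windows, model):
    """Inference: stand-in for a call to a model-serving endpoint."""
    for window, counts in feature_windows:
        yield window, {sku: model(units) for sku, units in counts.items()}


def act(predictions, reorder_threshold):
    """Action: collect SKUs whose forecast exceeds a reorder threshold."""
    return [
        (window, sku)
        for window, forecast in predictions
        for sku, value in forecast.items()
        if value > reorder_threshold
    ]


events = [
    {"ts": 1, "sku": "A", "units": 4},
    {"ts": 3, "sku": "B", "units": 1},
    {"ts": 7, "sku": "A", "units": 9},
    {"ts": 8, "sku": "A", "units": 2},
]
naive_model = lambda units: units * 1.5  # placeholder for a trained forecaster
alerts = act(
    infer(to_features(ingest(events), window_seconds=5), naive_model),
    reorder_threshold=10,
)
```

Keeping each stage as a separate function mirrors the decoupling in the architecture: any stage can be swapped for a managed service without changing the others.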

For advanced teams, continuous evaluation of these systems is critical. Monitoring divergence between predicted and actual values in streaming windows in real-time ensures models stay calibrated. When drift exceeds thresholds, automated retraining jobs can be triggered, using the very same streaming pipeline as a feedback loop. This closes the gap between observation and adaptation, enabling businesses to operate in a true sense-and-respond mode.
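One simple way to monitor divergence is a rolling mean absolute error with a threshold, sketched below. The class name, window size, and threshold are illustrative assumptions; real deployments would tune them per metric and might use other error measures.

```python
from collections import deque


class ForecastDriftMonitor:
    """Tracks rolling mean absolute error and flags when it exceeds a threshold."""

    def __init__(self, window_size, mae_threshold):
        self.errors = deque(maxlen=window_size)  # oldest errors drop off automatically
        self.mae_threshold = mae_threshold

    def observe(self, predicted, actual):
        """Record one prediction/actual pair; return True if retraining is advised."""
        self.errors.append(abs(predicted - actual))
        window_full = len(self.errors) == self.errors.maxlen
        return window_full and self.rolling_mae() > self.mae_threshold

    def rolling_mae(self):
        return sum(self.errors) / len(self.errors)


monitor = ForecastDriftMonitor(window_size=3, mae_threshold=5.0)
pairs = [(100, 101), (100, 98), (100, 103), (100, 110), (100, 112)]
flags = [monitor.observe(p, a) for p, a in pairs]
```

In this made-up sequence only the final observation pushes the rolling error above the threshold; that flag is the point at which an automated retraining job could be triggered.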

High-Impact Use Cases

The true potential of AI in forecasting emerges when models move beyond historical batch predictions and into high-frequency, adaptive decision-making environments. Across industries, neural network architectures, reinforced by real-time streaming pipelines, are already transforming the way organizations plan, allocate, and execute operations. Four sectors in particular illustrate the operational and economic magnitude of this shift: supply chain management, workforce scheduling, dynamic pricing, and energy grid balancing.

1. Supply Chain Forecasting: Procurement and Distribution on the Fly

Traditional supply chain forecasts were often updated weekly or monthly, relying on aggregate data that masked short-term volatility. In contrast, machine learning forecasting models like LSTMs or Temporal Fusion Transformers ingest multi-source data (point-of-sale feeds, IoT sensor metrics, weather reports, geopolitical signals) in near real-time. This allows procurement managers to anticipate demand spikes days ahead and adapt shipment priorities dynamically.

For example, a global FMCG company integrated Amazon Forecast with Apache Kafka streams from its distribution centers. The system recalculated demand forecasts every hour, allowing the procurement team to reroute inventory from low-demand regions to high-demand hubs within a single shift. This agility reduced out-of-stock events by 27% and slashed excess holding costs by 15%. Such responsiveness is only possible when neural networks continuously adapt weights with each new datapoint, eliminating the lag of static statistical models.

Mathematically, the predictive function can be represented as:

ŷt+1=fθ(Xt−n:t,Zt)

Where:

- Xt−n:t = historical demand sequence (last n time steps)

- Zt = exogenous variables (weather, promotions, macroeconomic signals)

- fθ = deep learning model parameterized by θ
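Before any model can be trained, the raw series has to be arranged into the input and target pairs this formulation implies. The sketch below is one way to do that, assuming the window holds the last n demand values up to and including time t; the function name and sample data are hypothetical.

```python
def make_training_pairs(demand, exogenous, n):
    """Build ((window, z_t), next_value) pairs from aligned sequences.

    demand:    list of demand values, one per time step
    exogenous: list of exogenous feature tuples, aligned with `demand`
    n:         window length (assumed to end at and include time t)
    """
    pairs = []
    for t in range(n - 1, len(demand) - 1):
        window = demand[t - n + 1 : t + 1]  # last n demand values up to t
        z_t = exogenous[t]                  # exogenous variables at time t
        target = demand[t + 1]              # value the model should predict
        pairs.append(((window, z_t), target))
    return pairs


demand = [5, 6, 7, 8, 9]
promo_flag = [(0,), (1,), (0,), (1,), (0,)]  # made-up promotion indicator
pairs = make_training_pairs(demand, promo_flag, n=2)
```

Each pair feeds the model one window and one set of exogenous variables, and the target is the demand one step ahead.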

2. Workforce Scheduling: AI-Driven Staffing Optimization

Workforce management, especially in high-variability environments like hospitals, call centers, or assembly lines, benefits immensely from AI in forecasting. Instead of manual scheduling based on historical averages, RNN-based models can detect demand surges, such as an influx of ER admissions during flu season or spikes in call center traffic after a product launch, and recommend precise staffing levels.

One Southeast Asian manufacturing firm deployed DeepAR models connected to IoT-enabled machinery logs. The system could predict downtime events and automatically adjust shift schedules to align skilled labor with expected production loads. This reduced overtime costs by 18% while boosting equipment utilization rates. In healthcare, such systems have been shown to reduce nurse understaffing incidents by up to 25%, thereby directly improving patient care quality.

3. Dynamic Pricing: Demand-Sensitive Revenue Optimization

Dynamic pricing has evolved far beyond the static “if-then” rules of early airline yield management systems. Neural networks can now process real-time search queries, booking patterns, competitor pricing data, and even weather forecasts to set optimal prices at millisecond intervals.

Consider a ride-hailing platform that integrates transformer-based demand forecasting with reinforcement learning algorithms. When a sudden rainstorm hits a city district, the system detects a surge in ride requests via Kafka streams, forecasts short-term demand peaks, and dynamically adjusts fares in response. Similarly, e-commerce giants leverage these models during flash sales to avoid both underpricing (leaving revenue on the table) and overpricing (driving away customers).

A controlled trial conducted by an online travel platform found that integrating streaming forecasts into pricing engines improved revenue per available seat kilometer (RASK) by 6.4%, a significant margin in the low-margin airline sector.

4. Energy Grid Balancing: Forecasting for Renewable Integration

In power grid operations, the unpredictability of renewable sources like wind and solar creates balancing challenges. Machine learning forecasting models trained on weather radar data, turbine telemetry, and consumption patterns can predict fluctuations in both generation and demand with minute-level granularity.

For instance, a European utility company uses Google Cloud Dataflow to ingest continuous sensor readings from solar farms, feeding them into a temporal convolutional network (TCN) for near-real-time generation forecasts. The predictions enable grid operators to pre-emptively dispatch reserve capacity or adjust storage battery usage, maintaining stability without relying on expensive fossil fuel peaker plants.

During a 2024 pilot, the utility achieved a 12% improvement in renewable utilization rates and a 9% reduction in curtailment events, outcomes that directly translated into both economic and environmental gains.

In all these scenarios, the competitive edge lies not just in accuracy but in the ability to forecast, decide, and act in seconds. Real-time analytics, when paired with scalable, cloud-native architectures and robust data governance, enable decision-making loops that were once impossible with human-led or batch-oriented models. As industries grow more volatile and data-rich, these capabilities will define operational excellence in the next decade.

AI Tools in Use and the Risks That Come with Them

The modern forecasting ecosystem is shaped by a new breed of AI tools that make high-dimensional, multi-source predictions accessible to both data science teams and business analysts. Among these, Facebook Prophet, DeepAR, and BigQuery ML have emerged as cornerstone technologies.

Facebook Prophet is particularly valued for its ability to handle seasonality, holidays, and trend shifts with minimal tuning. Its additive model structure,

y(t)=g(t)+s(t)+h(t)+ϵt

where g(t) models the trend, s(t) models seasonality, and h(t) incorporates holidays or known events, allows teams to capture predictable patterns while leaving room for stochastic noise. This makes Prophet highly effective for businesses with recurring seasonal spikes where domain knowledge can be explicitly encoded into the model, for example, during a Black Friday sale.
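The additive structure can be illustrated with a toy decomposition in plain Python. This is not Prophet's implementation (Prophet fits a piecewise trend and Fourier-series seasonality from data); the linear trend, single sinusoid, and holiday uplift below are hand-picked assumptions.

```python
import math


def trend(t, base=100.0, slope=0.5):
    """g(t): a simple linear trend."""
    return base + slope * t


def seasonality(t, period=7, amplitude=10.0):
    """s(t): a single sinusoid with the given period."""
    return amplitude * math.sin(2 * math.pi * t / period)


def holiday_effect(t, holidays):
    """h(t): a fixed uplift on listed days, zero otherwise."""
    return holidays.get(t, 0.0)


def additive_forecast(t, holidays):
    """Sum of trend, seasonality, and holiday terms; noise is omitted from the point forecast."""
    return trend(t) + seasonality(t) + holiday_effect(t, holidays)


holidays = {14: 40.0}  # hypothetical uplift for a sale on day 14
baseline_day = additive_forecast(7, holidays)
sale_day = additive_forecast(14, holidays)
```

Because the components simply add, a business user can read off how much of a forecast comes from the calendar event versus the underlying trend.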

DeepAR, developed by Amazon, takes a fundamentally different approach. Built on autoregressive recurrent neural networks (RNNs), DeepAR learns from multiple related time series simultaneously. This cross-learning capability means it can identify latent patterns that traditional univariate models would miss. For example, in retail, DeepAR can learn from the sales patterns of hundreds of SKUs, enabling more accurate forecasts for a new product launch even with limited historical data. The model’s architecture supports probabilistic forecasting, returning full predictive distributions rather than point estimates, which is critical in risk-sensitive environments where upper and lower bounds matter.
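To show what working with a predictive distribution looks like, the sketch below derives quantile bounds from sampled forecasts and scores a quantile with the pinball loss. It does not call DeepAR; the sample values and function names are assumptions standing in for draws from any probabilistic forecaster.

```python
def empirical_quantile(samples, q):
    """Linear-interpolated quantile of a list of samples, 0 <= q <= 1."""
    ordered = sorted(samples)
    position = q * (len(ordered) - 1)
    lower = int(position)
    upper = min(lower + 1, len(ordered) - 1)
    fraction = position - lower
    return ordered[lower] + fraction * (ordered[upper] - ordered[lower])


def pinball_loss(actual, predicted_quantile, q):
    """Quantile loss: under-forecasts are weighted by q, over-forecasts by 1 - q."""
    diff = actual - predicted_quantile
    return max(q * diff, (q - 1) * diff)


# Hypothetical sampled demand for one future time step.
samples = [80, 90, 95, 100, 105, 110, 130]
p10 = empirical_quantile(samples, 0.1)
p50 = empirical_quantile(samples, 0.5)
p90 = empirical_quantile(samples, 0.9)
```

A planner might stock to the upper quantile for items where stockouts are costly and to the median where holding costs dominate, a choice a single point estimate cannot support.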

BigQuery ML brings predictive modeling directly into Google’s cloud-native data warehouse. This reduces the need for separate ETL pipelines that move data to an external training environment, allowing teams to train and deploy models (including linear regression, boosted trees, and even TensorFlow models) using SQL syntax. When coupled with streaming ingestion via Google Pub/Sub and Dataflow, BigQuery ML supports near real-time forecasting at massive scale. Its integration with BI tools like Looker or Looker Studio (formerly Data Studio) further enables end-users to act on forecasts without having to navigate complex ML environments.

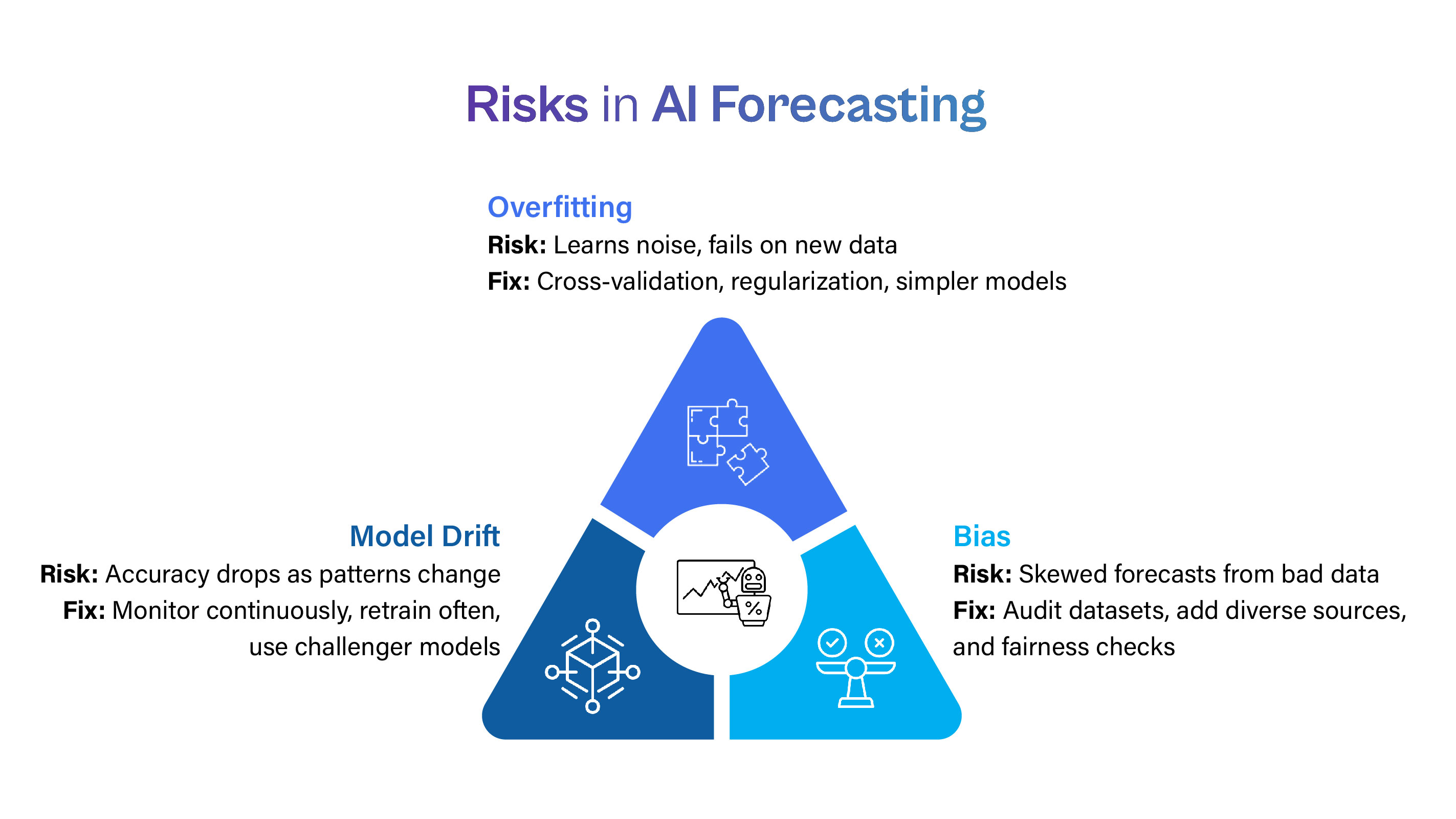

Yet, as powerful as these machine learning forecasting models are, they come with technical and operational risks that cannot be ignored.

Overfitting

Overfitting occurs when models capture noise rather than the underlying signal. DeepAR, with its high-capacity neural architecture, is particularly susceptible if hyperparameters are not tuned carefully or if the training dataset is too narrow. Overfitted models may perform exceptionally well on historical data but collapse when confronted with unseen conditions such as sudden supply chain disruptions or unexpected market shifts.

Bias

Bias is another persistent risk. In forecasting, bias can creep in through skewed historical data (e.g., past sales that reflect stockouts rather than true demand) or through features that inadvertently encode socioeconomic disparities. Facebook Prophet’s explicit inclusion of holiday effects, for example, could lead to bias if those holidays are not representative of all target markets.

Model Drift

Model drift represents a more insidious challenge. Over time, the statistical properties of data can change, a phenomenon known as concept drift. In energy demand forecasting, for example, the rapid adoption of EV charging infrastructure might alter consumption patterns in ways that models trained on pre-EV adoption data cannot anticipate. Without continuous retraining or monitoring, even well-calibrated models like those in BigQuery ML can silently degrade, delivering increasingly inaccurate predictions.

Mitigating these risks requires a governance framework that combines automated monitoring with human oversight. Techniques like backtesting on rolling windows, bias detection through counterfactual analysis, and drift detection using metrics like the Population Stability Index (PSI) can help ensure that AI forecasting systems remain robust. Equally important is embedding explainability into the workflow. Tools like SHAP (SHapley Additive exPlanations) can provide visibility into feature contributions, enabling business teams to trust, challenge, and refine model outputs before operational decisions are made.
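As a sketch of drift detection with the Population Stability Index, the function below compares the binned distribution of a feature in a reference sample against a recent sample. The bin edges, the small floor used to avoid taking the logarithm of zero, and the sample data are assumptions; teams typically choose bins from the reference data and set their own alert thresholds.

```python
import math


def population_stability_index(expected, actual, bin_edges, eps=1e-6):
    """PSI between two samples using shared bin edges.

    bin_edges: ascending cut points; values fall into len(bin_edges) + 1 bins.
    eps:       floor on bin proportions to avoid log(0).
    """

    def proportions(values):
        counts = [0] * (len(bin_edges) + 1)
        for v in values:
            # Bin index = number of cut points at or below the value.
            index = sum(1 for edge in bin_edges if v >= edge)
            counts[index] += 1
        return [max(c / len(values), eps) for c in counts]

    p_expected = proportions(expected)
    p_actual = proportions(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(p_expected, p_actual))


reference = [1, 2, 3, 4, 5, 6, 7, 8]     # made-up training-period values
recent = [5, 6, 7, 8, 9, 10, 11, 12]     # made-up shifted values
psi_same = population_stability_index(reference, reference, bin_edges=[3, 6])
psi_shifted = population_stability_index(reference, recent, bin_edges=[3, 6])
```

Identical distributions give a PSI of zero, and the value grows as the recent sample moves away from the reference; a commonly cited rule of thumb treats values above roughly 0.25 as a significant shift, though the right threshold depends on the application.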

In practice, the organizations that succeed with AI in forecasting using tools like Prophet, DeepAR, and BigQuery ML are those that treat these operational AI tools not as “install and forget” solutions, but as evolving components in a living ecosystem, continuously tuned, monitored, and aligned with business realities.

Forecast Smarter, Operate Faster

From airport turnarounds to energy grids, AI-driven forecasting is no longer optional; it is the backbone of real-time decision-making. Neural networks, streaming pipelines, and cloud-native tools are already transforming how leading enterprises plan and execute.

Discover how Cogent Infotech can help you embed operational AI into your business workflows and stay ahead of disruption.

